As companies adopt artificial intelligence (AI) more widely, centralized management becomes essential for controlling both security and cost when accessing AI models. To address these challenges, AWS has published new guidance, the Multi-Provider Generative AI Gateway reference architecture, which offers a unified access point that supports multiple AI providers and provides comprehensive governance and monitoring capabilities.

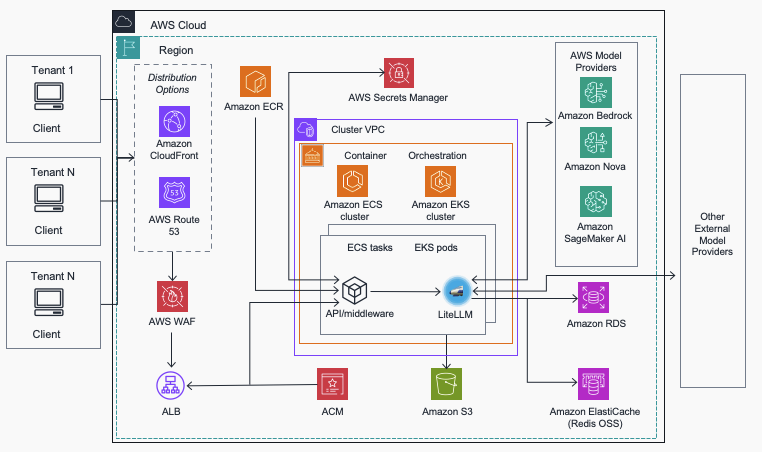

The Generative AI Gateway is presented as a reference architecture for organizations that want to implement end-to-end generative AI solutions. It brings together multiple models, data-enriched responses, and self-hosted agent capabilities. The solution combines direct access to the models available on Amazon Bedrock, a unified developer experience for Amazon SageMaker, and LiteLLM's management capabilities, which provide safer and more reliable access to models from external providers.

LiteLLM is an open-source project that addresses common challenges organizations face when implementing generative AI workloads. It aims to simplify access to models from different providers and to standardize production operational requirements such as cost management, visibility, and prompt administration. The new Multi-Provider Generative AI Gateway reference architecture provides guidance for deploying LiteLLM on AWS, improving the management and governance of generative AI workloads.
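LiteLLM reduces provider fragmentation partly by naming models with a provider prefix (for example `bedrock/...` or `sagemaker/...`) behind one call signature. The sketch below imitates that idea with the standard library only, to show how a single entry point can dispatch to provider-specific adapters. `UnifiedClient`, the `fake_*` adapters, and the model IDs are hypothetical placeholders; this is not LiteLLM's actual API.

```python
from dataclasses import dataclass, field
from typing import Callable, Dict, List

# A chat message in the common {"role": ..., "content": ...} shape.
Message = Dict[str, str]
# A provider adapter receives the provider-specific model ID and the messages.
Adapter = Callable[[str, List[Message]], str]


def fake_bedrock(model_id: str, messages: List[Message]) -> str:
    # Hypothetical stand-in for a real Amazon Bedrock call.
    return f"[bedrock:{model_id}] {messages[-1]['content']}"


def fake_sagemaker(endpoint: str, messages: List[Message]) -> str:
    # Hypothetical stand-in for invoking a SageMaker endpoint.
    return f"[sagemaker:{endpoint}] {messages[-1]['content']}"


@dataclass
class UnifiedClient:
    """Hypothetical gateway client that routes 'provider/model' strings to adapters."""
    adapters: Dict[str, Adapter] = field(default_factory=dict)

    def register(self, provider: str, adapter: Adapter) -> None:
        self.adapters[provider] = adapter

    def completion(self, model: str, messages: List[Message]) -> str:
        # Split on the first '/' only, because model IDs may contain more slashes.
        provider, sep, model_id = model.partition("/")
        if not sep or not model_id:
            raise ValueError(f"expected 'provider/model', got {model!r}")
        if provider not in self.adapters:
            raise KeyError(f"no adapter registered for provider {provider!r}")
        return self.adapters[provider](model_id, messages)


client = UnifiedClient()
client.register("bedrock", fake_bedrock)
client.register("sagemaker", fake_sagemaker)

msgs = [{"role": "user", "content": "Hello"}]
print(client.completion("bedrock/example-model-id", msgs))    # [bedrock:example-model-id] Hello
print(client.completion("sagemaker/example-endpoint", msgs))  # [sagemaker:example-endpoint] Hello
```

Callers only ever see `completion(model, messages)`; adding a provider means registering one more adapter rather than changing application code.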

Organizations scaling generative AI initiatives face complex challenges such as provider fragmentation and the need for consistent security policies. The problem intensifies when different models require different APIs, authentication methods, and billing structures, which makes decentralized governance hard to sustain.

The Multi-Provider Generative AI Gateway offers centralized access that hides the complexity of managing multiple providers behind a managed interface. This allows organizations to integrate with different AI providers while maintaining centralized control, high reliability, and visibility.
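One common way a gateway keeps reliability high behind a single interface is ordered fallback: if the primary deployment fails, the same request is retried on the next one. The sketch below is a generic illustration of that pattern under this assumption; `ProviderError`, `call_with_fallback`, and the deployment names are hypothetical and do not reproduce LiteLLM's own routing logic.

```python
from typing import Callable, List, Sequence, Tuple


class ProviderError(Exception):
    """Hypothetical error raised by a provider call (throttling, outage, ...)."""


Call = Callable[[str], str]


def call_with_fallback(prompt: str, deployments: Sequence[Tuple[str, Call]]) -> Tuple[str, str]:
    """Try each (name, call) pair in order and return (name, response) from the first success."""
    errors: List[str] = []
    for name, call in deployments:
        try:
            return name, call(prompt)
        except ProviderError as exc:
            # Remember why this deployment failed, then try the next one.
            errors.append(f"{name}: {exc}")
    raise ProviderError("all deployments failed: " + "; ".join(errors))


def primary(prompt: str) -> str:
    raise ProviderError("throttled")  # simulate an unavailable primary provider


def secondary(prompt: str) -> str:
    return f"answer to {prompt!r}"


name, reply = call_with_fallback("What is 2+2?", [("primary", primary), ("secondary", secondary)])
print(name, reply)  # secondary answer to 'What is 2+2?'
```

Only errors the gateway considers retryable (here, the hypothetical `ProviderError`) trigger a fallback; other exceptions propagate unchanged, which avoids silently masking programming errors.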

The gateway supports several deployment patterns on AWS, including Amazon ECS and Amazon EKS, so it can adapt to different organizational needs. It also offers network architecture options that balance security and accessibility, suiting both companies that need public, global deployments and those that require private, internal-only access.

The gateway also includes a centralized administrative interface for user and team management, budget control, and API key management. In addition, it supports multiple model providers, so customers can choose the most appropriate model for each workload regardless of where it comes from.
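To make budget control and API key management concrete, the sketch below ties each issued key to a team with a spending cap and refuses any charge that would push the team over it. `KeyManager`, `Team`, `BudgetExceeded`, the `sk-` key prefix, and the USD unit are all hypothetical choices for illustration, not the gateway's actual data model.

```python
import secrets
from dataclasses import dataclass
from decimal import Decimal
from typing import Dict


class BudgetExceeded(Exception):
    """Raised when a charge would take a team past its budget."""


@dataclass
class Team:
    name: str
    max_budget: Decimal            # spending cap, assumed to be in USD
    spend: Decimal = Decimal("0")  # running total charged so far


class KeyManager:
    """Hypothetical in-memory store mapping gateway API keys to teams."""

    def __init__(self) -> None:
        self.teams: Dict[str, Team] = {}
        self.keys: Dict[str, str] = {}  # API key -> team name

    def add_team(self, name: str, max_budget: str) -> None:
        self.teams[name] = Team(name, Decimal(max_budget))

    def issue_key(self, team: str) -> str:
        if team not in self.teams:
            raise KeyError(team)
        key = "sk-" + secrets.token_hex(16)  # random, hard-to-guess key
        self.keys[key] = team
        return key

    def charge(self, key: str, cost: str) -> Decimal:
        """Record a request's cost and return the remaining budget; reject overspending."""
        team = self.teams[self.keys[key]]  # raises KeyError for unknown keys
        amount = Decimal(cost)
        if team.spend + amount > team.max_budget:
            raise BudgetExceeded(f"{team.name} would exceed {team.max_budget}")
        team.spend += amount
        return team.max_budget - team.spend


km = KeyManager()
km.add_team("data-science", "10.00")
key = km.issue_key("data-science")
print(km.charge(key, "7.50"))  # 2.50
# A further charge of "3.00" on the same key would raise BudgetExceeded.
```

`Decimal` avoids floating-point rounding in money arithmetic. The sketch charges once the cost is known; a real gateway may also need to estimate cost before forwarding a request.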

As AI workloads grow, so do visibility requirements. The architecture integrates Amazon CloudWatch for monitoring and analysis. Integration with Amazon SageMaker extends the gateway's capabilities by simplifying access to custom and third-party models.
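As an illustration of the kind of data a gateway might send to Amazon CloudWatch, the sketch below turns per-request records into entries for the CloudWatch `put_metric_data` call. The record fields, metric names, dimensions, and namespace are hypothetical; the guidance does not specify them here. `publish` takes an already-created client (for example `boto3.client("cloudwatch")`) so the conversion logic can run without AWS credentials.

```python
from dataclasses import dataclass
from typing import Any, Dict, List


@dataclass
class RequestRecord:
    """Hypothetical summary of one request handled by the gateway."""
    team: str
    model: str
    latency_ms: float
    total_tokens: int


def to_metric_data(records: List[RequestRecord]) -> List[Dict[str, Any]]:
    """Convert request records into CloudWatch MetricData entries."""
    data: List[Dict[str, Any]] = []
    for r in records:
        # Dimensions let CloudWatch slice the metrics per team and per model.
        dims = [{"Name": "Team", "Value": r.team}, {"Name": "Model", "Value": r.model}]
        data.append({"MetricName": "Latency", "Dimensions": dims,
                     "Value": r.latency_ms, "Unit": "Milliseconds"})
        data.append({"MetricName": "TotalTokens", "Dimensions": dims,
                     "Value": float(r.total_tokens), "Unit": "Count"})
    return data


def publish(cloudwatch_client: Any, namespace: str, records: List[RequestRecord]) -> None:
    """Send the metrics with a CloudWatch client such as boto3.client("cloudwatch")."""
    data = to_metric_data(records)
    # Batches of 20 entries stay well within the per-call limit of put_metric_data.
    for i in range(0, len(data), 20):
        cloudwatch_client.put_metric_data(Namespace=namespace, MetricData=data[i:i + 20])


sample = [RequestRecord("data-science", "bedrock/example-model-id", 412.0, 950)]
print(len(to_metric_data(sample)))  # 2
# With AWS credentials configured (namespace is a hypothetical example):
# publish(boto3.client("cloudwatch"), "ExampleGenAIGateway", sample)
```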

With the Multi-Provider Generative AI Gateway, organizations can build their generative AI solutions in a structured way, combining the AWS service ecosystem with complementary open-source tools. This improves cost management and security and allows AI capabilities to be rolled out quickly and efficiently.

Source: MiMub in Spanish