ByteDance, the company behind popular apps like TikTok, has made significant strides in processing and understanding video with multimodal language models. Its collaboration with Amazon Web Services (AWS) has been key to this work, allowing the company to overcome long-standing challenges in video content analysis. With this technology, ByteDance handles billions of videos every day, keeping the process efficient while enforcing community guidelines to offer a safer experience for its users.

ByteDance’s mission is to “Inspire Creativity and Enrich Lives.” To achieve this goal, the company has created various content platforms, including CapCut and Mobile Legends: Bang Bang. Using an advanced machine learning engine, ByteDance scans and classifies a vast number of videos, identifying and flagging those that do not meet established standards. Deploying this workload on Amazon EC2 Inf2 instances has significantly optimized the process, cutting inference costs in half.

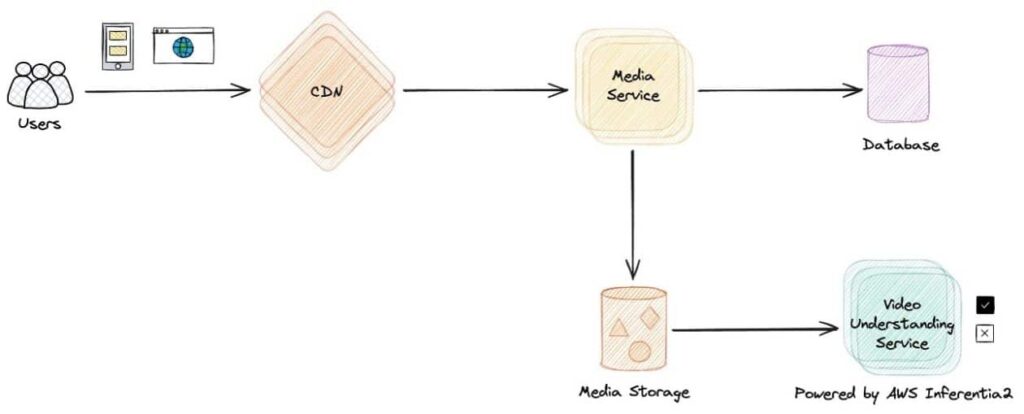

The recent integration of multimodal language models represents a significant shift in AI-driven content analysis. These models can process different types of content, including text, images, audio, and video, which lets them approximate how humans perceive and understand information. ByteDance has developed a large language model (LLM) architecture that maximizes performance across various applications by integrating multiple input streams, giving a deeper understanding of the content being analyzed.
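To make the idea of integrating multiple input streams concrete, here is a minimal sketch in plain Python. It is not ByteDance's architecture, which the article does not describe in detail. It assumes a common pattern in which each modality's features are linearly projected to a shared width and then concatenated into one sequence for the language model. The names `Projector`, `fuse_streams`, and `MODEL_DIM`, as well as all dimensions and values, are hypothetical.

```python
import random
from dataclasses import dataclass


@dataclass
class Projector:
    """Linear map from one modality's feature size to the shared model width."""
    weights: list  # in_dim rows, each holding out_dim floats

    @classmethod
    def random(cls, in_dim, out_dim, seed):
        # Random weights stand in for a trained projection (assumption).
        rng = random.Random(seed)
        return cls([[rng.uniform(-1, 1) for _ in range(out_dim)]
                    for _ in range(in_dim)])

    def __call__(self, features):
        out_dim = len(self.weights[0])
        return [
            [sum(vec[i] * self.weights[i][j] for i in range(len(vec)))
             for j in range(out_dim)]
            for vec in features
        ]


def fuse_streams(streams, projectors):
    """Project every stream to the shared width and join them into one sequence.

    Each element is tagged with its modality so later layers could tell
    video frames, audio chunks, and text tokens apart.
    """
    sequence = []
    for modality, features in streams.items():
        for vec in projectors[modality](features):
            sequence.append((modality, vec))
    return sequence


MODEL_DIM = 4  # hypothetical shared embedding width

projectors = {
    "video": Projector.random(6, MODEL_DIM, seed=0),
    "audio": Projector.random(3, MODEL_DIM, seed=1),
    "text": Projector.random(2, MODEL_DIM, seed=2),
}

# Toy per-modality features with different sizes (illustrative values only).
streams = {
    "video": [[0.1] * 6, [0.2] * 6],
    "audio": [[0.5] * 3],
    "text": [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]],
}

fused = fuse_streams(streams, projectors)
print(len(fused), "items, each of width", len(fused[0][1]))
```

The point of the sketch is only that once every stream shares one width, a single model can attend over all of them together.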

Furthermore, ByteDance has implemented advanced techniques such as tensor parallelism, which splits a model's weight tensors across multiple accelerator cores, and the use of static processing groups, resulting in significant improvements in latency and model performance. These optimizations are essential for handling the growing volume of generated content and responding quickly to market demands.
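As an illustration of tensor parallelism in general, the following sketch shards the columns of a weight matrix, computes each partial product separately, and gathers the results. It is a toy in plain Python, not ByteDance's or AWS's implementation. The function names and the example matrices are hypothetical, and the "shards" here run sequentially in one process rather than on separate accelerator cores.

```python
def matmul(x, w):
    """Multiply a (rows x inner) matrix by an (inner x cols) matrix."""
    return [[sum(a * b for a, b in zip(row, col)) for col in zip(*w)]
            for row in x]


def split_columns(w, num_shards):
    """Split a weight matrix into equal column blocks, one per shard."""
    cols = len(w[0])
    if cols % num_shards != 0:
        raise ValueError("column count must be divisible by num_shards")
    width = cols // num_shards
    return [[row[s * width:(s + 1) * width] for row in w]
            for s in range(num_shards)]


def tensor_parallel_matmul(x, w, num_shards):
    """Column-parallel matmul: each shard holds a slice of the weights.

    In a real deployment each partial product would run on its own core;
    here they run one after another to keep the example self-contained.
    """
    shards = split_columns(w, num_shards)
    partials = [matmul(x, shard) for shard in shards]
    # Gather step: concatenate the partial outputs along the column axis.
    return [[value for part in partials for value in part[r]]
            for r in range(len(x))]


# Toy activations (2 x 3) and weights (3 x 4); values are illustrative.
x = [[1, 2, 3],
     [4, 5, 6]]
w = [[1, 0, 2, 1],
     [0, 1, 1, 3],
     [2, 1, 0, 1]]

full = matmul(x, w)
sharded = tensor_parallel_matmul(x, w, num_shards=2)
print(sharded == full)
```

Because each shard needs only its own slice of the weights, a layer too large or too slow for one core can be spread across several, at the cost of a gather step to reassemble the output.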

Looking ahead, ByteDance plans to develop a unified multimodal tokenizer that will map all types of content into a common semantic space. This could improve efficiency and consistency in content comprehension, laying the groundwork for a more inclusive and secure system in today’s digital ecosystem.

The collaboration with AWS has been crucial not only in addressing the challenges of video analysis, but also in opening up new possibilities in artificial intelligence. As ByteDance continues to expand its capabilities and experiment with new technologies, it solidifies its position as a leader in innovation in a constantly evolving digital world.

Referrer: MiMub in Spanish