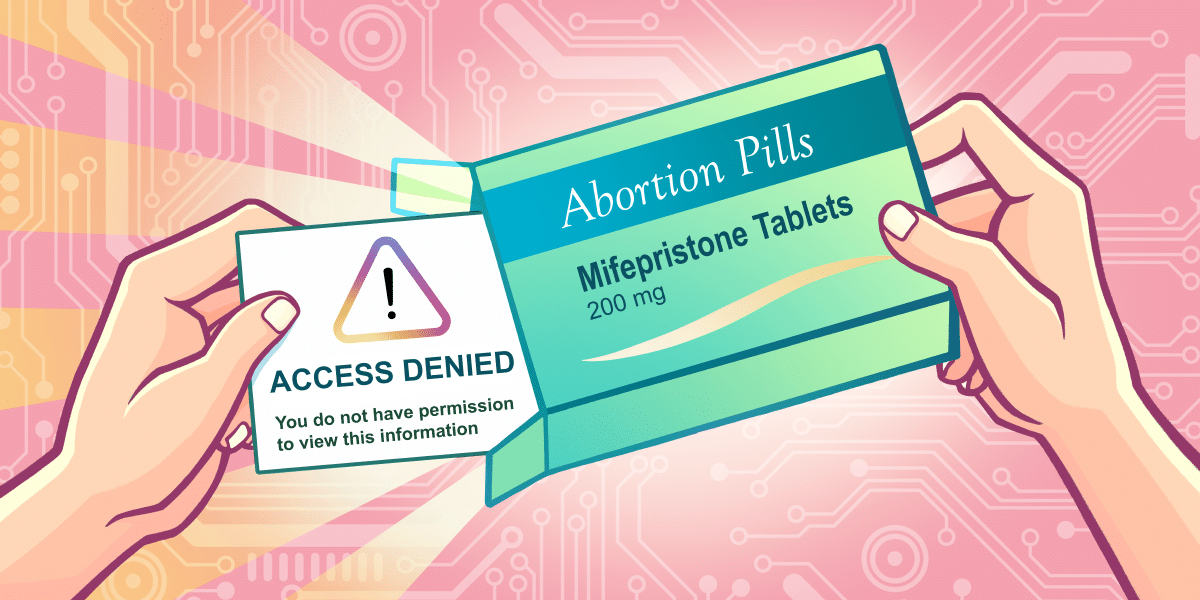

A recent initiative has raised concerns about the challenges users face when sharing information about reproductive health on social media platforms like Instagram. One case that illustrates the issue is that of Samantha Shoemaker, who shared content about access to abortion pills, including videos and resources from legitimate organizations. Her material was removed for alleged violations of the policies of Meta, Instagram’s parent company.

Meta’s “Dangerous Organizations and Individuals” (DOI) policy, originally intended to combat online extremism, has expanded alarmingly in its application. The Electronic Frontier Foundation (EFF) has pointed out that this framework has been applied too broadly, disproportionately affecting already marginalized voices and drawing criticism for the lack of transparency in its implementation.

Although Meta has added clarifications to its Transparency Center, the problems persist. Shoemaker, who shared informative and factual content about abortion pills, was notified that her posts violated policies that should apply only to content related to violent extremism. Such confusion is common: the moderation process can mistakenly classify accurate medical information as illegal or dangerous activity.

The situation is particularly relevant at a time when access to abortion information is threatened by increasing political restrictions. Platforms must act carefully to avoid silencing discourse that is crucial to the health and well-being of many individuals. When content is removed, users should also receive clear and precise explanations of the reasons behind the decision.

Those affected by the removal of their content have several options. They can appeal directly through the app, request a review by Meta’s Oversight Board, document their case, and even share their experiences with projects aimed at highlighting these injustices.

The EFF emphasizes that the dissemination of information about reproductive health is not dangerous but necessary. Platforms must facilitate access to crucial information and provide clarity on how posts are monitored, as well as the process for appealing decisions that may be unjust.

—

via: MiMub in Spanish