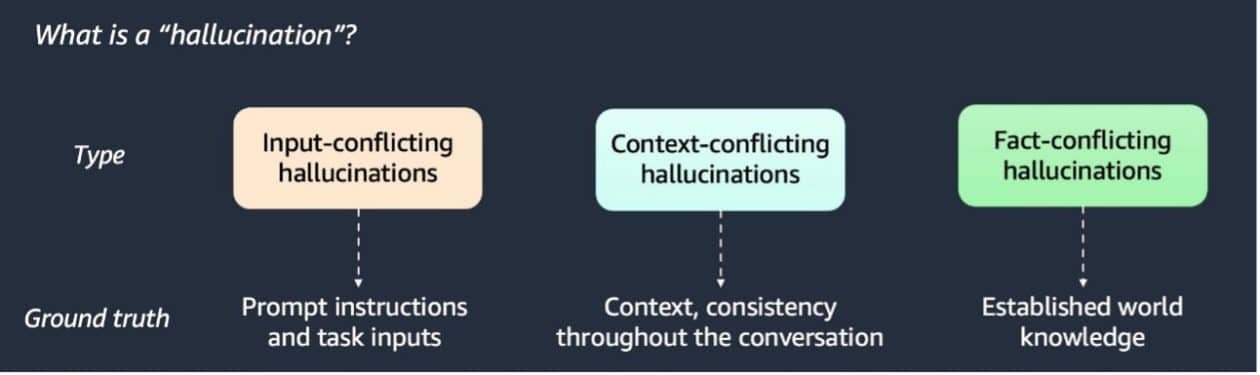

Generative artificial intelligence is growing rapidly, and techniques such as Retrieval-Augmented Generation (RAG) have become essential for improving the accuracy and reliability of generated responses. RAG supplies a language model with additional data that was not part of its training, which helps reduce false or misleading output, a phenomenon known in the AI field as “hallucination.”

As these systems become increasingly integrated into everyday life and critical decision-making processes, the ability to detect and mitigate such hallucinations becomes crucial. Currently, most detection techniques focus exclusively on the question and answer. However, the incorporation of additional context, facilitated by RAG, opens the door to new strategies that could address this issue more effectively.

Comparing different methods for building a basic hallucination detection system in RAG applications reveals the pros and cons of each technique in terms of accuracy, recall, and cost. New methodologies are being developed that can be integrated quickly into RAG systems, improving the quality of the responses provided by artificial intelligence.
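
Trade-offs of this kind are usually quantified by scoring each detector against labeled examples. The following is a minimal sketch, assuming boolean labels where `True` means "hallucination"; the function name `evaluate` and the sample labels are illustrative and do not come from the original evaluation.

```python
def evaluate(predicted: list[bool], actual: list[bool]) -> dict[str, float]:
    """Compute accuracy, precision, and recall for a hallucination detector.

    predicted[i] is the detector's verdict and actual[i] is the human label;
    True means "this answer is a hallucination".
    """
    if len(predicted) != len(actual):
        raise ValueError("predicted and actual must have the same length")

    pairs = list(zip(predicted, actual))
    tp = sum(1 for p, a in pairs if p and a)          # caught hallucinations
    fp = sum(1 for p, a in pairs if p and not a)      # false alarms
    fn = sum(1 for p, a in pairs if not p and a)      # missed hallucinations
    tn = sum(1 for p, a in pairs if not p and not a)  # correct "all clear"

    total = len(pairs)
    return {
        "accuracy": (tp + tn) / total if total else 0.0,
        "precision": tp / (tp + fp) if (tp + fp) else 0.0,
        "recall": tp / (tp + fn) if (tp + fn) else 0.0,
    }


# Made-up labels, for illustration only.
metrics = evaluate(
    predicted=[True, False, True, False],
    actual=[True, True, False, False],
)
```

A detector with high precision but low recall rarely raises false alarms yet misses many hallucinations; one with high recall catches most of them, possibly at the price of more false alarms.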

Within this framework, three types of hallucinations are identified, and several detection methods have been proposed, most notably large language model (LLM)-based detectors, semantic similarity detectors, and the stochastic BERT verifier. Results so far show that the effectiveness of these methods varies across the datasets used for evaluation, which include Wikipedia articles and synthetically generated data.

The approach described relies on tools such as Amazon SageMaker and Amazon S3, which require an AWS account. Its success hinges on storing three crucial elements: the context relevant to the user’s query, the question posed, and the response produced by the language model.
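
The three stored elements can be sketched as a small record type. This is only an illustration: the class name `RagRecord`, its field names, and the JSON Lines layout are assumptions, not a format prescribed by the source or by AWS.

```python
import json
from dataclasses import asdict, dataclass


@dataclass
class RagRecord:
    """One RAG interaction, holding the three elements a detector needs."""

    context: str   # passages retrieved for the user's query
    question: str  # the question the user asked
    answer: str    # the response produced by the language model


def to_json_line(record: RagRecord) -> str:
    """Serialize a record as one JSON line, e.g. for a file kept in object storage."""
    return json.dumps(asdict(record), ensure_ascii=False)


def from_json_line(line: str) -> RagRecord:
    """Rebuild a record from a line written by to_json_line."""
    return RagRecord(**json.loads(line))


record = RagRecord(
    context="Amazon S3 is an object storage service.",
    question="What is Amazon S3?",
    answer="Amazon S3 is an object storage service.",
)
line = to_json_line(record)
```

Keeping all three fields together means any detector can be run later on the same record without querying the RAG system again.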

The LLM-based method classifies each response from the RAG system by assessing whether it is inconsistent with the context. Semantic similarity and token comparison techniques, by contrast, detect inconsistencies from different angles: the former compares the meaning of the response with that of the context, while the latter compares their wording. The stochastic BERT verifier has demonstrated high recall, but it can be costly to run.
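
Two of these ideas can be sketched with the standard library alone. The token comparison below is a deliberately simple overlap measure, and the prompt wording for the LLM-based check is hypothetical; neither is the exact formulation evaluated in the original comparison.

```python
import re


def tokenize(text: str) -> list[str]:
    """Lowercase the text and split it into alphanumeric tokens."""
    return re.findall(r"[a-z0-9]+", text.lower())


def token_hallucination_score(answer: str, context: str) -> float:
    """Share of answer tokens that never appear in the context.

    0.0 means every token is backed by the context; 1.0 means none is.
    """
    answer_tokens = tokenize(answer)
    if not answer_tokens:
        return 0.0  # an empty answer makes no unsupported claim
    context_tokens = set(tokenize(context))
    missing = sum(1 for tok in answer_tokens if tok not in context_tokens)
    return missing / len(answer_tokens)


def build_llm_prompt(context: str, question: str, answer: str) -> str:
    """Assemble a prompt asking a model to judge the answer (assumed wording)."""
    return (
        "Decide whether the answer contains claims that the context does not "
        "support. Reply with a number between 0 (fully supported) and "
        "1 (hallucinated).\n\n"
        f"Context: {context}\n"
        f"Question: {question}\n"
        f"Answer: {answer}\n"
        "Score:"
    )


context = "Paris is the capital of France."
supported = token_hallucination_score("Paris is the capital of France.", context)
unsupported = token_hallucination_score("Berlin hosts the parliament", context)
```

Here `supported` is 0.0 and `unsupported` is 0.75, because only "the" appears in the context. Token overlap is cheap but blind to paraphrase and to wrong facts expressed with familiar words, which is why it is usually paired with a stronger check.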

Comparisons between these methods suggest that the LLM-based approach offers a favorable balance between accuracy and cost. Consequently, a hybrid approach that combines a token similarity detector with an LLM-based one is recommended to address hallucinations effectively. Generative artificial intelligence applications clearly need this kind of adaptability and ongoing analysis. As RAG tools evolve, hallucination detection methods are becoming essential for improving reliability and building trust in these systems.
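
One plausible way to wire up such a hybrid is to run the cheap detector first and call the costly one only when the cheap check is not decisive. The ordering, the thresholds, and the stub detectors below are all assumptions made for illustration; the source only states that the two detectors are combined.

```python
import re
from typing import Callable

# A detector maps (context, question, answer) to a score in [0, 1],
# where a higher score means "more likely hallucinated".
Detector = Callable[[str, str, str], float]


def make_hybrid(
    cheap: Detector,
    costly: Detector,
    cheap_threshold: float = 0.6,
    costly_threshold: float = 0.5,
) -> Callable[[str, str, str], bool]:
    """Return a detector that consults `costly` only when `cheap` is unsure."""

    def detect(context: str, question: str, answer: str) -> bool:
        if cheap(context, question, answer) >= cheap_threshold:
            return True  # obvious mismatch: flag it without the costly call
        return costly(context, question, answer) >= costly_threshold

    return detect


def cheap_stub(context: str, question: str, answer: str) -> float:
    """Stand-in token check: share of answer tokens missing from the context."""
    tokens = re.findall(r"[a-z0-9]+", answer.lower())
    known = set(re.findall(r"[a-z0-9]+", context.lower()))
    return sum(1 for t in tokens if t not in known) / len(tokens) if tokens else 0.0


calls: list[str] = []  # records which answers reached the costly detector


def llm_stub(context: str, question: str, answer: str) -> float:
    """Stand-in for a real model call; hard-coded so the sketch is runnable."""
    calls.append(answer)
    return 0.9 if "berlin" in answer.lower() else 0.1


detect = make_hybrid(cheap_stub, llm_stub)
context = "Paris is the capital of France."
question = "What is the capital of France?"
answers = [
    "Paris is the capital of France.",
    "Berlin hosts the parliament.",
    "The capital of France is Berlin.",
]
results = [detect(context, question, a) for a in answers]
# results == [False, True, True]; the costly stub ran for two of three answers
```

The second answer is flagged by the token check alone, so no model call is spent on it. The third shares almost all of its tokens with the context and is caught only by the second stage, which is the case the hybrid design is meant to cover.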

Source: MiMub in Spanish