Amazon continues to expand its global e-commerce footprint, affecting the lives of millions of customers, employees, and suppliers. This growth brings with it the need to manage risk and handle claims related to workers’ compensation, transportation incidents, and other insurance matters. Risk managers oversee the full lifecycle of each claim, and because a claim involves an average of 75 documents, that work is difficult to manage.

Recently, an internal team at Amazon developed an AI-driven solution to streamline the processing of the data associated with these claims. The system generates structured summaries of fewer than 500 words, improving efficiency while maintaining high accuracy. However, the developers faced high inference costs and processing times of 3 to 5 minutes per claim, a burden that grows as new documents are added to a claim.

In light of these challenges, the team evaluated the Amazon Nova foundation models as alternatives. Performance testing showed that the Amazon Nova models, particularly Amazon Nova Lite, match or exceed the performance of other advanced models while offering significantly lower costs and greater speed. Specifically, Amazon Nova Lite proved to be twice as fast as the model currently in use and costs 98% less.

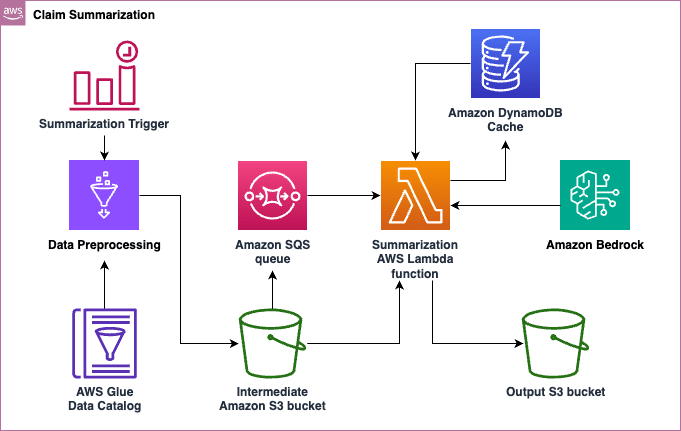

The claims summarization pipeline begins with AWS Glue processing the raw data, which is then stored in Amazon S3. Summarization jobs are queued through Amazon SQS, and AWS Lambda generates the summaries by calling foundation models in Amazon Bedrock. This approach filters out irrelevant data and focuses on what matters most, optimizing both cost and performance.
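
The Lambda step of this pipeline can be sketched roughly as follows. This is a minimal illustration, not the team's actual implementation: the SQS message shape (`bucket` and `key` fields), the prompt wording, the inference settings, and the model ID `amazon.nova-lite-v1:0` are all assumptions, and the model ID in particular should be checked against what is available in your Region. The S3 and Bedrock clients are passed in so the logic can be exercised without AWS access; in Lambda they would default to `boto3` clients.

```python
import json

# Assumed model ID for Amazon Nova Lite on Amazon Bedrock; verify for your Region.
MODEL_ID = "amazon.nova-lite-v1:0"
MAX_WORDS = 500  # summary length limit mentioned in the article


def build_request(claim_text, model_id=MODEL_ID):
    """Build keyword arguments for the Bedrock Runtime Converse API."""
    return {
        "modelId": model_id,
        # Hypothetical prompt; the article does not give the real one.
        "system": [{"text": f"Summarize this insurance claim in fewer than {MAX_WORDS} words."}],
        "messages": [{"role": "user", "content": [{"text": claim_text}]}],
        # Illustrative settings, not taken from the article.
        "inferenceConfig": {"maxTokens": 1024, "temperature": 0.0},
    }


def summarize_claim(s3, bedrock, bucket, key):
    """Read preprocessed claim text from S3 and ask the model for a summary."""
    claim_text = s3.get_object(Bucket=bucket, Key=key)["Body"].read().decode("utf-8")
    response = bedrock.converse(**build_request(claim_text))
    return response["output"]["message"]["content"][0]["text"]


def handler(event, context, s3=None, bedrock=None):
    """Lambda entry point for an SQS trigger; one record per summarization job."""
    if s3 is None or bedrock is None:
        import boto3  # imported lazily so the sketch can run without AWS installed
        s3 = s3 or boto3.client("s3")
        bedrock = bedrock or boto3.client("bedrock-runtime")
    summaries = {}
    for record in event["Records"]:
        # Hypothetical message body: {"bucket": "...", "key": "..."}
        job = json.loads(record["body"])
        summaries[job["key"]] = summarize_claim(s3, bedrock, job["bucket"], job["key"])
    return summaries
```

Keeping the request construction in its own function makes it straightforward to swap the model ID when comparing models, which is the kind of evaluation the team describes.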

The evaluation of the Amazon Nova models showed notable improvements in inference speed and cost efficiency, which leaves more headroom for designing more sophisticated models and scaling test-time compute. Additionally, the latency gap between the Amazon Nova models and competing models widens with long-context documents, making the Nova models a more attractive option for handling extensive documentation.

In conclusion, this initiative highlights Amazon’s ability to innovate in claims management. It offers a viable approach for organizations that must process large volumes of complex documentation, improving service quality while reducing operational costs.

via: MiMub in Spanish