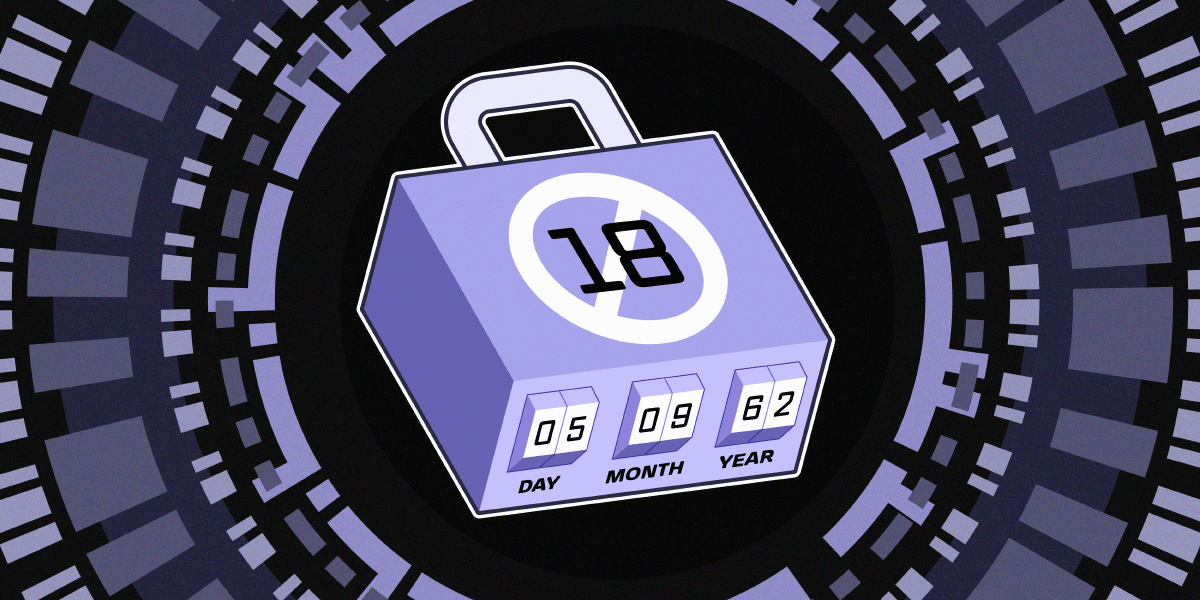

Online age verification has gained prominence in the European political debate, especially concerning the protection of minors. This discussion arises in a context where effective solutions are sought to address the risks young people face in the digital environment.

The risks fall into three categories: content risks, conduct risks, and contact risks. Content risks refer to exposure to inappropriate material, such as violent or sexual content. Conduct risks cover harmful behaviors that may arise among adolescents, such as cyberbullying and addiction to digital platforms. Contact risks involve dangerous interactions, such as grooming, with individuals who may threaten minors’ safety.

However, experts have questioned the effectiveness of mandatory age verification. They argue that these measures restrict access based on age alone and ignore the social dynamics and context in which young people interact online. A more comprehensive approach would consider how the design of digital platforms shapes youth behavior, which is often a more decisive factor than age itself.

Furthermore, requiring users to upload identity documents to access content raises significant privacy concerns. It creates an obstacle for adults, who also need access to information, and risks seriously infringing the fundamental rights of all users, which in turn makes genuine protection of minors harder to achieve.

In this context, the regulatory framework of the European Union is adapting. The recent Digital Services Act (DSA) requires platforms to implement risk mitigation measures without necessarily resorting to age verification. It also promotes user choice, with clear content moderation options and the ability to set personalized limits.

Protecting young people’s privacy is key, and limiting data collection and prohibiting behavioral advertising are steps in the right direction. Current rules already prohibit behavior-based advertising targeted at minors, and broader initiatives propose a total ban on such advertising, which would further reduce data collection.

Given the magnitude of this challenge, a multifaceted approach is essential. Advocating for privacy, strengthening user autonomy, and developing a content moderation system that respects everyone’s rights are vital steps in addressing the complexities of the digital environment and keeping minors safe online. The issue is not limited to technological tools; it also calls for a strategy that considers minors’ rights and the impact of policy decisions on their development and well-being.

Referrer: MiMub in Spanish