Companies are increasingly splitting their artificial intelligence operations across specialized teams to improve how models are developed and deployed. AI research teams create and refine models through various training techniques, while hosting teams deploy those models across environments such as development, validation, and production.

To support this division of work, Amazon has launched Amazon Bedrock Custom Model Import. It lets hosting teams import and serve custom models built on supported architectures, such as Meta Llama 2 and Mistral, at a low on-demand cost. Teams can bring in model weights stored in the Hugging Face safetensors format from Amazon SageMaker or Amazon S3 and use the imported models alongside the existing foundation models in Amazon Bedrock.

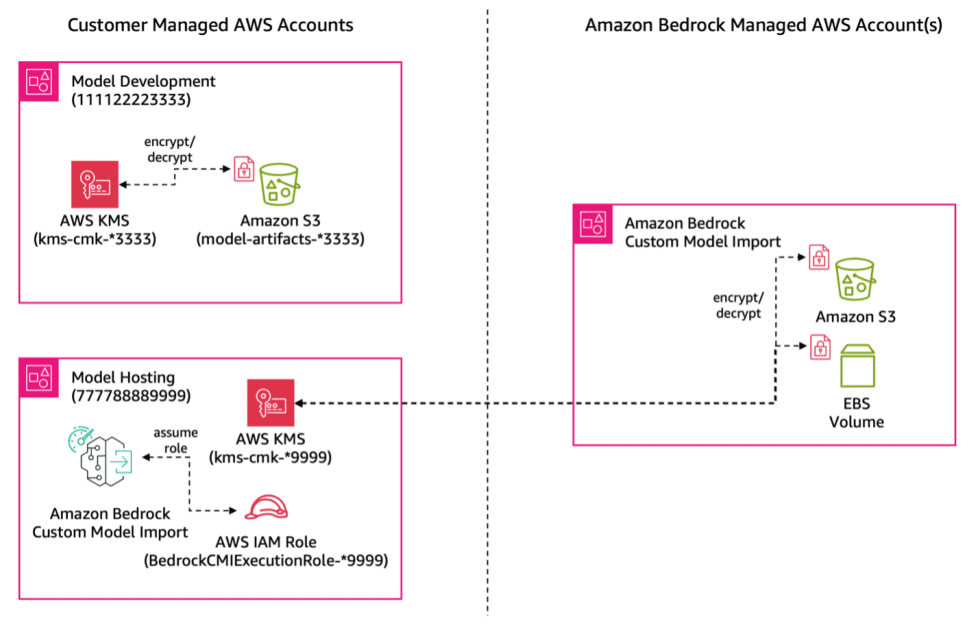

One of the major challenges in this setup is accessing model artifacts that live in a different AWS account. Training outputs, such as model weights, are generally stored in an S3 bucket in the research team's account, so the hosting team needs access to that bucket before it can deploy anything. Amazon Bedrock Custom Model Import supports this cross-account access: an import job in the hosting account can read directly from the S3 bucket in the research account. This streamlines the operational flow while keeping the data under the research team's security controls.
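As a rough illustration of the hosting side, the sketch below builds the parameters for a Bedrock `CreateModelImportJob` request whose S3 URI points at a bucket owned by another account. The helper name `build_import_job_request`, the account IDs, the role name, and the bucket path are all hypothetical placeholders, not values from the original announcement. The code only assembles the request and does not call AWS.

```python
def build_import_job_request(job_name, model_name, role_arn, s3_uri):
    """Assemble keyword arguments for a Bedrock model import job.

    Hypothetical helper: it validates the S3 URI and returns a dict,
    but does not contact AWS.
    """
    if not s3_uri.startswith("s3://"):
        raise ValueError(f"expected an s3:// URI, got {s3_uri!r}")
    return {
        "jobName": job_name,
        "importedModelName": model_name,
        # IAM role in the hosting account that Bedrock assumes for the import.
        "roleArn": role_arn,
        # Weights may live in a bucket owned by the research account.
        "modelDataSource": {"s3DataSource": {"s3Uri": s3_uri}},
    }


# Placeholder values for illustration only.
request = build_import_job_request(
    job_name="import-research-model",
    model_name="research-llama",
    role_arn="arn:aws:iam::444455556666:role/BedrockImportRole",
    s3_uri="s3://research-team-models/llama/checkpoint-1/",
)
print(request["modelDataSource"]["s3DataSource"]["s3Uri"])
```

With boto3 installed and credentials for the hosting account, the dictionary could be passed along as `boto3.client("bedrock").create_model_import_job(**request)`. The call only succeeds if the role named in `roleArn` is actually allowed to read the research account's bucket.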

Salesforce offers a practical example: its AI platform team has reported that this functionality simplified configuration and significantly reduced operational burden, while keeping models secure in their original location.

Setting this up involves granting the appropriate permissions to the IAM roles involved and configuring resource policies on both the S3 bucket and the AWS KMS key in the research account. These configurations let the hosting team reach the models it needs while each team keeps its autonomy and its own security controls.
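The resource policies on the research account's side might look roughly like the following sketch, which generates an S3 bucket policy and a KMS key policy statement that grant read access to a role in the hosting account. The function names, bucket name, account ID, and role name are assumptions made for illustration. The statements use standard IAM policy syntax, but real policies should be scoped to the specific prefixes and conditions an organization requires.

```python
import json


def bucket_policy(bucket, hosting_role_arn):
    """Bucket policy letting a role in another account list and read objects."""
    return {
        "Version": "2012-10-17",
        "Statement": [
            {
                "Sid": "AllowHostingRoleToListBucket",
                "Effect": "Allow",
                "Principal": {"AWS": hosting_role_arn},
                "Action": "s3:ListBucket",
                "Resource": f"arn:aws:s3:::{bucket}",
            },
            {
                "Sid": "AllowHostingRoleToReadObjects",
                "Effect": "Allow",
                "Principal": {"AWS": hosting_role_arn},
                "Action": "s3:GetObject",
                "Resource": f"arn:aws:s3:::{bucket}/*",
            },
        ],
    }


def kms_key_statement(hosting_role_arn):
    """Key policy statement letting the same role decrypt the model files."""
    return {
        "Sid": "AllowHostingRoleToDecrypt",
        "Effect": "Allow",
        "Principal": {"AWS": hosting_role_arn},
        "Action": ["kms:Decrypt", "kms:DescribeKey"],
        # In a key policy, "*" refers to the key the policy is attached to.
        "Resource": "*",
    }


# Placeholder role in the hosting account, for illustration only.
HOSTING_ROLE = "arn:aws:iam::444455556666:role/BedrockImportRole"

print(json.dumps(bucket_policy("research-team-models", HOSTING_ROLE), indent=2))
print(json.dumps(kms_key_statement(HOSTING_ROLE), indent=2))
```

Cross-account access needs permission on both sides. In addition to these resource policies in the research account, the role in the hosting account needs an identity-based policy allowing the same S3 and KMS actions.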

This combination of specialized teams and controlled cross-account access is becoming standard practice among organizations that want to take full advantage of artificial intelligence while maintaining a high level of security and operational efficiency.

Source: MiMub in Spanish