As generative artificial intelligence continues to revolutionize all industries, the importance of effectively optimizing prompts through prompt engineering techniques has become key to efficiently balancing quality of results, response time, and costs. Prompt engineering refers to the practice of crafting and optimizing inputs for models by selecting appropriate words, phrases, sentences, punctuation, and separator characters to use base models (FMs) or large language models (LLMs) in a wide range of applications. A high-quality prompt maximizes the chances of getting a good response from generative AI models.

A fundamental part of the optimization process is evaluation, and there are multiple elements involved in evaluating a generative AI application. Beyond the most common evaluation of FMs, prompt evaluation is a critical aspect, though often challenging, in developing high-quality AI-based solutions. Many organizations struggle to consistently create and evaluate their prompts across their various applications, leading to inconsistent and undesired model performance, user experience, and responses.

In this post, we show how to implement an automated prompt evaluation system using Amazon Bedrock, so that prompt development process can be optimized and overall quality of AI-generated content can be improved. For this, we use Amazon Bedrock Prompt Management and Amazon Bedrock Prompt Flows to systematically evaluate prompts for your generative AI applications at scale.

Before explaining the technical implementation, it is crucial to briefly discuss why prompt evaluation is vital. Key aspects to consider when building and optimizing a prompt typically include:

- Quality assurance: Evaluating prompts helps ensure that your AI applications consistently generate high-quality and relevant outputs for the selected model.

- Performance optimization: By identifying and refining effective prompts, overall performance of generative AI models can be improved in terms of lower latency and, ultimately, higher performance.

- Cost efficiency: Better prompts can lead to more efficient use of AI resources, potentially reducing costs associated with model inference. A good prompt enables the use of smaller, lower-cost models that would not perform well with low-quality prompts.

- User experience: Improved prompts result in more accurate, personalized, and useful AI-generated content, enhancing the end user experience in your applications.

Optimizing prompts for these aspects is an iterative process that requires evaluation to drive adjustments in the prompts. In other words, it is a way to understand how suitable a prompt-model combination is for achieving the desired responses.

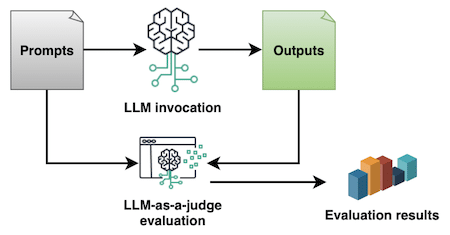

In our example, we implement a method known as LLM-as-a-judge, where an LLM is used to evaluate prompts based on the responses it produced with a particular model, according to predefined criteria. Prompt evaluation and its responses for a given LLM are subjective by nature, but systematic prompt evaluation using LLM-as-a-judge allows it to be quantified with an evaluation metric in a numerical score. This helps standardize and automate the prompt lifecycle in your organization and is one of the reasons why this method is one of the most common approaches for prompt evaluation in the industry.

We will explore an example solution for evaluating prompts with LLM-as-a-judge using Amazon Bedrock. You can also find the complete code example in amazon-bedrock-samples.

For this example, you will need:

- Access to the Amazon Bedrock service.

- Basic knowledge of Amazon Bedrock Prompt Management and Amazon Bedrock Prompt Flows.

- An AWS account with appropriate permissions to create and manage resources in Amazon Bedrock.

To create a prompt evaluation using Amazon Bedrock Prompt Management, follow these steps:

- In the Amazon Bedrock console, in the navigation panel, choose “Prompt management” and then choose “Create prompt”.

- Enter a “Name” for your prompt like “prompt-evaluator” and a “Description” like “Prompt template to evaluate prompt responses with LLM-as-a-judge”. Choose “Create”.

To create an evaluation template in the “Prompt” field, you can use a template like the following or adjust it according to your specific evaluation requirements:

You're an evaluator for the prompts and answers provided by a generative AI model.

Consider the input prompt in the <input/> tags, ...

Referrer

Configure a model to run evaluations with the prompt. In our example, we selected Anthropic Claude Sonnet. Evaluation quality will depend on the model you select at this step. Make sure to balance quality, response time, and cost appropriately in your decision.

Configure the inference parameters for the model. It is recommended to keep the Temperature at 0 for an objective evaluation and to avoid hallucinations.

You can test your evaluation prompt with sample inputs and outputs using the “Test variables” and “Test window” panels.

Set up the evaluation flow using Amazon Bedrock Prompt Flows to automate large-scale evaluation.

These practices not only improve the quality and consistency of AI-generated content but also optimize the development process, potentially reducing costs and enhancing user experiences.

Readers are encouraged to explore these features and adapt the evaluation process to their specific use cases to unlock the full potential of generative AI in their applications.

Antonio Rodriguez is a Senior Generative AI Solutions Architect at Amazon Web Services, helping businesses of all sizes address their challenges, embrace innovation, and create new business opportunities with Amazon Bedrock.

via: AWS machine learning blog

via: MiMub in Spanish