Retrieval-Augmented Generation (RAG) systems have become a crucial component in building advanced generative AI applications, especially those that connect large language models (LLMs) with enterprise knowledge. However, building a reliable RAG pipeline is challenging: teams often need to experiment with dozens of configurations spanning different chunking strategies, embedding models, retrieval techniques, and prompt designs, a process that is labor-intensive and complex.
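To make the scale of this search concrete, the following Python sketch enumerates a hypothetical grid of pipeline settings. The dimension names and values (`fixed_512`, `embed-a`, `hybrid_top5`, and so on) are placeholders chosen for illustration, not settings from the article; the point is that even a few options per dimension multiply into dozens of candidate configurations.

```python
from itertools import product

# Hypothetical experiment dimensions; the values are illustrative placeholders,
# not recommendations from the article.
SEARCH_SPACE = {
    "chunking": ["fixed_512", "fixed_1024", "sentence", "semantic"],
    "embedding_model": ["embed-a", "embed-b", "embed-c"],
    "retrieval": ["dense_top5", "hybrid_top5"],
    "prompt_template": ["concise", "cite_sources"],
}


def enumerate_configs(space):
    """Yield one dict per combination of the values in `space`."""
    keys = list(space)
    for values in product(*(space[k] for k in keys)):
        yield dict(zip(keys, values))


configs = list(enumerate_configs(SEARCH_SPACE))
print(len(configs))  # 4 * 3 * 2 * 2 = 48
print(configs[0])
```

Each added option in any dimension multiplies the total, which is why manual, one-off experimentation quickly becomes unmanageable.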

Operating an effective RAG pipeline is not only complex but often requires considerable manual intervention, which can lead to inconsistent results and make it harder to troubleshoot problems and reproduce successful configurations. Teams routinely struggle with scattered documentation of parameter choices, limited visibility into how individual components perform, and the difficulty of comparing approaches systematically. The lack of automation also creates bottlenecks that limit how far RAG solutions can scale, increases operational burden, and makes it harder to maintain consistent quality standards across development and production environments.

To address these challenges, Amazon SageMaker AI offers a comprehensive way to streamline the RAG development lifecycle, from experimentation through automation. It lets teams run experiments more efficiently, collaborate effectively, and establish a continuous improvement process. By combining experimentation with automation, teams can version, test, and promote the entire pipeline infrastructure as a cohesive unit, which supports traceability, reproducibility, and risk mitigation throughout the development and deployment of RAG systems.

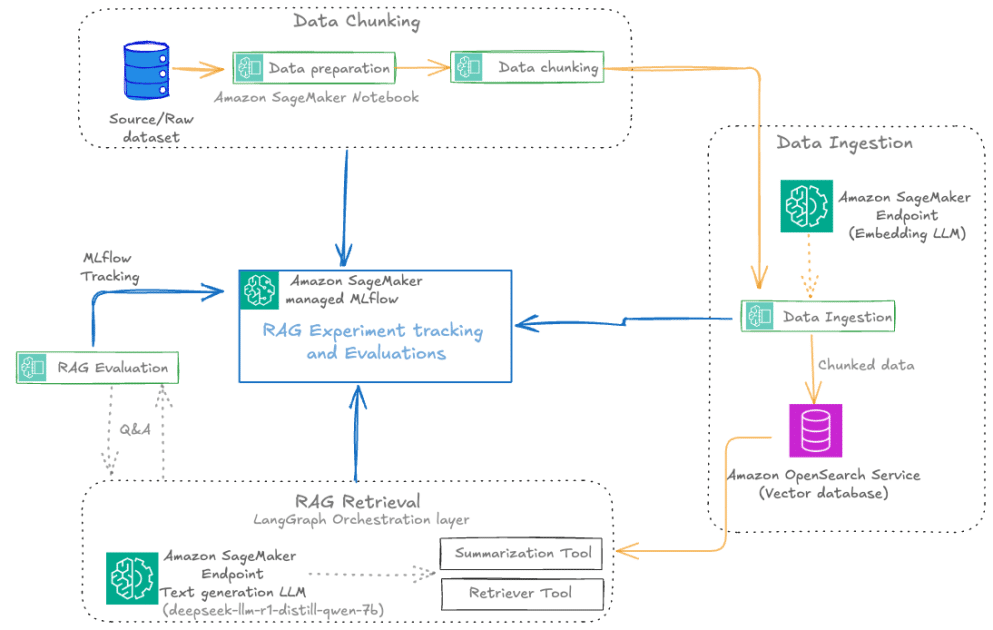

Agility in both experimentation and operations is central to how teams use SageMaker AI to prototype, deploy, and monitor RAG applications at scale. SageMaker's integration with MLflow provides a unified framework for tracking experiments, logging configurations, and comparing results, which supports reproducibility and sound governance of the pipeline. Automation then reduces manual intervention, minimizes errors, and simplifies promoting RAG pipelines from experimentation to production.
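A minimal sketch of the bookkeeping this implies, assuming configurations are plain dictionaries: hash each configuration deterministically so identical settings can be recognized across runs, then compare runs on a chosen metric. The metric names (`answer_relevance`, `faithfulness`) and their scores are made-up placeholders. With MLflow, parameters and metrics would typically be recorded with `mlflow.log_params` and `mlflow.log_metrics` inside an `mlflow.start_run()` block; this sketch keeps them in memory so it runs with the standard library alone.

```python
import hashlib
import json


def config_fingerprint(config):
    """Return a stable short hash of a RAG configuration dict.

    Keys are sorted so the same settings always produce the same hash,
    regardless of insertion order.
    """
    canonical = json.dumps(config, sort_keys=True, separators=(",", ":"))
    return hashlib.sha256(canonical.encode("utf-8")).hexdigest()[:12]


# Hypothetical run records as (params, metrics) pairs with placeholder scores.
# In MLflow these would be logged per run rather than kept in a list.
runs = [
    ({"chunk_size": 512, "top_k": 5, "embedding_model": "embed-a"},
     {"answer_relevance": 0.81, "faithfulness": 0.77}),
    ({"chunk_size": 1024, "top_k": 3, "embedding_model": "embed-b"},
     {"answer_relevance": 0.84, "faithfulness": 0.72}),
]


def best_run(runs, metric):
    """Pick the run with the highest value of `metric`."""
    return max(runs, key=lambda run: run[1][metric])


for params, metrics in runs:
    print(config_fingerprint(params), metrics)

params, metrics = best_run(runs, "faithfulness")
print("best by faithfulness:", config_fingerprint(params))
```

Note that the "best" run depends on which metric is chosen, which is one reason to log every configuration alongside all of its scores rather than only the winner.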

Amazon SageMaker Pipelines orchestrates end-to-end RAG workflows, from data preparation through final evaluation. Adopting continuous integration and continuous delivery (CI/CD) practices further improves pipeline reproducibility and governance, allowing validated pipelines to be promoted automatically from development to production. In this setting, it is essential to promote the entire RAG pipeline rather than an individual subsystem: chunking, embedding, retrieval, and prompting choices interact, so promoting them together helps keep quality consistent, especially when working with large volumes of real-time data.
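The idea of promoting the whole pipeline behind a quality gate can be sketched as follows. The `PipelineCandidate` structure, the metric names, and the thresholds are hypothetical; in practice a CI/CD job might run a check like this after the evaluation step and only then promote the versioned pipeline. Treating a missing metric as a failure is a deliberate choice, so a partially evaluated pipeline cannot slip through.

```python
from dataclasses import dataclass, field


@dataclass
class PipelineCandidate:
    """A versioned RAG pipeline evaluated as one unit (hypothetical structure)."""
    version: str
    components: dict  # e.g. chunker, embedding model, retriever, prompt
    metrics: dict = field(default_factory=dict)


# Hypothetical minimum scores the whole pipeline must reach before promotion.
THRESHOLDS = {"faithfulness": 0.75, "answer_relevance": 0.80}


def should_promote(candidate, thresholds):
    """Return (ok, failures); ok is True only if every threshold is met.

    A missing metric counts as a failure, so an incompletely evaluated
    pipeline is never promoted.
    """
    failures = [
        name for name, minimum in thresholds.items()
        if candidate.metrics.get(name, float("-inf")) < minimum
    ]
    return (not failures, failures)


candidate = PipelineCandidate(
    version="rag-pipeline-v7",
    components={"chunker": "fixed_512", "embedding_model": "embed-a",
                "retriever": "hybrid_top5", "prompt": "cite_sources"},
    metrics={"faithfulness": 0.78, "answer_relevance": 0.79},
)
ok, failures = should_promote(candidate, THRESHOLDS)
print(ok, failures)  # False ['answer_relevance']
```

Because the gate evaluates the candidate as a single versioned unit, a change to any one component produces a new candidate that must pass the same checks before it reaches production.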

The solution also uses Amazon OpenSearch Service as the vector database and Amazon Bedrock to provide access to LLMs. Together, these services let organizations build, evaluate, and deploy RAG pipelines at scale through automated, reproducible workflows that support continuous optimization and reliable operation in real-world settings.
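To illustrate the retrieval step that a vector store such as OpenSearch Service performs, here is a small, self-contained sketch of top-k search by cosine similarity followed by prompt assembly. It does not use the OpenSearch or Bedrock APIs: the three-dimensional vectors are toy stand-ins for real embeddings, and the assembled prompt marks the point where a call to an LLM through Amazon Bedrock would take over.

```python
import math


def cosine(a, b):
    """Cosine similarity between two equal-length vectors (0.0 if either is zero)."""
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norm if norm else 0.0


def top_k(query_vec, index, k=2):
    """Return the k (text, score) pairs most similar to query_vec."""
    scored = [(text, cosine(query_vec, vec)) for text, vec in index]
    scored.sort(key=lambda pair: pair[1], reverse=True)
    return scored[:k]


def build_prompt(question, passages):
    """Assemble a grounded prompt from the retrieved passages."""
    context = "\n".join(f"- {text}" for text, _ in passages)
    return f"Answer using only this context:\n{context}\n\nQuestion: {question}"


# Toy 3-dimensional "embeddings"; a real system would use an embedding model.
index = [
    ("Refunds are processed within 5 business days.", [0.9, 0.1, 0.0]),
    ("Our office is closed on public holidays.", [0.0, 0.2, 0.9]),
    ("Refund requests require an order number.", [0.8, 0.3, 0.1]),
]
hits = top_k([1.0, 0.2, 0.0], index, k=2)
prompt = build_prompt("How do refunds work?", hits)
print(prompt)
```

The brute-force scan in `top_k` is only for illustration; a vector database replaces it with an approximate nearest-neighbor index so that search stays fast over millions of vectors.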

With this structured, automated approach, companies can identify issues quickly, make the necessary adjustments, and improve their models efficiently. SageMaker's ease of use, combined with its integration with other tools and with CI/CD practices, helps teams make meaningful progress on their artificial intelligence projects.

via: MiMub in Spanish