Generative artificial intelligence is changing how businesses innovate and solve problems. As organizations move from experimentation to large-scale deployment, they are integrating these technologies more deeply into their core processes. This shift spans many business areas and software-as-a-service (SaaS) providers. As AWS customers use these tools more heavily, however, new challenges arise around managing and scaling the implementations.

The main obstacles are the cross-cutting concerns that every deployment must address and should be able to reuse: multi-tenancy, authentication, authorization, and secure networking. For SaaS providers that serve multiple clients, and for organizations with strict compliance requirements, a multi-account architecture is advantageous. It improves organization and security within the AWS environment and makes shared needs easier to manage as generative AI deployments expand.

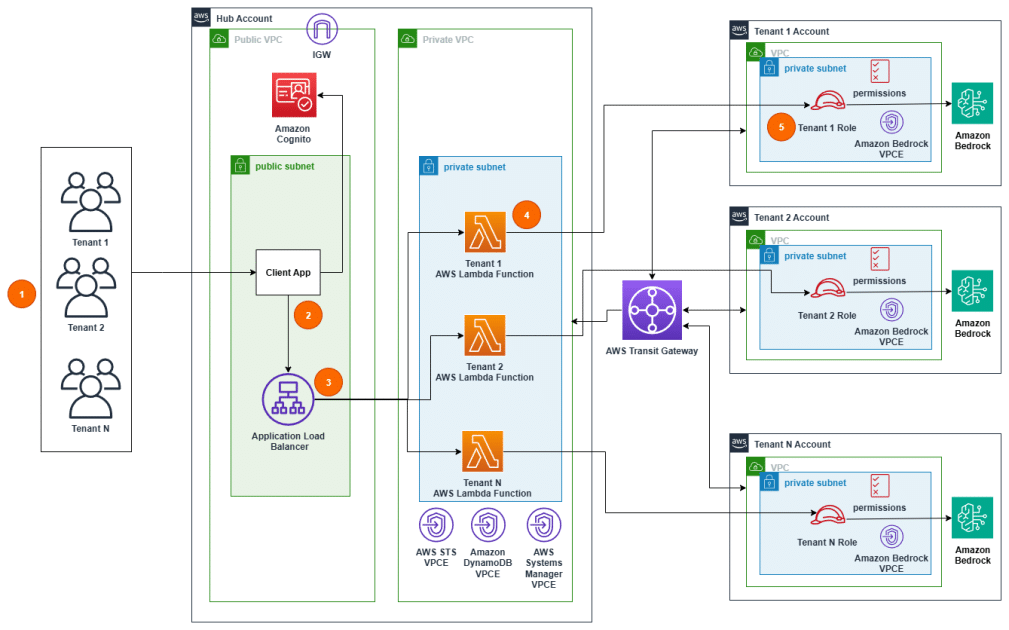

To address these needs, a two-part series proposes an architectural pattern built on a hub-and-spoke model. The first part describes a centralized hub that manages the generative AI service abstractions, with a dedicated spoke for each tenant. AWS Transit Gateway provides connectivity between the accounts, which lets the hub centralize essential functions such as authentication and routing decisions.

The second part of the series will explore a variant of this architecture using AWS PrivateLink to securely share centralized access. This approach focuses on centralizing authentication, authorization, and access to models, as well as establishing secure networks between multiple accounts, facilitating the onboarding and scaling of generative AI use cases.

The hub-and-spoke architecture provides a secure, scalable system for managing generative AI implementations. The hub account acts as the central point that offers common services, while the spoke accounts hold tenant-specific resources, which keeps tenants appropriately separated.

One notable design choice is the use of Lambda functions in the hub account to validate tokens and manage routing logic. This centralizes business logic and also improves monitoring and activity logging. The architecture is flexible enough for organizations to adapt it to their needs and extend it later.
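To make the idea concrete, here is a minimal sketch of what a hub-side Lambda handler that validates a token and picks a tenant route could look like. It is an illustration under stated assumptions, not the implementation from the series: the HMAC-signed token format, the `SIGNING_KEY`, the `TENANT_ROUTES` table, the `tenant_id` claim, and the spoke URLs are all hypothetical, and a real deployment would more likely verify tokens issued by an identity provider.

```python
import base64
import hashlib
import hmac
import json

# Hypothetical values for illustration only; not taken from the article.
SIGNING_KEY = b"demo-signing-key"
TENANT_ROUTES = {
    "tenant-a": "https://tenant-a.spoke.example.internal/invoke",
    "tenant-b": "https://tenant-b.spoke.example.internal/invoke",
}


def sign(payload: bytes) -> str:
    """Return a hex HMAC-SHA256 signature over the encoded payload."""
    return hmac.new(SIGNING_KEY, payload, hashlib.sha256).hexdigest()


def make_token(claims: dict) -> str:
    """Build a demo token of the form '<base64 claims>.<signature>'."""
    payload = base64.urlsafe_b64encode(json.dumps(claims).encode())
    return f"{payload.decode()}.{sign(payload)}"


def validate_token(token: str):
    """Return the claims dict if the signature checks out, else None."""
    try:
        payload_b64, signature = token.split(".", 1)
    except ValueError:
        return None  # no '.' separator, so not a well-formed token
    payload = payload_b64.encode()
    # Constant-time comparison of the expected and presented signatures.
    if not hmac.compare_digest(sign(payload).encode(), signature.encode()):
        return None
    try:
        claims = json.loads(base64.urlsafe_b64decode(payload))
    except ValueError:
        return None
    return claims if isinstance(claims, dict) else None


def handler(event, context):
    """Lambda-style entry point: authenticate, then decide the route."""
    headers = event.get("headers") or {}
    auth = headers.get("authorization", "")
    token = auth[len("Bearer "):] if auth.startswith("Bearer ") else ""

    claims = validate_token(token)
    if claims is None:
        return {"statusCode": 401, "body": json.dumps({"error": "invalid token"})}

    tenant_id = claims.get("tenant_id")
    route = TENANT_ROUTES.get(tenant_id)
    if route is None:
        return {"statusCode": 403, "body": json.dumps({"error": "unknown tenant"})}

    # One structured log line per decision keeps activity logging in the hub.
    print(json.dumps({"tenant_id": tenant_id, "route": route}))
    return {"statusCode": 200, "body": json.dumps({"forward_to": route})}
```

Because both checks live in one function in the hub, every tenant request passes through the same validation and produces the same log record, which is the centralization benefit described above. The sketch only returns the chosen route; actually forwarding the request to the spoke is left out.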

With the rise of multi-tenant architectures and the new capabilities offered by AWS, organizations have the opportunity to create robust solutions, maximizing the potential of generative artificial intelligence while addressing the scalability and security challenges that come with its implementation.

Referrer: MiMub in Spanish