Large language models (LLMs) have transformed how people interact with computers, letting users communicate with applications in natural language. Deploying these models in real applications is still hard, however, especially when it involves managing complex workflows and coordinating several AI capabilities. Consider a system for scheduling medical appointments, in which an AI agent checks a calendar, verifies insurance, and confirms the appointment in a single interaction.

LLM-based agents act as decision-making systems that control the flow of an application. As they grow, however, operational problems appear, such as poor tool selection and difficulty managing context. Multi-agent architectures address this by splitting the system into smaller, specialized agents that operate independently. This modular approach makes the system easier to manage and to scale, and it lets each agent specialize.

In this context, Amazon Web Services (AWS) has introduced multi-agent collaboration capabilities in Amazon Bedrock. With this functionality, developers can create and manage multiple AI agents that work together on complex tasks. Applications that adopt this approach may see higher success rates, accuracy, and productivity, particularly on tasks that require multiple steps.

Planning works quite differently in single-agent and multi-agent systems. A single agent breaks a task into a sequence of smaller steps and carries them out itself. In a multi-agent system, the workflow must also distribute those steps among agents, which requires an effective coordination mechanism: each agent has to stay aligned with the others toward the common goal, and the system has to manage the dependencies between tasks and the resources the agents share.
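Distributing steps among agents while respecting their dependencies can be sketched as a topological ordering of a task graph. The sketch below reuses the appointment-scheduling scenario from the introduction; the task names and agent names (`calendar_agent`, `insurance_agent`, `booking_agent`) are hypothetical, and Python's standard `graphlib.TopologicalSorter` stands in for whatever coordination mechanism a real framework uses.

```python
from graphlib import TopologicalSorter

# Hypothetical plan: each task is assigned to one specialized agent and
# lists the tasks that must finish before it can start.
TASKS = {
    "check_calendar": {"agent": "calendar_agent", "deps": []},
    "verify_insurance": {"agent": "insurance_agent", "deps": []},
    "book_appointment": {"agent": "booking_agent",
                         "deps": ["check_calendar", "verify_insurance"]},
}


def schedule(tasks):
    """Group (task, agent) pairs into batches; every task in a batch has its
    dependencies satisfied, so the batch could run on agents in parallel."""
    sorter = TopologicalSorter({name: spec["deps"] for name, spec in tasks.items()})
    sorter.prepare()  # raises graphlib.CycleError if dependencies are circular
    batches = []
    while sorter.is_active():
        ready = sorted(sorter.get_ready())  # sorted for deterministic output
        batches.append([(task, tasks[task]["agent"]) for task in ready])
        sorter.done(*ready)  # mark the batch finished, unlocking dependents
    return batches


for step, batch in enumerate(schedule(TASKS), start=1):
    print(step, batch)
```

Here the calendar and insurance checks land in the same batch because neither depends on the other, while the booking waits for both.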

Memory management also differs. In a single-agent architecture, memory is typically organized into three levels: short-term memory (the current conversation), long-term memory (history kept across sessions), and external sources such as databases or retrieval systems. Multi-agent systems need more sophisticated mechanisms to manage context across agents and to keep their interaction histories synchronized.
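The difference can be illustrated with a minimal sketch, assuming a plain in-memory design: `AgentMemory` models the three single-agent levels, and `SharedHistory` is an append-only log that several agents read with their own cursors so each can catch up on what the others did. Both class names are hypothetical, and the dicts stand in for real persistent stores and retrieval indexes.

```python
from collections import deque
from dataclasses import dataclass, field
from typing import Any, Dict, List, Optional, Tuple


@dataclass
class AgentMemory:
    """Hypothetical three-level memory for a single agent."""
    # Short-term: only the most recent turns are kept (oldest dropped first).
    short_term: deque = field(default_factory=lambda: deque(maxlen=5))
    # Long-term: facts persisted across sessions (a plain dict here).
    long_term: Dict[str, Any] = field(default_factory=dict)
    # External: stand-in for a database or retrieval index.
    external: Dict[str, Any] = field(default_factory=dict)

    def remember_turn(self, text: str) -> None:
        self.short_term.append(text)

    def recall(self, key: str) -> Optional[Any]:
        # Check long-term memory first, then fall back to the external source.
        if key in self.long_term:
            return self.long_term[key]
        return self.external.get(key)


class SharedHistory:
    """Append-only log that several agents read to stay synchronized."""

    def __init__(self) -> None:
        self._events: List[Tuple[str, str]] = []

    def append(self, agent: str, message: str) -> int:
        self._events.append((agent, message))
        return len(self._events)  # cursor just past the new event

    def since(self, cursor: int) -> Tuple[List[Tuple[str, str]], int]:
        """Events recorded after `cursor`, plus the updated cursor."""
        return self._events[cursor:], len(self._events)


history = SharedHistory()
history.append("flight_agent", "Found a morning flight")
history.append("hotel_agent", "Reserved two nights")
new_events, cursor = history.since(1)  # an agent that has already seen event 1
print(new_events, cursor)
```

Keeping a per-agent cursor into one shared log is only one way to synchronize histories; a production system might instead use a shared database or message bus.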

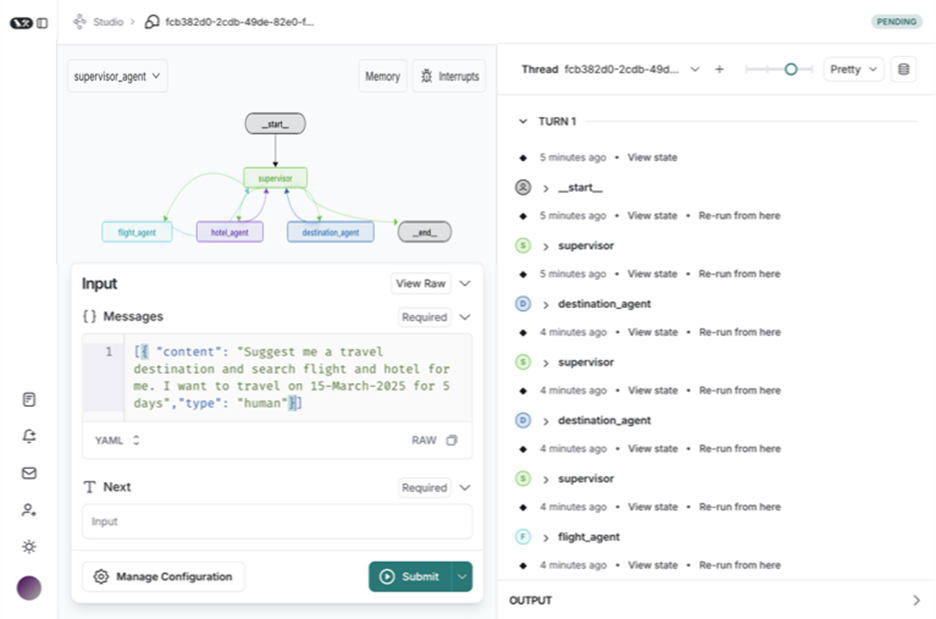

LangGraph, part of the LangChain ecosystem, orchestrates workflows among agents. Its graph-based architecture handles complex, multi-step processes while preserving context across interactions. LangGraph Studio, an integrated development environment (IDE) for agent applications, supports building multi-agent applications with tools for visualizing, monitoring, and debugging them in real time.

The framework combines state machines with directed graphs, giving fine-grained control over both the flow and the state of an agent application. It also provides memory management and supports human intervention at critical steps of a process.
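The idea of a state machine over a directed graph, with a human checkpoint, can be sketched in plain Python. This is a hypothetical illustration of the concept, not LangGraph's API (LangGraph has its own classes, such as `StateGraph`): each node returns updates that are merged into a shared state, edges pick the next node either unconditionally or from the state, and the `human_review` node calls an `approve` callback that stands in for a person approving or rejecting the plan.

```python
from typing import Callable, Dict

State = Dict[str, object]
END = "__end__"  # sentinel name for the terminal state


class Graph:
    """Minimal state machine over a directed graph (hypothetical; not LangGraph's API)."""

    def __init__(self) -> None:
        self.nodes: Dict[str, Callable[[State], State]] = {}
        self.edges: Dict[str, Callable[[State], str]] = {}

    def add_node(self, name: str, fn: Callable[[State], State]) -> None:
        self.nodes[name] = fn  # fn returns a dict of state updates

    def add_edge(self, src: str, dst: str) -> None:
        self.edges[src] = lambda state: dst  # unconditional transition

    def add_router(self, src: str, router: Callable[[State], str]) -> None:
        self.edges[src] = router  # next node chosen from the current state

    def run(self, start: str, state: State, max_steps: int = 20) -> State:
        node = start
        for _ in range(max_steps):
            if node == END:
                return state
            state = {**state, **self.nodes[node](state)}  # merge updates into a new dict
            state["trace"] = list(state.get("trace", [])) + [node]  # record visited nodes
            node = self.edges[node](state)
        raise RuntimeError("step limit reached without hitting END")


def build_booking_graph(approve: Callable[[State], bool]) -> Graph:
    """Plan a booking, ask for a human decision, then book or cancel."""
    g = Graph()
    g.add_node("plan", lambda s: {"booking": f"hotel in {s['city']}"})
    # Human-in-the-loop step: here a callback, not a real pause for input.
    g.add_node("human_review", lambda s: {"approved": approve(s)})
    g.add_node("book", lambda s: {"status": "booked"})
    g.add_node("cancel", lambda s: {"status": "cancelled"})
    g.add_edge("plan", "human_review")
    g.add_router("human_review", lambda s: "book" if s["approved"] else "cancel")
    g.add_edge("book", END)
    g.add_edge("cancel", END)
    return g


result = build_booking_graph(approve=lambda s: True).run("plan", {"city": "Kyoto"})
print(result["status"], result["trace"])
```

Because every transition is an explicit edge, the path the application took (`trace`) can be inspected afterwards, which is the kind of control over flow and state described above.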

A practical example in the original article shows how a supervisor agent can coordinate several specialized agents to build a travel assistant that handles tasks ranging from destination recommendations to flight and hotel searches. The example illustrates how multi-agent frameworks provide a solid foundation for advanced AI systems, with the potential to improve both the user experience and the operational effectiveness of applications.
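The supervisor pattern can be sketched as follows, under simplifying assumptions: the user's request has already been split into sub-tasks, the specialized agents (`destination_agent`, `flight_agent`, `hotel_agent`) are hypothetical functions that return canned strings rather than calling an LLM, and crude keyword matching stands in for the supervisor model's routing decision.

```python
from typing import Callable, Dict, List, Optional, Tuple


# Hypothetical specialized agents; in a real system each would wrap an LLM
# with its own prompt and tools. Here they return canned strings.
def destination_agent(request: str) -> str:
    return f"Suggested destinations for: {request}"


def flight_agent(request: str) -> str:
    return f"Flight options for: {request}"


def hotel_agent(request: str) -> str:
    return f"Hotel options for: {request}"


AGENTS: Dict[str, Callable[[str], str]] = {
    "destination": destination_agent,
    "flight": flight_agent,
    "hotel": hotel_agent,
}

# Keyword -> agent name; checked in insertion order.
ROUTES = {
    "recommend": "destination",
    "where": "destination",
    "flight": "flight",
    "fly": "flight",
    "hotel": "hotel",
    "stay": "hotel",
}


def route(task: str) -> Optional[str]:
    """Stand-in for the supervisor's routing decision (substring match)."""
    text = task.lower()
    for keyword, agent in ROUTES.items():
        if keyword in text:
            return agent
    return None


def supervisor(tasks: List[str]) -> List[Tuple[str, str]]:
    """Dispatch each sub-task to a specialist and collect (agent, result) pairs."""
    results = []
    for task in tasks:
        name = route(task)
        if name is None:
            results.append(("supervisor", f"No specialist for: {task}"))
        else:
            results.append((name, AGENTS[name](task)))
    return results


plan = [
    "Recommend a warm destination in March",
    "Find a flight from Madrid",
    "Book a hotel near the beach",
]
for agent, result in supervisor(plan):
    print(f"{agent}: {result}")
```

Each specialist only ever sees the sub-task routed to it, which is what keeps tool selection and context small per agent; the supervisor alone holds the overall plan.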

Source: MiMub (in Spanish)