As generative artificial intelligence advances rapidly, companies are adapting language models to specific needs in areas such as document summarization and technical content generation. This trend is driving significant innovation, allowing organizations to offer highly personalized experiences with minimal investment in technical knowledge.

However, implementing these models in business environments poses notable challenges. Many off-the-shelf AI models lack the domain knowledge needed to handle industry-specific terminology or requirements. To address these limitations, companies are developing domain-specific large language models (LLMs) optimized for particular tasks in sectors such as finance, marketing, and customer service.

The growing demand for personalized AI solutions presents an additional challenge: efficiently managing multiple models tailored to different use cases. From document analysis to drafting targeted emails, companies must administer numerous fine-tuned models, which can increase costs and operational complexity. Traditional hosting infrastructures struggle to maintain performance while keeping costs under control.

In this landscape, the Low-Rank Adaptation (LoRA) technique has emerged as a solution. It adapts a pre-trained language model to a new task by adding small trainable weight matrices while the original weights stay unchanged, which greatly reduces the resources needed for fine-tuning. However, the conventional method of hosting fine-tuned models is inefficient: it merges the adapter weights into the base model, so each variant must be served as a separate full-size model, which can increase operational expenses.
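To make the idea of "small trainable weight matrices" concrete, here is a minimal pure-Python sketch of a single LoRA-adapted layer. It assumes the standard LoRA formulation, in which a frozen weight matrix `W` is complemented by a low-rank product `B·A`; the dimensions, the `scale` parameter, and all function names are illustrative assumptions, not part of any particular library.

```python
import random


def matvec(M, x):
    """Multiply matrix M (a list of rows) by vector x."""
    return [sum(m * v for m, v in zip(row, x)) for row in M]


def lora_forward(W, A, B, x, scale=1.0):
    """Compute y = W x + scale * B (A x).

    W (d_out x d_in) is the frozen base weight.
    A (r x d_in) and B (d_out x r) are the small trainable matrices.
    """
    base = matvec(W, x)
    low_rank = matvec(B, matvec(A, x))
    return [b + scale * d for b, d in zip(base, low_rank)]


def param_counts(d_out, d_in, r):
    """Return (parameters in the full matrix, parameters in the LoRA pair)."""
    return d_out * d_in, r * (d_in + d_out)


# Hypothetical toy dimensions, chosen only for illustration.
d_out, d_in, r = 8, 8, 2
rng = random.Random(0)
W = [[rng.uniform(-1, 1) for _ in range(d_in)] for _ in range(d_out)]
A = [[rng.uniform(-1, 1) for _ in range(d_in)] for _ in range(r)]
# B starts at zero, so the adapter is a no-op before any training.
B = [[0.0] * r for _ in range(d_out)]
x = [rng.uniform(-1, 1) for _ in range(d_in)]

full, lora = param_counts(4096, 4096, 8)
print(f"full matrix: {full} parameters, LoRA pair: {lora} parameters")
```

For a hypothetical 4096 × 4096 layer with rank 8, the adapter pair holds 65,536 parameters against 16,777,216 in the full matrix, which is why the adapter alone is cheap to train and store.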

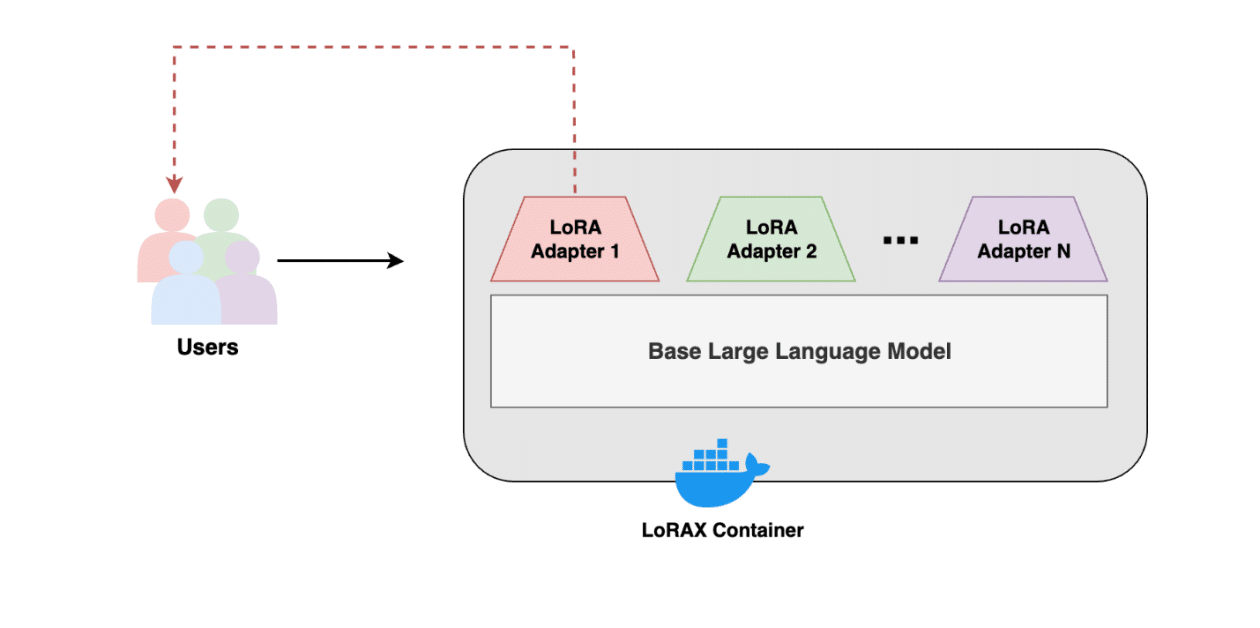

The open-source LoRAX software has emerged as an efficient alternative: it shares the base model's weights across adapters at inference time, which makes managing many fine-tuned models cost-effective. With LoRAX, companies can fine-tune a base model for multiple tasks and serve the resulting variants from a single cloud instance, considerably reducing costs and improving performance.
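The weight-sharing idea can be illustrated with a toy sketch: one copy of the base weights stays in memory, and each request selects which small adapter to apply on top. This is not LoRAX's actual API or implementation; the class `SharedBaseServer`, its methods, and the adapter names are hypothetical and serve only to show the principle.

```python
def matvec(M, x):
    """Multiply matrix M (a list of rows) by vector x."""
    return [sum(m * v for m, v in zip(row, x)) for row in M]


class SharedBaseServer:
    """Toy model server: one shared base weight, many small adapters."""

    def __init__(self, base_weight):
        self.base_weight = base_weight  # stored once, shared by all requests
        self.adapters = {}              # adapter name -> (A, B)

    def register_adapter(self, name, A, B):
        """Store the low-rank pair (A, B) for one fine-tuned variant."""
        self.adapters[name] = (A, B)

    def infer(self, x, adapter=None):
        """Run the base layer, then add the chosen adapter's update, if any."""
        y = matvec(self.base_weight, x)
        if adapter is None:
            return y
        A, B = self.adapters[adapter]
        delta = matvec(B, matvec(A, x))
        return [yi + di for yi, di in zip(y, delta)]


# A 2x2 identity matrix stands in for the base model (illustrative only).
server = SharedBaseServer([[1.0, 0.0], [0.0, 1.0]])
server.register_adapter("summarize", A=[[1.0, 0.0]], B=[[1.0], [0.0]])
server.register_adapter("email", A=[[0.0, 1.0]], B=[[0.0], [2.0]])

x = [1.0, 3.0]
print(server.infer(x))                       # base model only
print(server.infer(x, adapter="summarize"))  # base plus "summarize" adapter
print(server.infer(x, adapter="email"))      # base plus "email" adapter
```

In this sketch, adding another use case means registering another small `(A, B)` pair rather than loading another full copy of the base model.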

LoRAX has gained momentum on platforms like AWS, where various solutions are being developed to deploy LoRA adapters. With an active community and customizable integrations, LoRAX is positioned as an effective, well-supported tool for deploying generative AI models.

Ultimately, by adopting LoRAX, organizations can manage fine-tuned models at scale more efficiently and evaluate the associated costs more precisely, allowing them to make the most of foundation models.

Referrer: MiMub in Spanish