Companies are making progress in adopting large language models (LLMs) to streamline the analysis of internal data, helped by Amazon Bedrock, a fully managed service that offers a choice of high-performing foundation models. Through a single API, Bedrock provides access to models from leading AI companies such as AI21 Labs, Anthropic, and Stability AI, with an emphasis on security, privacy, and responsible generative AI.

One challenge organizations face when deploying conversational AI is the slow processing of complex queries that rely on reasoning and acting (ReAct) logic, in which the model alternates between reasoning steps and actions such as tool calls. These delays degrade the user experience, and they are harder to fix in regulated sectors with strict security requirements. One example is a global financial services firm managing more than $1.5 trillion in assets: although it had already deployed a sophisticated conversational system, it wanted faster responses to these critical queries without compromising its security protocols.

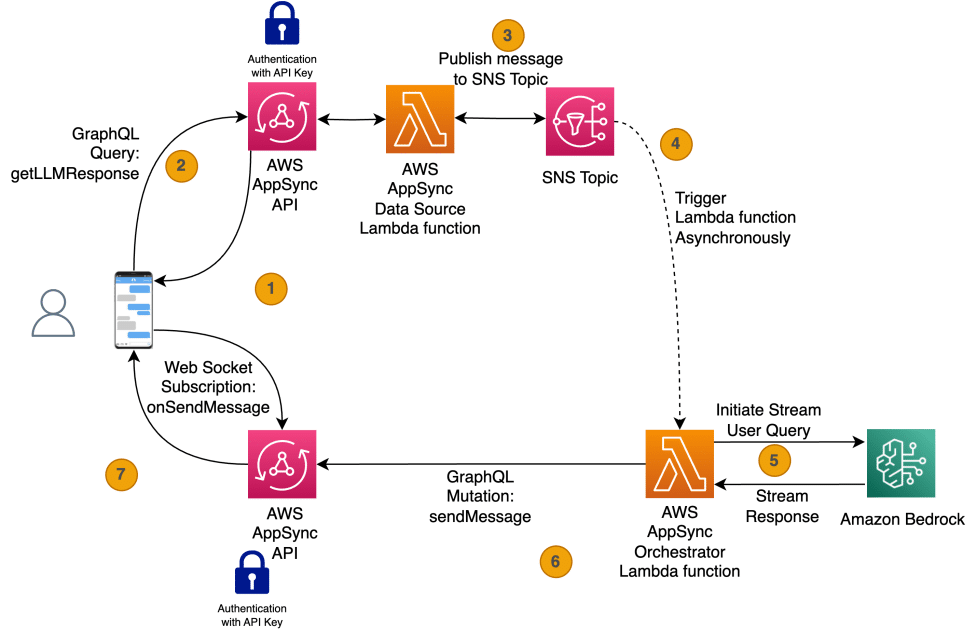

One solution to this problem is AWS AppSync, a service for building serverless, real-time GraphQL APIs. Combining AppSync with the streaming endpoints of Amazon Bedrock makes it possible to deliver LLM responses incrementally, so users start seeing output while complex queries are still being processed.
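To make the mutation and subscription pairing concrete, here is a minimal sketch assuming a hypothetical schema: the type, field, and operation names (`Chunk`, `sendChunk`, `onChunk`, `seq`) are illustrative and not taken from the original solution. The `@aws_subscribe` directive is AppSync's way of linking a subscription to one or more mutations, so each `sendChunk` call made by the backend is pushed to connected clients. The Python helper only builds the JSON body a backend would POST to the AppSync GraphQL endpoint; authentication (API key or IAM signing) is deliberately omitted.

```python
import json

# Hypothetical AppSync schema; all type, field, and operation names are illustrative.
SCHEMA = """
type Chunk {
  conversationId: ID!
  seq: Int!
  text: String!
}

type Query {
  health: String  # placeholder: a GraphQL schema needs a Query root type
}

type Mutation {
  sendChunk(conversationId: ID!, seq: Int!, text: String!): Chunk
}

type Subscription {
  # A client subscribed with a given conversationId receives every
  # sendChunk result whose conversationId matches.
  onChunk(conversationId: ID!): Chunk
    @aws_subscribe(mutations: ["sendChunk"])
}
"""

# Mutation the backend sends for each token chunk. Subscribers can only receive
# fields that this selection set returns, so it lists everything clients need.
SEND_CHUNK = """
mutation SendChunk($conversationId: ID!, $seq: Int!, $text: String!) {
  sendChunk(conversationId: $conversationId, seq: $seq, text: $text) {
    conversationId
    seq
    text
  }
}
"""


def build_chunk_request(conversation_id: str, seq: int, text: str) -> str:
    """Return the JSON body to POST to the AppSync GraphQL endpoint (auth omitted)."""
    return json.dumps({
        "query": SEND_CHUNK,
        # seq (hypothetical) lets the client keep chunks in order.
        "variables": {"conversationId": conversation_id, "seq": seq, "text": text},
    })
```

For example, `build_chunk_request("c1", 0, "Hello")` produces a request whose variables target conversation `c1`, and only clients subscribed to `onChunk(conversationId: "c1")` would see that chunk.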

The approach starts the conversational workflow asynchronously. An AWS Lambda function calls the Amazon Bedrock streaming API and forwards the generated tokens to the front end through AWS AppSync mutations and subscriptions. When a user submits a query, they receive an acknowledgment almost immediately, while the full query is processed in the background.
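The asynchronous pattern can be illustrated in a single process with `asyncio`. This is a simplified sketch, not the production design: a hypothetical `fake_model_stream` stands in for the Bedrock streaming call, an `asyncio.Queue` stands in for the AppSync subscription channel, and a background task stands in for the separate Lambda invocation. What it shows is the ordering: the handler returns its acknowledgment before any token has been produced.

```python
import asyncio

TOKENS = ["Streaming ", "tokens ", "arrive ", "incrementally."]


async def fake_model_stream(prompt: str):
    """Hypothetical stand-in for the Bedrock streaming call: yields tokens with a delay."""
    for token in TOKENS:
        await asyncio.sleep(0.01)  # simulate generation latency
        yield token


async def process_in_background(prompt: str, outbox: asyncio.Queue) -> None:
    """Forward each token to the client channel, then send None as an end marker."""
    async for token in fake_model_stream(prompt):
        await outbox.put(token)
    await outbox.put(None)


async def handle_query(prompt: str, outbox: asyncio.Queue, tasks: set) -> dict:
    """Start processing in the background and acknowledge immediately."""
    task = asyncio.create_task(process_in_background(prompt, outbox))
    tasks.add(task)  # hold a reference so the task is not garbage-collected early
    task.add_done_callback(tasks.discard)
    return {"status": "accepted"}


async def main() -> tuple:
    outbox: asyncio.Queue = asyncio.Queue()
    tasks: set = set()
    ack = await handle_query("example question", outbox, tasks)
    ack_arrived_first = outbox.empty()  # no token has been produced yet
    received = []
    while (token := await outbox.get()) is not None:
        received.append(token)
    return ack, ack_arrived_first, received
```

Running `asyncio.run(main())` returns the acknowledgment, a flag confirming it arrived before any token, and the tokens in the order they were streamed. In the architecture described here, the background work runs in a different Lambda function rather than in the same process.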

The end-to-end process works as follows. When the application starts, it opens a WebSocket connection (the transport AppSync uses for real-time subscriptions) so updates can flow to the client. When the user submits a query, a GraphQL mutation invokes a function that publishes an event to Amazon Simple Notification Service (Amazon SNS). When that notification is received, a function calls the Amazon Bedrock streaming API and forwards each token to the user as soon as it arrives.
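The streaming step can be sketched as an SNS-triggered Lambda handler. This sketch assumes the Bedrock Runtime `converse_stream` operation available in boto3 (the original solution may use a different streaming call, such as `invoke_model_with_response_stream`) and an SNS message carrying hypothetical `conversationId` and `prompt` fields. The `publish` callback is also hypothetical; in the architecture described here it would send one AppSync mutation per token chunk. The Bedrock client is injected so the logic can be exercised without AWS credentials.

```python
import json
from typing import Callable

# Hypothetical publisher signature: (conversation_id, sequence_number, text).
Publisher = Callable[[str, int, str], None]


def stream_answer(bedrock_client, model_id: str, prompt: str,
                  on_text: Callable[[int, str], None]) -> str:
    """Call Bedrock ConverseStream and forward each text delta as soon as it arrives."""
    response = bedrock_client.converse_stream(
        modelId=model_id,
        messages=[{"role": "user", "content": [{"text": prompt}]}],
    )
    parts = []
    for event in response["stream"]:
        # Text arrives in contentBlockDelta events; other event types
        # (messageStart, contentBlockStop, messageStop, metadata) are skipped.
        text = event.get("contentBlockDelta", {}).get("delta", {}).get("text")
        if text:
            on_text(len(parts), text)
            parts.append(text)
    return "".join(parts)


def make_handler(bedrock_client, model_id: str, publish: Publisher):
    """Build a Lambda-style handler for SNS events (client injected for testability)."""
    def handler(event, context):
        for record in event["Records"]:
            # SNS delivers the published payload as a string under Sns.Message.
            message = json.loads(record["Sns"]["Message"])
            conversation_id = message["conversationId"]  # hypothetical field
            stream_answer(
                bedrock_client, model_id, message["prompt"],  # hypothetical field
                lambda seq, text: publish(conversation_id, seq, text),
            )
        return {"processed": len(event["Records"])}
    return handler
```

In a deployed function, the client would typically be created once, outside the handler, with `boto3.client("bedrock-runtime")`, and `model_id` would be the ID of a model enabled in the account.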

The results were significant: the financial services firm reported that response times for complex queries dropped from about 10 seconds to 2 to 3 seconds. The improvement speeds up user interactions and raises satisfaction and engagement within its applications, which can translate into lower abandonment rates and greater customer involvement.
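As a quick check on what the reported numbers mean, the short calculation below converts them into a relative reduction and a speedup factor. It uses only the approximate figures from the article (about 10 seconds before, 2 to 3 seconds after); the function names are illustrative.

```python
def percent_reduction(before_s: float, after_s: float) -> float:
    """Latency reduction as a percentage of the original response time."""
    return (before_s - after_s) / before_s * 100


def speedup(before_s: float, after_s: float) -> float:
    """How many times faster the new response time is."""
    return before_s / after_s


# Approximate figures reported in the article: about 10 s before, 2 to 3 s after.
for after in (3.0, 2.0):
    print(f"{after:.0f} s: {percent_reduction(10.0, after):.0f}% less time, "
          f"{speedup(10.0, after):.1f}x speedup")
# prints:
# 3 s: 70% less time, 3.3x speedup
# 2 s: 80% less time, 5.0x speedup
```

In other words, the reported change corresponds to roughly a 70 to 80 percent reduction in waiting time.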

via: MiMub in Spanish