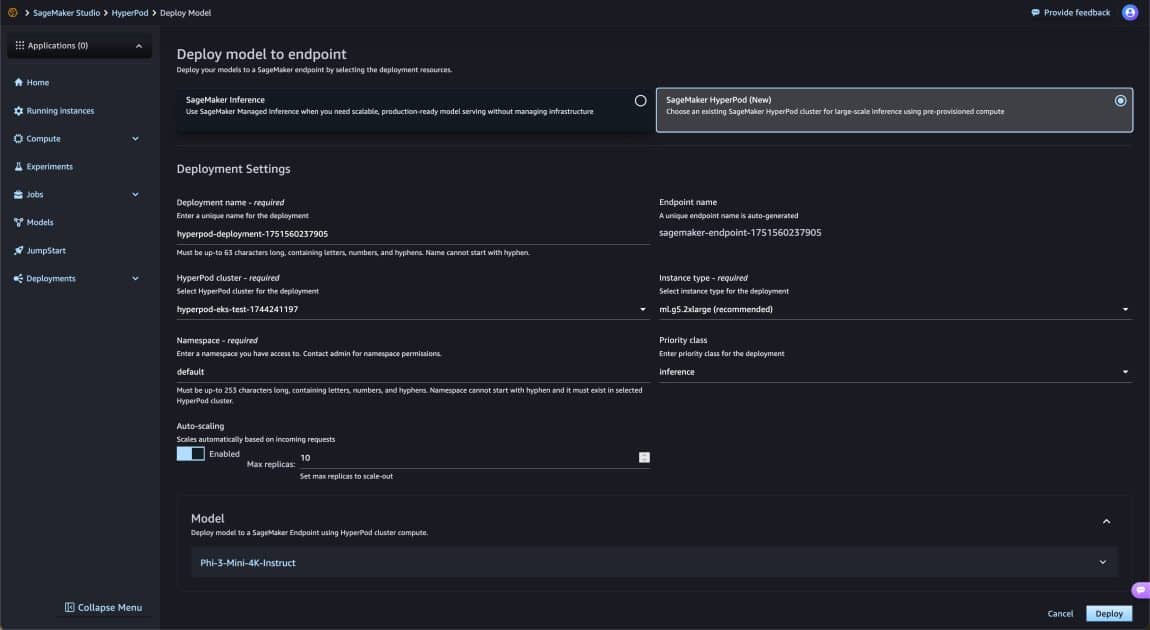

Amazon today announced a major update to SageMaker HyperPod: users can now deploy both foundation models from Amazon SageMaker JumpStart and custom models from storage such as Amazon S3 or Amazon FSx. They can train, fine-tune, and deploy models on the same HyperPod infrastructure, which makes better use of resources across the entire model lifecycle.

Since its launch in 2023, SageMaker HyperPod has proven to be a high-performance, resilient platform for large-scale model training and fine-tuning. Model builders have adopted it to reduce costs, minimize downtime, and shorten time to market. Through its integration with Amazon EKS, users can orchestrate their HyperPod clusters with Kubernetes, which simplifies resource and process management.

The release lays the groundwork for faster deployment of foundation models. Users can now deploy more than 400 open-weight models from SageMaker JumpStart with a single click, and can choose among flexible options for deploying custom models from various sources. Organizations that use Kubernetes as part of their artificial intelligence strategy will benefit from more efficient workflows and simpler deployments.

Deployments scale automatically with demand, so models can absorb traffic spikes while running efficiently during quieter periods. In addition, HyperPod task governance is designed to prioritize inference workloads, ensuring efficient use of available resources.
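To make demand-based scaling concrete, here is a minimal sketch of the replica rule documented for the Kubernetes Horizontal Pod Autoscaler: desired replicas = ceil(current replicas × current metric / target metric). The article does not say which scaling mechanism HyperPod uses, so this is only an illustration of the general idea. The function name, parameters, and the replica bounds are hypothetical.

```python
import math


def desired_replicas(
    current_replicas: int,
    current_metric: float,
    target_metric: float,
    min_replicas: int = 1,
    max_replicas: int = 10,
) -> int:
    """Illustrative HPA-style rule; NOT the HyperPod implementation.

    current_metric is the observed average load per replica
    (for example, requests per second), and target_metric is the
    load each replica should ideally carry.
    """
    if target_metric <= 0:
        raise ValueError("target_metric must be positive")

    # Scale the replica count in proportion to observed vs. target load.
    raw = math.ceil(current_replicas * current_metric / target_metric)

    # Clamp to the configured bounds (hypothetical defaults).
    return max(min_replicas, min(max_replicas, raw))


if __name__ == "__main__":
    # Traffic spike: each of 2 replicas sees 150 req/s against a target of 100.
    print(desired_replicas(2, 150.0, 100.0))
    # Quiet period: each of 4 replicas sees 20 req/s against a target of 100.
    print(desired_replicas(4, 20.0, 100.0))
```

In this sketch, a spike pushes the replica count up in proportion to the overload, and a quiet period lets it fall back toward the minimum, which is the behavior the paragraph above describes.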

The new capabilities target several types of users, from system administrators to data scientists and machine learning operations engineers. New tools and metrics make inference workloads easier to observe and manage.

With these additions, Amazon SageMaker HyperPod is positioned as a single platform for organizations that want to streamline the lifecycle of their artificial intelligence models, with a smoother handoff between training and production. The approach promises both greater operational efficiency and faster deployment of models to production environments.

Source: MiMub in Spanish