Delivering generative artificial intelligence as software as a service (SaaS) has become increasingly important in today's digital economy. Balancing the scalability of these services against their cost, however, is a significant challenge, especially for a multi-tenant generative AI service with a diverse customer base. The challenge is compounded by the need for rigorous cost controls and comprehensive usage monitoring.

Traditional cost management approaches on these platforms often fall short. A key problem for operations teams is accurately attributing costs to each tenant, which is made harder by widely varying usage patterns: some customers show abrupt consumption spikes at critical moments, while others consume at a steadier rate.

To address these problems, the proposed solution includes a dynamic, context-aware alert system that goes beyond conventional monitoring. Alert levels ranging from green (normal operations) to red (critical intervention required) make it possible to trigger automated responses tailored to changes in usage patterns. This helps prevent cost overruns and supports proactive resource management and precise cost allocation.
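As an illustration of graded alerts, the following minimal sketch maps a tenant's spend against its budget to an alert level. The source names only the green and red endpoints; the intermediate "yellow" level, the 80% and 100% thresholds, and the names `AlertLevel` and `alert_level` are hypothetical choices for this example.

```python
from enum import Enum


class AlertLevel(Enum):
    GREEN = "green"    # normal operations
    YELLOW = "yellow"  # hypothetical intermediate level: watch closely
    RED = "red"        # critical: intervention required


def alert_level(spend: float, budget: float,
                warn_ratio: float = 0.8, critical_ratio: float = 1.0) -> AlertLevel:
    """Map the spend-to-budget ratio to an alert level (thresholds are assumptions)."""
    if budget <= 0:
        raise ValueError("budget must be positive")
    ratio = spend / budget
    if ratio >= critical_ratio:
        return AlertLevel.RED
    if ratio >= warn_ratio:
        return AlertLevel.YELLOW
    return AlertLevel.GREEN


# Hypothetical tenants: (month-to-date spend, monthly budget) in USD
tenants = {"tenant-a": (120.0, 500.0), "tenant-b": (430.0, 500.0), "tenant-c": (510.0, 500.0)}
for name, (spend, budget) in tenants.items():
    print(name, alert_level(spend, budget).value)
# tenant-a green
# tenant-b yellow
# tenant-c red
```

Each level could then be wired to its own automated response, for example a notification at yellow and throttling or an on-call page at red; which responses to attach is a design decision the source leaves open.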

The most critical situation typically arises when a significant cost overrun is detected that has no single cause: several tenants increase their consumption at the same time, and the monitoring system fails to anticipate the trend. Conventional alerting, which often offers only binary (alarm or no alarm) notifications, may be insufficient in these cases. The problem becomes harder under a tiered pricing model, where prices depend on usage commitments and quotas. Without an alert system that can distinguish normal usage spikes from real problems, operations teams are left reacting after the fact.
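One simple way to separate a one-off spike from a sustained increase is to compare recent daily costs with a statistical baseline. The sketch below is a hypothetical example, not the article's method: it treats a day as anomalous when its cost exceeds the historical mean by more than `k` standard deviations (k = 3 is an assumption), and reports a sustained rise only when the last `sustained_days` days are all anomalous.

```python
from statistics import mean, stdev


def classify_usage(history: list[float], recent: list[float],
                   k: float = 3.0, sustained_days: int = 3) -> str:
    """Classify recent daily costs against a historical baseline.

    history: baseline daily costs (stdev needs at least two values)
    recent:  most recent daily costs, oldest first
    Returns "sustained", "spike", or "normal".
    """
    threshold = mean(history) + k * stdev(history)
    above = [cost > threshold for cost in recent]
    # Several consecutive anomalous days suggest a real change in consumption.
    if len(above) >= sustained_days and all(above[-sustained_days:]):
        return "sustained"
    # A single anomalous latest day is treated as a (possibly benign) spike.
    if above and above[-1]:
        return "spike"
    return "normal"


baseline = [10, 11, 9, 10, 10, 12, 8]  # hypothetical daily costs in USD
print(classify_usage(baseline, [10, 11, 20]))  # spike
print(classify_usage(baseline, [15, 16, 18]))  # sustained
print(classify_usage(baseline, [10, 12, 11]))  # normal
```

The same check can be run on the combined cost of all tenants as well as per tenant, since the multi-tenant overrun described above may only become visible in the total.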

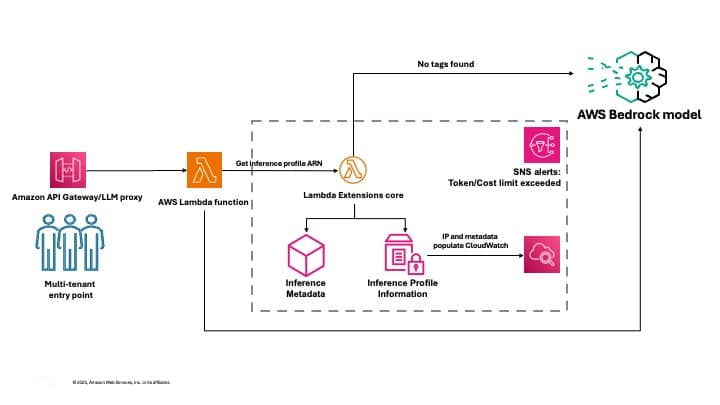

An effective way to manage costs in a multi-tenant generative AI environment is to use Amazon Bedrock application inference profiles. These profiles enable fine-grained cost tracking by associating metadata (tags) with inference requests, creating a logical separation between the applications or customers that use the foundation models. With a consistent tagging strategy, every API call and its resulting consumption can be systematically attributed to the tenant responsible for it.
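To make the attribution concrete, the following sketch sums per-tenant cost from invocation records. It assumes each record already carries the tenant ID taken from the tag on the inference profile used for the call; the `InvocationRecord` type, the model name, and the per-1,000-token prices are hypothetical placeholders, not actual Amazon Bedrock prices or API fields.

```python
from collections import defaultdict
from dataclasses import dataclass

# Hypothetical (input, output) prices in USD per 1,000 tokens; real prices
# depend on the model and Region.
PRICE_PER_1K_TOKENS = {"example-model": (0.002, 0.008)}


@dataclass(frozen=True)
class InvocationRecord:
    tenant_id: str      # assumed to come from the inference profile's tenant tag
    model_id: str
    input_tokens: int
    output_tokens: int


def cost_by_tenant(records: list[InvocationRecord]) -> dict[str, float]:
    """Attribute the token cost of every invocation to its tenant."""
    totals: dict[str, float] = defaultdict(float)
    for r in records:
        in_price, out_price = PRICE_PER_1K_TOKENS[r.model_id]
        totals[r.tenant_id] += (r.input_tokens * in_price + r.output_tokens * out_price) / 1000
    return dict(totals)


records = [
    InvocationRecord("tenant-a", "example-model", 2000, 500),
    InvocationRecord("tenant-b", "example-model", 1000, 1000),
    InvocationRecord("tenant-a", "example-model", 1000, 250),
]
print(cost_by_tenant(records))
```

Keying the totals on the tag value rather than on the model means that tenants sharing the same foundation model are still billed separately.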

The solution's architecture covers data collection and usage aggregation, storage of historical metrics for trend analysis, and presentation of the resulting insights through dashboards. Together, these components provide the visibility and control needed to manage Amazon Bedrock costs, while leaving room to customize the setup to each organization's specific needs.
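For the aggregation and historical-storage step, a minimal sketch might roll individual usage events up into daily totals per tenant, a convenient granularity for trend charts and baselines. The event shape `(timestamp, tenant_id, cost_usd)` and the function name `daily_rollup` are hypothetical; the source does not specify the data model.

```python
from collections import defaultdict
from datetime import date, datetime, timedelta, timezone


def daily_rollup(events: list[tuple[datetime, str, float]]) -> dict[tuple[date, str], dict]:
    """Aggregate (timestamp, tenant_id, cost_usd) events into per-day, per-tenant totals.

    Timestamps must be timezone-aware; they are converted to UTC before taking
    the calendar date so every event lands on a consistent day.
    """
    rollup: dict = defaultdict(lambda: {"requests": 0, "cost_usd": 0.0})
    for ts, tenant_id, cost in events:
        if ts.tzinfo is None:
            raise ValueError("timestamps must be timezone-aware")
        day = ts.astimezone(timezone.utc).date()
        bucket = rollup[(day, tenant_id)]
        bucket["requests"] += 1
        bucket["cost_usd"] += cost
    return dict(rollup)


# Hypothetical usage events
events = [
    (datetime(2024, 5, 1, 9, 30, tzinfo=timezone.utc), "tenant-a", 0.25),
    (datetime(2024, 5, 1, 17, 0, tzinfo=timezone.utc), "tenant-a", 0.50),
    # 01:00 at UTC+2 is 23:00 UTC on May 1, so it counts toward May 1.
    (datetime(2024, 5, 2, 1, 0, tzinfo=timezone(timedelta(hours=2))), "tenant-a", 0.25),
    (datetime(2024, 5, 2, 8, 0, tzinfo=timezone.utc), "tenant-b", 1.00),
]
for (day, tenant), stats in sorted(daily_rollup(events).items()):
    print(day, tenant, stats["requests"], stats["cost_usd"])
# 2024-05-01 tenant-a 3 1.0
# 2024-05-02 tenant-b 1 1.0
```

Where these rows are persisted and how they are charted is not specified in the source, so the sketch stops at the in-memory rollup.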

Beyond tracking model usage, this solution enables precise cost allocation and optimization of resource consumption across tenants. Further refinements based on feedback and observed usage patterns can make resource management for generative AI workloads more effective and efficient.

Source: MiMub in Spanish