Here’s the translation to American English:

—

Vertiv has unveiled new reference architectures that promise to revolutionize infrastructure for artificial intelligence, specifically for the NVIDIA Omniverse DSX Blueprint. This move comes in the context of increasing demand for generative AI solutions, where the company claims it will be able to reduce the deployment time, known as Time to First Token, by up to 50%.

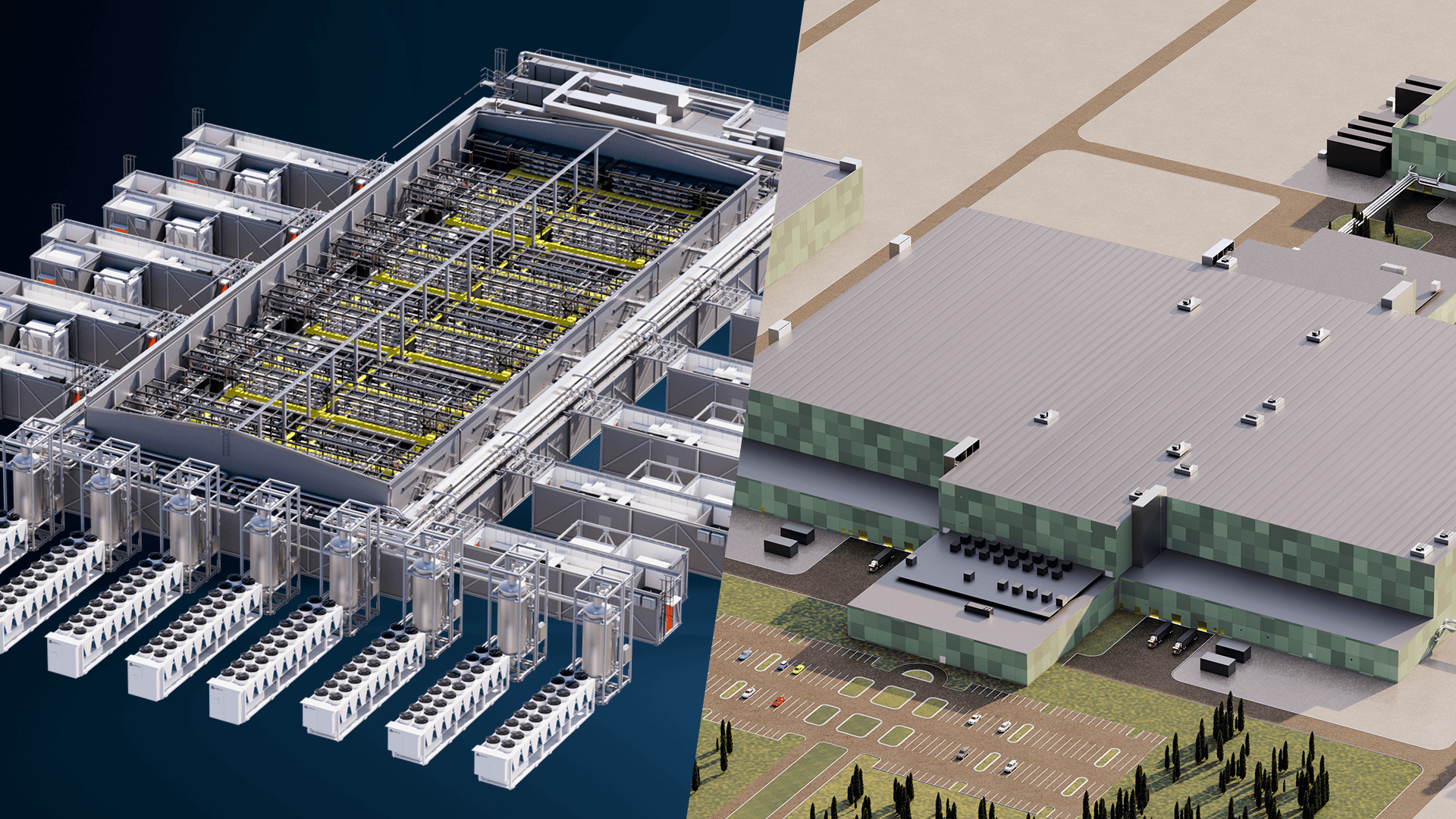

The key to this innovation is the prefabricated Vertiv™ OneCore platform, which combines computing, power, cooling, and services into a single system. This modular solution not only accelerates delivery times but also maximizes space utilization and enhances performance, adapting to each client’s individual needs.

Scott Armul, Executive Vice President of Vertiv, emphasized the importance of collaborating with NVIDIA to develop cutting-edge solutions in this growing field. The company’s reference architectures offer remarkable flexibility in deployment modes, ranging from more traditional strategies to fully prefabricated options.

A key innovation in this proposal is the grid-to-chip power system, which maximizes efficiency and reduces the physical footprint of installations. Additionally, an advanced liquid cooling solution is implemented, capable of handling the high thermal demands of accelerated computing, ensuring optimal performance for future generations of processors.

Dion Harris, Senior Director at NVIDIA, stressed that the partnership between the two companies is crucial for establishing a strong ecosystem to address the challenges posed by the growth of artificial intelligence. The combination of these flexible and rapidly deployable architectures positions Vertiv and NVIDIA at the forefront of industry transformation.

Finally, Vertiv’s new architectures are supported by a broad portfolio of power and cooling solutions, along with a global network of over 4,000 field technicians, ensuring the efficiency and scalability needed for future artificial intelligence installations.

Source: MiMub in Spanish