Cohere Embed 4, a multimodal embedding model aimed at enterprise search, is now available on Amazon Bedrock as a fully managed, serverless offering. The model can be invoked through cross-Region or global inference, which absorbs unexpected traffic spikes by drawing on compute capacity in several AWS Regions. Bursts of real-time requests and usage concentrated in particular time zones are examples of events that can increase inference demand.

Cohere Embed 4 is designed for analyzing business documents. It offers strong multilingual capabilities and significant improvements over its predecessor, Embed 3, which makes it well suited to enterprise search.

The model handles documents that combine text and images and generates a single, unified vector representation for them. It can also process up to 128,000 tokens of context, which reduces the need to split documents into chunks and simplifies data preparation. In addition, it supports compressed embeddings that can cut vector storage costs by up to 83%, an important consideration for businesses in regulated sectors that must manage large volumes of unstructured documents efficiently.
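The source does not say how the 83% figure is derived. One combination that reproduces it, assumed here purely for illustration, is shortening each embedding from 1,536 to 256 float32 values. The sketch below works through that arithmetic; the corpus size and the function names are hypothetical, and the calculation ignores index and metadata overhead.

```python
def vector_storage_bytes(num_vectors: int, dimensions: int, bytes_per_value: int) -> int:
    """Raw size of the embedding payload, ignoring index and metadata overhead."""
    return num_vectors * dimensions * bytes_per_value


def reduction(baseline_bytes: int, compressed_bytes: int) -> float:
    """Fraction of storage saved relative to the baseline."""
    return 1.0 - compressed_bytes / baseline_bytes


# Hypothetical corpus size, chosen only to make the numbers concrete.
NUM_VECTORS = 10_000_000

# Assumption: float32 values (4 bytes each) at 1,536 vs. 256 dimensions.
baseline = vector_storage_bytes(NUM_VECTORS, 1536, 4)
compressed = vector_storage_bytes(NUM_VECTORS, 256, 4)

print(f"baseline:   {baseline / 1e9:.2f} GB")
print(f"compressed: {compressed / 1e9:.2f} GB")
print(f"saved:      {reduction(baseline, compressed):.1%}")
```

Under these assumptions the payload shrinks from 61.44 GB to 10.24 GB, a saving of about 83.3%. Other combinations of dimension and numeric precision would give different figures.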

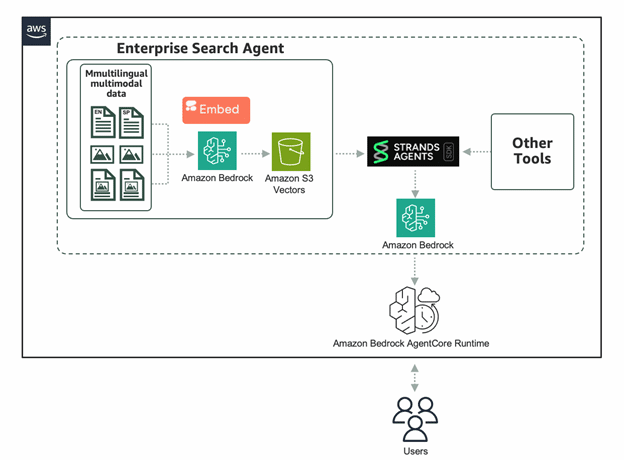

Applications call Cohere Embed 4 through the Amazon Bedrock InvokeModel API. The model also works with other AWS tools and services, such as Strands Agents and Amazon S3 Vectors, to support retrieval-augmented generation (RAG) workflows.
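As a minimal sketch of what an InvokeModel call might look like with boto3, consider the following. The model identifier and the request fields (`texts`, `input_type`, `embedding_types`) are assumptions based on Cohere's Embed request format and are not confirmed by this article; check the Bedrock model documentation for the exact schema and for the inference profile ID to use with cross-Region or global inference. The helper names are hypothetical.

```python
import json

# Assumed model identifier; verify the ID (or inference profile ID)
# available in your account before using it.
MODEL_ID = "cohere.embed-v4:0"


def build_embed_request(texts, input_type="search_document"):
    """Serialize an embedding request body.

    The field names are assumptions modeled on Cohere's Embed API.
    """
    return json.dumps(
        {
            "texts": list(texts),
            "input_type": input_type,
            "embedding_types": ["float"],
        }
    )


def embed_texts(texts, region_name="us-east-1"):
    """Call InvokeModel and return the parsed JSON response as a dict."""
    import boto3  # imported lazily so the request builder works without the AWS SDK

    client = boto3.client("bedrock-runtime", region_name=region_name)
    response = client.invoke_model(
        modelId=MODEL_ID,
        body=build_embed_request(texts),
        contentType="application/json",
        accept="application/json",
    )
    # The response body is a stream; read it once and decode the JSON payload.
    return json.loads(response["body"].read())
```

Calling `embed_texts(["quarterly revenue summary"])` requires AWS credentials and access to the model. The function returns the raw response dictionary rather than assuming where the vectors sit inside it.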

Beyond storage efficiency, the model improves generative AI workflows in enterprise settings. Because Amazon Bedrock is fully serverless, there is no infrastructure to manage, and integration with other Bedrock features is simpler.

Before using Embed 4, verify a few prerequisites: the necessary IAM permissions, an installation of the Strands Agents SDK, and a vector bucket and vector index in Amazon S3 Vectors for storing and querying vector data. Strands Agents provides a modular framework for developing and orchestrating AI agents.
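The storage and query step can be sketched as follows. This assumes the boto3 `s3vectors` client and its `put_vectors` and `query_vectors` operations, and it assumes the vector bucket and index already exist. The bucket name, index name, metadata field, and helper names are all hypothetical placeholders, and the embeddings would come from a separate call to the model.

```python
def to_vector_records(keys, embeddings, texts):
    """Pair each embedding with a key and its source text as metadata."""
    if not (len(keys) == len(embeddings) == len(texts)):
        raise ValueError("keys, embeddings, and texts must have the same length")
    return [
        {
            "key": key,
            "data": {"float32": [float(x) for x in embedding]},
            "metadata": {"source_text": text},  # hypothetical metadata field
        }
        for key, embedding, text in zip(keys, embeddings, texts)
    ]


def store_and_query(records, query_embedding,
                    bucket="example-vector-bucket",  # hypothetical name
                    index="example-index",           # hypothetical name
                    top_k=3):
    """Write records to an existing vector index, then run a similarity query."""
    import boto3  # imported lazily so the record builder works without the AWS SDK

    client = boto3.client("s3vectors")
    client.put_vectors(vectorBucketName=bucket, indexName=index, vectors=records)
    return client.query_vectors(
        vectorBucketName=bucket,
        indexName=index,
        queryVector={"float32": [float(x) for x in query_embedding]},
        topK=top_k,
        returnMetadata=True,
        returnDistance=True,
    )
```

The index dimension must match the length of the embeddings being written, so a change to the model's output dimension generally means creating a new index.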

Once an agent is built and tested, it can be deployed to Amazon Bedrock AgentCore, a secure, managed environment designed for deploying and scaling dynamic agents. Combined with Amazon S3 Vectors for vector storage, this lets organizations build retrieval workflows securely without managing the underlying infrastructure.

In summary, Embed 4 on Amazon Bedrock is a significant step forward for companies looking to get more value from their multimodal and unstructured data. Its long context window and lower storage costs make it a practical option for regulated sectors such as finance, healthcare, and manufacturing.

Source: MiMub in Spanish