In recent months, large language models (LLMs) have advanced notably, driving the adoption of virtual assistants across many companies. These assistants have proven valuable for customer service and internal efficiency, largely through retrieval-augmented generation (RAG). In RAG, the system first retrieves passages from a company's own documents that are relevant to a question, then passes them to a language model, which generates an answer grounded in that content.
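To make the retrieve-then-generate idea concrete, here is a minimal Python sketch. It is illustrative only: the word-count `embed` function is a stand-in for a real embedding model, the documents are made up, and instead of calling a language model the sketch stops at building the prompt that would be sent to one.

```python
import math
import re
from collections import Counter


def embed(text: str) -> Counter:
    """Toy 'embedding': lowercase word counts (stand-in for a real embedding model)."""
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))


def cosine(a: Counter, b: Counter) -> float:
    """Cosine similarity between two sparse word-count vectors."""
    dot = sum(a[t] * b[t] for t in a.keys() & b.keys())
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0


def retrieve(question: str, documents: list[str], k: int = 2) -> list[str]:
    """Retrieval step: rank documents by similarity to the question, keep the top k."""
    q = embed(question)
    ranked = sorted(documents, key=lambda d: cosine(q, embed(d)), reverse=True)
    return ranked[:k]


def build_prompt(question: str, passages: list[str]) -> str:
    """Generation step input: the retrieved passages become the model's context."""
    context = "\n".join(f"- {p}" for p in passages)
    return (
        "Answer the question using only the context below.\n"
        f"Context:\n{context}\n"
        f"Question: {question}"
    )


docs = [
    "Returns are accepted within 30 days with the original receipt.",
    "Our support line is open Monday to Friday, 9am to 5pm.",
    "The X200 printer uses cartridge model C-45.",
]
question = "Which cartridge does the X200 printer use?"
top = retrieve(question, docs, k=1)
print(build_prompt(question, top))
```

A production system would compute embeddings with a model and search them in a vector database, usually with approximate nearest-neighbor search, but the idea is conceptually the same: score passages against the question and hand the best ones to the model as context.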

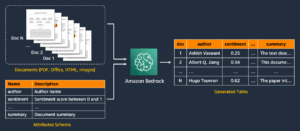

A significant development in this field is the availability of multimodal foundation models, which can interpret images and generate text about them. Although they are broadly useful, these models know only what was in their training data, so on their own they cannot answer questions about a specific company's products or documents.

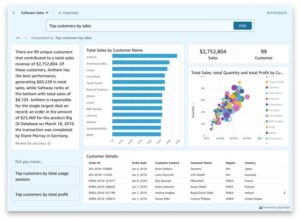

In this context, Amazon Web Services (AWS) has built a multimodal chat assistant using models available through Amazon Bedrock. The system lets users submit an image together with a question and receive answers grounded in a specific set of company documents. The technology has potential applications in industries such as retail, where it could help drive product sales, and equipment manufacturing, where it could support maintenance and repair.

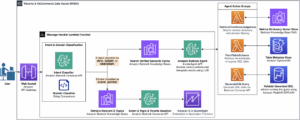

AWS’s solution starts by building a vector database of the company's documents in Amazon OpenSearch Service; the chat assistant itself is then deployed with an AWS CloudFormation template.

At run time, the flow begins when a user uploads an image and asks a question. Amazon API Gateway passes the request to an AWS Lambda function, which acts as the core orchestrator. The function stores the image in Amazon S3 for later analysis and then calls a Bedrock model to describe the image, calls a Bedrock model to generate an embedding (a vector representation) of the question combined with the image description, uses that embedding to retrieve relevant documents from OpenSearch, and finally calls a Bedrock model to generate an answer grounded in those documents. The query and response are stored in Amazon DynamoDB together with the image's identifier in S3.
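The request flow described above can be sketched in plain Python. This is a minimal illustration under stated assumptions, not AWS's code: the `Services` wrapper, `handle_request`, `knn_query`, the `embedding` field name and the `uploads/` key layout are all hypothetical, and each AWS call is injected as a function so the sketch runs without an account. The query body follows the OpenSearch k-NN query syntax.

```python
import uuid
from dataclasses import dataclass
from typing import Callable


@dataclass
class Services:
    """Hypothetical wrappers around the AWS calls, injected so the flow runs locally."""
    store_image: Callable[[str, bytes], None]  # e.g. wraps s3.put_object(Bucket=..., Key=..., Body=...)
    describe_image: Callable[[bytes], str]     # e.g. wraps a multimodal model via bedrock-runtime invoke_model
    embed: Callable[[str], list[float]]        # e.g. wraps an embedding model via invoke_model
    search: Callable[[dict], list[str]]        # e.g. wraps opensearch-py client.search(...) and extracts hit text
    generate: Callable[[str], str]             # e.g. wraps a text model via invoke_model
    save_turn: Callable[[dict], None]          # e.g. wraps a DynamoDB Table.put_item(Item=...)


def knn_query(vector: list[float], k: int = 3, field: str = "embedding") -> dict:
    """OpenSearch k-NN query body; the vector field name is an assumption."""
    return {"size": k, "query": {"knn": {field: {"vector": vector, "k": k}}}}


def handle_request(image: bytes, question: str, svc: Services) -> dict:
    # 1. Keep the uploaded image in object storage under a fresh key.
    image_key = f"uploads/{uuid.uuid4()}.jpg"
    svc.store_image(image_key, image)
    # 2. Turn the image into text the retriever can use.
    description = svc.describe_image(image)
    # 3. Embed question + description together and search the vector index.
    vector = svc.embed(f"{question}\n{description}")
    passages = svc.search(knn_query(vector))
    # 4. Ask the model to answer from the retrieved passages only.
    context = "\n".join(passages)
    prompt = (f"Image description: {description}\nContext:\n{context}\n"
              f"Question: {question}\nAnswer using only the context.")
    answer = svc.generate(prompt)
    # 5. Log the exchange with a pointer to the stored image.
    svc.save_turn({"id": str(uuid.uuid4()), "question": question,
                   "answer": answer, "image_key": image_key})
    return {"answer": answer, "image_key": image_key}


# In-memory fakes standing in for S3, DynamoDB, OpenSearch and Bedrock.
bucket: dict[str, bytes] = {}
table: list[dict] = []
queries: list[dict] = []


def fake_search(body: dict) -> list[str]:
    queries.append(body)
    return ["Listing A: red hatchback, 2019, manual gearbox"]


fake = Services(
    store_image=bucket.__setitem__,
    describe_image=lambda data: "a red hatchback",
    embed=lambda text: [0.1, 0.2, 0.3],
    search=fake_search,
    generate=lambda prompt: "Listing A is a similar red hatchback.",
    save_turn=table.append,
)

result = handle_request(b"fake-image-bytes", "Do you have a car like this?", fake)
print(result["answer"])  # Listing A is a similar red hatchback.
```

Injecting the service calls keeps the orchestration logic testable on its own; in a deployed Lambda function, each field would wrap the corresponding boto3 or opensearch-py client call.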

Because answers are grounded in each company's own data, they are more precise and better suited to context, which can improve the user experience and operational efficiency. The solution can also be customized and scaled, so companies can adapt the assistant to their needs and explore new forms of human-machine interaction.

A standout use case is the automotive market, where users can upload a photo of a vehicle and ask questions that are answered from a database of car listings. The example shows how broadly the technology can be applied and its potential to change how companies in different sectors interact with their customers.

Source: MiMub (in Spanish)