In artificial intelligence, adapting language models to specific industries has become a priority. Large models handle a broad range of natural language tasks well, but their accuracy drops on specialized tasks in domains with their own terminology. This gap is particularly visible in the automotive industry.

In the automotive sector, accurate diagnostics often depend on Diagnostic Trouble Codes (DTCs), the codes a vehicle's onboard diagnostic system reports when it detects a fault; some are standardized and others are defined by individual manufacturers. Codes such as P0300, which indicates random or multiple cylinder misfires in the engine, or C1201, associated with faults reported through the ABS system, are critical for an appropriate diagnosis. However, when users do not provide these codes, general-purpose language models may generate inaccurate or irrelevant responses, hallucinating diagnoses that do not match the actual problem.

This is why customizing these models matters in industries like automotive, where accuracy is essential. Users often describe problems vaguely, for example as an “engine running irregularly,” without giving specifics such as DTCs. Lacking that context, a generative model may propose a wide range of possible causes, many of them wrong.
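A system built on such models first needs to know whether the user supplied a DTC at all. The sketch below is one hypothetical way to check; it is not taken from the AWS case study. It assumes the common OBD-II code format (a system letter P, C, B or U, then a digit from 0 to 3 and three hexadecimal characters), and `extract_dtcs` and `has_dtc` are illustrative names.

```python
import re

# Assumed OBD-II-style DTC format: system letter (P=powertrain, C=chassis,
# B=body, U=network), a digit 0-3, then three hexadecimal characters.
DTC_PATTERN = re.compile(r"\b([PCBU][0-3][0-9A-F]{3})\b", re.IGNORECASE)


def extract_dtcs(text: str) -> list[str]:
    """Return the DTCs found in free text, upper-cased, in order of appearance."""
    return [code.upper() for code in DTC_PATTERN.findall(text)]


def has_dtc(text: str) -> bool:
    """True if the text mentions at least one well-formed DTC."""
    return bool(extract_dtcs(text))


print(extract_dtcs("Check engine light is on, scanner shows p0300 and C1201"))
print(has_dtc("engine running irregularly"))
```

A vague question such as “engine running irregularly” yields no codes, which is exactly the case where a general-purpose model is most likely to guess; a pipeline could use that signal to ask the user for the code or to supply extra context to the model.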

One viable solution is Small Language Models (SLMs), which are cheaper and more efficient to train and deploy than their larger counterparts. Typically ranging from 1 to 8 billion parameters, they can be specialized for particular tasks and can run on devices with limited resources. Techniques such as Low-Rank Adaptation (LoRA) make them easier to customize: instead of updating all of a model's weights, LoRA trains a small pair of low-rank matrices, which lets companies tailor a model to the needs of their industry.
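The core idea of LoRA fits in a few lines of NumPy. A pretrained weight matrix `W` stays frozen, and the learned update is the product of two small trainable matrices `B` (d_out × r) and `A` (r × d_in), scaled by `alpha / r` as in the original LoRA formulation. This is a minimal illustration of the math under assumed sizes, not the AWS implementation; in practice a library such as Hugging Face PEFT applies the same idea inside a real model.

```python
import numpy as np


def lora_forward(x, W, A, B, alpha):
    """Frozen linear layer W plus a low-rank update (alpha / r) * B @ A."""
    r = A.shape[0]
    # x @ W.T is the base layer; x @ A.T @ B.T is the rank-r correction.
    return x @ W.T + (alpha / r) * (x @ A.T @ B.T)


def merge_lora(W, A, B, alpha):
    """Fold the adapter into a single weight matrix for deployment."""
    r = A.shape[0]
    return W + (alpha / r) * (B @ A)


rng = np.random.default_rng(0)
d_in, d_out, r, alpha = 512, 512, 8, 16  # illustrative sizes, not from the source

W = rng.standard_normal((d_out, d_in))     # pretrained weights, kept frozen
A = rng.standard_normal((r, d_in)) * 0.01  # trainable, small random init
B = np.zeros((d_out, r))                   # trainable, zero init
x = rng.standard_normal((4, d_in))         # a batch of 4 input vectors

# With B = 0 the adapter is a no-op: output equals the base layer.
y_initial = lora_forward(x, W, A, B, alpha)

# Pretend training moved B away from zero, then merge for inference.
B_trained = rng.standard_normal((d_out, r)) * 0.01
y_adapted = lora_forward(x, W, A, B_trained, alpha)
y_merged = x @ merge_lora(W, A, B_trained, alpha).T

full_params = d_out * d_in        # parameters updated by full fine-tuning
lora_params = r * (d_in + d_out)  # parameters updated by LoRA
print(full_params, lora_params)   # 262144 8192
```

Because `B` starts at zero, the adapted layer initially behaves exactly like the base layer, and after training the update can be merged into `W` so inference needs no extra computation. With these sizes the adapter trains 8,192 parameters instead of the 262,144 in the full matrix.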

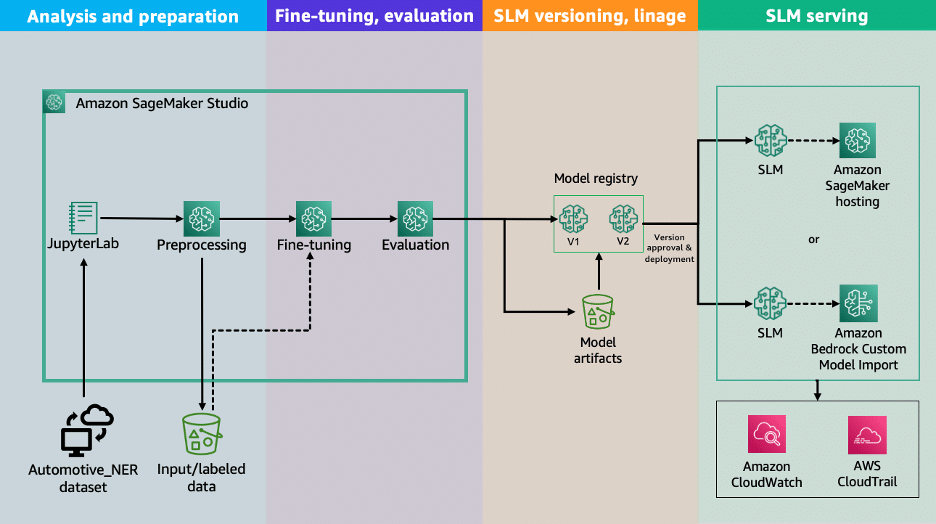

Amazon Web Services (AWS) offers services for working with language models, such as Amazon Bedrock and Amazon SageMaker. They let companies build, train, and deploy both large general-purpose models and customized models tuned to their specific needs.

In a case study, AWS showed how to customize a small language model for automotive diagnostics framed as a question-answering task. The workflow covers everything from data analysis to model deployment and evaluation, and highlights the advantages of SLMs over general-purpose models.
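The source does not say which metrics the case study used to evaluate answers. As one common option for question answering, the sketch below computes a simplified token-level F1 score, in the spirit of the SQuAD metric, between a model's answer and a reference answer. The tokenization rule, the function names, and the example answers are assumptions for illustration only.

```python
import re
from collections import Counter


def tokenize(text: str) -> list[str]:
    # Lower-case and keep alphanumeric runs, so "P0300," and "p0300" match.
    return re.findall(r"[a-z0-9]+", text.lower())


def token_f1(prediction: str, reference: str) -> float:
    """Harmonic mean of token precision and recall (bag-of-words overlap)."""
    pred, ref = tokenize(prediction), tokenize(reference)
    if not pred or not ref:
        # Both empty counts as a match; only one empty counts as a miss.
        return float(pred == ref)
    overlap = sum((Counter(pred) & Counter(ref)).values())
    if overlap == 0:
        return 0.0
    precision = overlap / len(pred)
    recall = overlap / len(ref)
    return 2 * precision * recall / (precision + recall)


def mean_f1(pairs: list[tuple[str, str]]) -> float:
    """Average token F1 over (prediction, reference) pairs."""
    return sum(token_f1(p, r) for p, r in pairs) / len(pairs)


# Hypothetical (prediction, reference) pairs, invented for illustration.
pairs = [
    ("P0300 indicates a misfire", "P0300 misfire"),
    ("Replace the brake pads", "Inspect the ignition coils"),
]
print(round(mean_f1(pairs), 3))
```

Token overlap is a coarse proxy: it rewards answers that mention the right codes and components, and even gives partial credit for shared filler words like "the", but it cannot tell whether a diagnosis is actually correct, so it would typically need to be complemented by expert review.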

The approach is not limited to the automotive sector; it can be adapted to other fields with their own terminology and processes. Customizing language models is an effective way to improve the accuracy and relevance of generated responses, making AI tools more useful for the needs of each industry.

Source: MiMub in Spanish