Zeta Global, a leader in cloud-based, data-driven marketing technology, has made artificial intelligence (AI) central to its products. Its AI-driven solutions have changed the way brands connect with their audiences.

Over the past few years, Zeta Global has accumulated 30 patents, both pending and issued, primarily related to the application of deep learning and generative AI in marketing technology. An early adopter of large language model (LLM) technology, Zeta launched Email Subject Line Generation in 2021, a tool that helps marketers write subject lines designed to raise open rates and engagement by adapting to audience preferences and behaviors.

Another notable product is AI Lookalikes, which helps companies identify and target new customers who closely resemble their best existing customers, focusing marketing effort and improving return on investment (ROI). The backbone of these advances is ZOE, Zeta’s Optimization Engine, a multi-agent LLM application that integrates multiple data sources to provide a unified view of the customer, simplify analytical queries, and facilitate the creation of marketing campaigns.
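To make the lookalike idea concrete, the sketch below ranks prospects by cosine similarity to the average profile of a set of "best" customers. This is only an illustration of the general technique, not Zeta's actual AI Lookalikes method, which the article does not describe. The function names, customer ids, and the three pre-scaled features are all hypothetical.

```python
import math


def cosine(a, b):
    """Cosine similarity between two equal-length numeric vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0 or norm_b == 0:
        return 0.0
    return dot / (norm_a * norm_b)


def centroid(vectors):
    """Component-wise mean of a non-empty list of vectors."""
    n = len(vectors)
    return [sum(column) / n for column in zip(*vectors)]


def rank_lookalikes(seed_customers, prospects, top_k=2):
    """Return the ids of the top_k prospects closest to the seed centroid."""
    target = centroid(list(seed_customers.values()))
    ranked = sorted(
        prospects.items(),
        key=lambda item: cosine(item[1], target),
        reverse=True,
    )
    return [prospect_id for prospect_id, _ in ranked[:top_k]]


# Hypothetical features, each already scaled to the 0..1 range:
# [order_frequency, basket_size, email_open_rate]
best_customers = {"c1": [0.8, 0.6, 0.7], "c2": [1.0, 0.5, 0.8]}
prospects = {
    "p1": [0.9, 0.6, 0.75],
    "p2": [0.1, 0.05, 0.9],
    "p3": [0.05, 0.9, 0.1],
}

print(rank_lookalikes(best_customers, prospects, top_k=2))
```

A production system would more likely learn embeddings with a deep model and search them at scale; the centroid-plus-cosine approach is simply the smallest version of "find people who resemble my best customers".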

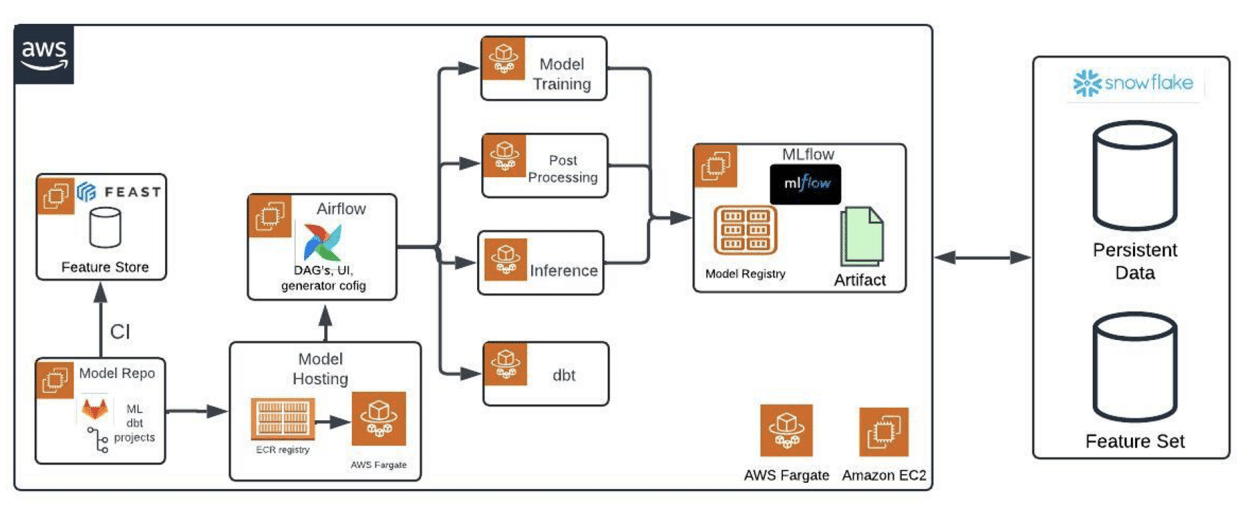

Zeta Global also uses Amazon Elastic Container Service (Amazon ECS) with AWS Fargate to deploy a large number of smaller models. This approach lets the company run machine learning operations (MLOps) at scale, from data ingestion to model deployment, with key tools like Airflow, Feast, dbt, and MLflow integrated into its platform.

Zeta’s MLOps architecture is designed to automate and monitor all stages of the machine learning lifecycle. It uses Airflow for workflow orchestration, Feast for feature management, dbt for data transformation, and MLflow for experiment tracking and model management. These components operate in an Amazon ECS environment, providing a scalable, serverless platform where ML workflows run in containers using Fargate.
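The division of labor among these tools can be pictured as a chain of dependent stages. The sketch below models that chain with Python's standard-library `graphlib` and runs a placeholder handler per stage in dependency order. It is a stand-in for illustration only: it does not use the Airflow, Feast, dbt, or MLflow APIs, and the stage names and the `run_pipeline` helper are assumptions, not Zeta's actual pipeline.

```python
from graphlib import TopologicalSorter

# Each (hypothetical) stage maps to the set of stages it depends on.
PIPELINE = {
    "ingest_data": set(),
    "transform_with_dbt": {"ingest_data"},
    "materialize_features_feast": {"transform_with_dbt"},
    "train_model": {"materialize_features_feast"},
    "log_run_mlflow": {"train_model"},
    "deploy_model": {"log_run_mlflow"},
}


def run_pipeline(stages, handlers):
    """Call each stage's handler in dependency order and return that order."""
    executed = []
    for name in TopologicalSorter(stages).static_order():
        handlers[name]()
        executed.append(name)
    return executed


# Placeholder handlers that only record that the stage ran.
log = []
handlers = {name: (lambda n=name: log.append(f"ran {n}")) for name in PIPELINE}

order = run_pipeline(PIPELINE, handlers)
print(" -> ".join(order))
```

In a real deployment an orchestrator such as Airflow would own this ordering, along with retries and scheduling, and each stage would run as its own containerized task.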

By using Amazon ECS with Fargate, Zeta no longer has to manage the underlying infrastructure, which simplifies application deployment and improves resource utilization. The setup also brings advantages in scalability, cost efficiency, and security, and helps operations keep pace with market demand.
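As a rough picture of what "no infrastructure to manage" means in practice, a Fargate workload is described by a task definition rather than by servers. The sketch below builds a minimal one as a plain dictionary. The field names follow the ECS task definition format, but the helper `fargate_task_definition`, the family name, and the image name are hypothetical, and the CPU/memory table is a deliberately partial subset of the combinations Fargate accepts. Settings a real task usually needs, such as an execution role and logging, are omitted.

```python
# Partial table of Fargate CPU units -> allowed memory values (MiB).
# Assumption: only a subset of valid combinations is listed here.
FARGATE_SIZES = {
    "256": {"512", "1024", "2048"},
    "512": {"1024", "2048", "3072", "4096"},
}


def fargate_task_definition(family, image, cpu="256", memory="512"):
    """Build a minimal ECS task definition dict for the Fargate launch type."""
    if memory not in FARGATE_SIZES.get(cpu, set()):
        raise ValueError(f"unsupported cpu/memory combination: {cpu}/{memory}")
    return {
        "family": family,
        "requiresCompatibilities": ["FARGATE"],
        "networkMode": "awsvpc",  # Fargate tasks use the awsvpc network mode
        "cpu": cpu,               # task-level sizing, given as strings
        "memory": memory,
        "containerDefinitions": [
            {"name": family, "image": image, "essential": True}
        ],
    }


# Hypothetical model-serving task.
task_def = fargate_task_definition("model-scorer", "example/model-scorer:latest")
print(task_def["requiresCompatibilities"], task_def["cpu"], task_def["memory"])
```

A dictionary of this shape could then be registered with ECS, for example through the `register_task_definition` call of the boto3 ECS client, although the article does not say how Zeta provisions its tasks.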

In 2023, Zeta Global restructured its machine learning teams, moving from traditional vertical teams to a horizontal structure built around pods with diverse skills. The change aimed to accelerate project delivery by fostering collaboration among teams with different specialties.

As AI and ML applications multiplied across teams, the need for a centralized MLOps platform became evident. To meet that need, Zeta developed an MLOps platform based on key open-source tools like Airflow, Feast, dbt, and MLflow, hosted on Amazon ECS with tasks running on Fargate. This platform simplifies the ML workflow, from data ingestion to model deployment, making ML projects easier to manage efficiently and at scale.

Zeta’s future strategy includes expanding BYOM (Bring Your Own Model) capabilities for external clients and reducing the learning curve for data scientists. This involves developing standardized APIs for integrating external models, creating a unified interface to simplify interactions with specialized tools, and providing comprehensive training and support resources.
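One way to picture a standardized integration point for external models is a small interface that every client model must satisfy. The sketch below uses `typing.Protocol` for that purpose. Everything in it is hypothetical: the `ExternalModel` interface, the `score_with` helper, and the toy `ThresholdModel` are illustrations of the idea, not Zeta's BYOM API, which the article does not specify.

```python
from typing import Protocol, Sequence, runtime_checkable


@runtime_checkable
class ExternalModel(Protocol):
    """Hypothetical contract: one float score per input row."""

    def predict(self, rows: Sequence[dict]) -> list[float]: ...


class ThresholdModel:
    """Toy client model: scores 1.0 when spend exceeds a threshold."""

    def __init__(self, threshold: float) -> None:
        self.threshold = threshold

    def predict(self, rows: Sequence[dict]) -> list[float]:
        return [1.0 if row["spend"] > self.threshold else 0.0 for row in rows]


def score_with(model: object, rows: Sequence[dict]) -> list[float]:
    """Validate a client model against the contract, then score the rows."""
    if not isinstance(model, ExternalModel):
        raise TypeError("model must implement predict(rows)")
    scores = model.predict(rows)
    if len(scores) != len(rows):
        raise ValueError("model must return exactly one score per row")
    return scores


rows = [{"spend": 80.0}, {"spend": 10.0}]
print(score_with(ThresholdModel(50.0), rows))
```

Because the check is structural, a client model does not need to inherit from any platform class; it only needs a compatible `predict` method, which keeps the integration surface small.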

In summary, the integration of tools like Airflow, Feast, dbt, and MLflow into an MLOps platform hosted on Amazon ECS with AWS Fargate emerges as a robust solution for managing the ML lifecycle at Zeta Global. This setup not only streamlines operations, but also enhances scalability and efficiency, allowing data science teams to focus on innovation rather than infrastructure management.

Referrer: MiMub in Spanish