The recent evolution of generative artificial intelligence has significantly complicated the process of building, training, and deploying machine learning models. This work now demands deep specialization, access to large datasets, and the management of extensive computing clusters. Many companies must also write specialized code for distributed training, continuously optimize their models, and resolve hardware problems, all within tight deadlines and budgets.

To simplify this landscape, Amazon Web Services (AWS) has announced Amazon SageMaker HyperPod, a tool that promises to transform the way companies approach artificial intelligence development and deployment. At the AWS re:Invent 2023 conference, Andy Jassy, CEO of Amazon, highlighted that this innovation accelerates the training of machine learning models by distributing and parallelizing workloads across multiple advanced processors, including AWS’s Trainium chips and GPUs. HyperPod also continuously monitors the infrastructure for faults, repairs them automatically, and resumes work smoothly.
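The idea of resuming work after a fault can be illustrated with a generic checkpoint-and-resume loop. The sketch below is an assumption for illustration only: it does not use any SageMaker HyperPod API, and the names `train`, `run_with_auto_resume`, and `fail_at` are hypothetical. It shows how a job that saves its state after every step can pick up where it stopped once a failed node has been replaced.

```python
import json
import os
import tempfile


def train(total_steps, ckpt_path, fail_at=None):
    """Toy training loop that resumes from the last checkpoint if one exists.

    `fail_at` is a hypothetical knob that simulates a hardware fault at a given step.
    """
    state = {"step": 0, "loss": 1.0}
    if os.path.exists(ckpt_path):
        # Resume: load the last saved state instead of starting from step 0.
        with open(ckpt_path) as f:
            state = json.load(f)

    while state["step"] < total_steps:
        if fail_at is not None and state["step"] == fail_at:
            raise RuntimeError("simulated hardware fault")
        state["step"] += 1
        state["loss"] *= 0.9  # stand-in for a real optimizer update

        # Write to a temporary file and rename, so a crash never leaves
        # a half-written checkpoint behind.
        tmp_path = ckpt_path + ".tmp"
        with open(tmp_path, "w") as f:
            json.dump(state, f)
        os.replace(tmp_path, ckpt_path)
    return state


def run_with_auto_resume(total_steps, ckpt_path, fail_at):
    """Run the job; on a fault, restart it so it continues from the checkpoint."""
    try:
        return train(total_steps, ckpt_path, fail_at=fail_at)
    except RuntimeError:
        # In a managed cluster the faulty node would be replaced here.
        return train(total_steps, ckpt_path)


if __name__ == "__main__":
    with tempfile.TemporaryDirectory() as workdir:
        path = os.path.join(workdir, "ckpt.json")
        print(run_with_auto_resume(10, path, fail_at=4))
```

In this sketch only the step in progress at the time of the fault is lost; real training jobs usually checkpoint less often and trade some recomputation for lower I/O cost.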

The new features introduced at AWS re:Invent 2024 make SageMaker HyperPod a solution that meets the demands of modern artificial intelligence workloads. It offers persistent, optimized clusters for distributed training and accelerated inference. The tool is being adopted by renowned startups such as Writer and Luma AI, as well as by large corporations like Thomson Reuters and Salesforce, which have significantly sped up the development of their models.

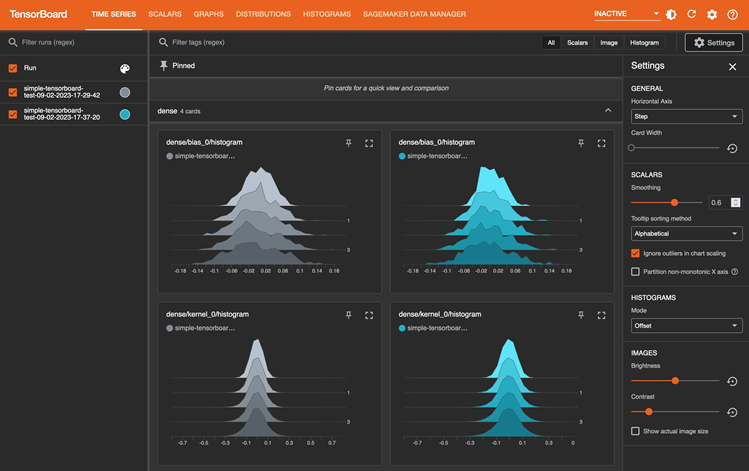

Furthermore, SageMaker HyperPod provides detailed control of the infrastructure, allowing secure connections for advanced training and resource management through Amazon EC2. Because it maintains a pool of dedicated instances and reservations, the tool minimizes downtime in critical situations. Developers can also optimize job management and resource usage with orchestration tools such as Slurm and Amazon EKS.

From a resource management perspective, organizations face significant challenges in governing the computing capacity that model training depends on. SageMaker HyperPod enables companies to maximize resource utilization, prioritize essential tasks, and prevent underutilization. This approach can reduce model development costs by up to 40% and relieves administrators of the need to constantly redistribute resources.
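To make the idea of prioritizing tasks on shared capacity concrete, here is a minimal sketch of a priority-based admission pass. It is not HyperPod's actual scheduling logic, which the article does not describe; the `Job` fields, the `allocate` function, and the greedy policy are all assumptions chosen for illustration.

```python
from dataclasses import dataclass


@dataclass
class Job:
    name: str
    team: str
    priority: int  # higher values are admitted first (hypothetical convention)
    gpus: int      # accelerators the job needs


def allocate(jobs, cluster_gpus):
    """Greedily admit jobs in priority order until capacity runs out.

    Returns (admitted names, waiting names, free accelerators).
    sorted() is stable, so jobs with equal priority keep submission order.
    """
    admitted, waiting = [], []
    free = cluster_gpus
    for job in sorted(jobs, key=lambda j: -j.priority):
        if job.gpus <= free:
            admitted.append(job.name)
            free -= job.gpus
        else:
            waiting.append(job.name)
    return admitted, waiting, free


if __name__ == "__main__":
    queue = [
        Job("eval", "research", priority=1, gpus=4),
        Job("pretrain", "llm", priority=10, gpus=8),
        Job("finetune", "apps", priority=5, gpus=4),
    ]
    admitted, waiting, free = allocate(queue, cluster_gpus=12)
    print("admitted:", admitted, "waiting:", waiting, "free:", free)
```

A policy like this keeps the cluster busy with the most important work first, while lower-priority jobs wait for capacity instead of requiring an administrator to reassign resources by hand.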

Flexible training plans within SageMaker HyperPod let customers specify a completion date and the maximum resource capacity they need, which simplifies resource acquisition and saves weeks of preparation. For example, Hippocratic AI, which focuses on healthcare, has used these plans to efficiently access powerful EC2 P5 instances, facilitating the development of its flagship language model.

In summary, SageMaker HyperPod represents a radical shift in artificial intelligence infrastructure, focusing on intelligent and adaptable resource management. This allows organizations to maximize efficiency and reduce costs. With its focus on integrating both training and inference infrastructures, this service promises to optimize the artificial intelligence lifecycle from development to real-world implementation, playing a crucial role in the ongoing evolution of this technology.

via: MiMub in Spanish