Generative AI is changing the way we interact with technology. From chatbots that converse like humans to image generators that produce striking visuals, it is reshaping many of the tools we use every day. But behind these capabilities lies a vast computational infrastructure whose technical complexity often goes unnoticed.

In this article, we will explore High-Performance Computing (HPC) and the challenges involved in bringing generative AI applications such as digital twins into production. We will also look at the explosive growth in computing demand, the limitations of traditional HPC setups, and the solutions emerging to overcome these obstacles.

Allow me to introduce myself briefly. I am Santosh, with a background in physics. Currently, I lead research and product development at Covalent, where we focus on orchestrating large-scale computing for artificial intelligence, model development, and related domains.

Generative AI is making its way into numerous domains: climate technology, healthcare, software and data processing, business AI, and robotics and digital twins. Today, we will focus on the last of these.

A digital twin is a virtual representation of a physical system or process. It is built by collecting numerical data from the real system and feeding it into a digital model.

For example, in robotics and manufacturing, imagine a large factory with numerous robots operating autonomously. Computer vision models track the locations of robots, people, and objects within the facility, and this numerical data is fed into a database that an AI model can understand and reason over.

With this virtual replica of the physical environment, the AI model can follow what is happening in real time. If an unexpected event occurs, such as a box falling off a shelf, the model can simulate multiple future paths for the robot and recommend the best course of action.
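To make the "simulate several paths, then pick one" idea concrete, here is a minimal Python sketch. It is only an illustration under simplifying assumptions: the `Point` type, the `path_cost` scoring rule, the `clearance` threshold, and the penalty value are all hypothetical, and a real digital twin would rely on a physics or learned simulator rather than straight-line geometry.

```python
import math
from dataclasses import dataclass


@dataclass(frozen=True)
class Point:
    """A 2D position on the factory floor (hypothetical coordinate system)."""
    x: float
    y: float


def path_cost(path, obstacles, clearance=1.0):
    """Path length plus a large penalty for each waypoint too close to an obstacle."""
    length = sum(
        math.dist((a.x, a.y), (b.x, b.y)) for a, b in zip(path, path[1:])
    )
    penalty = sum(
        1000.0  # arbitrary penalty chosen for illustration
        for p in path
        for o in obstacles
        if math.dist((p.x, p.y), (o.x, o.y)) < clearance
    )
    return length + penalty


def recommend_path(candidates, obstacles):
    """Score every candidate path against the twin's current state; return the cheapest."""
    return min(candidates, key=lambda path: path_cost(path, obstacles))


# A box has fallen at (2, 0), directly on the robot's straight route.
box = Point(2.0, 0.0)
straight = [Point(0.0, 0.0), Point(2.0, 0.0), Point(4.0, 0.0)]
detour = [Point(0.0, 0.0), Point(2.0, 2.0), Point(4.0, 0.0)]

best = recommend_path([straight, detour], obstacles=[box])
```

In this toy setup the straight route passes through the fallen box and is penalized, so the detour is the recommended path even though it is longer.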

Another powerful application is in healthcare. Patient data, such as vital signs and other medical readings, could feed a foundation model that gives doctors real-time guidance and recommendations based on the patient’s current condition.

However, bringing this concept to real production or healthcare environments presents numerous technical challenges that must be addressed.

Let’s now turn to the computational power behind these advanced AI applications. A few years ago, giants like Walmart were the biggest spenders on cloud computing services from providers like AWS and GCP, investing hundreds of millions of dollars each year. However, in recent years, new AI startups have emerged as the biggest consumers of these resources.

For example, training GPT-3, the model family behind the original ChatGPT, cost approximately 4 million dollars in computational power alone. Its successor, GPT-4, rose to an estimated 75 million dollars in computing costs. And Google’s recently launched Gemini Ultra accumulated nearly 200 million dollars in computational spending.
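To put those figures in perspective, the short Python sketch below computes how much each model's training cost grew relative to the previous one. The numbers are the rounded estimates quoted above, not exact values, and the variable and function names are purely illustrative.

```python
# Approximate training-compute costs in millions of US dollars, as quoted in the text.
training_cost_musd = {"GPT-3": 4, "GPT-4": 75, "Gemini Ultra": 200}


def growth_factors(costs):
    """Return the cost ratio between each model and the one listed before it."""
    names = list(costs)
    return {
        f"{prev} -> {curr}": costs[curr] / costs[prev]
        for prev, curr in zip(names, names[1:])
    }


factors = growth_factors(training_cost_musd)
for step, factor in factors.items():
    print(f"{step}: about {factor:.1f}x")
```

On these rounded estimates, the jump from the first model to the second is close to 19x, and the overall increase from the first to the third is about 50x.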

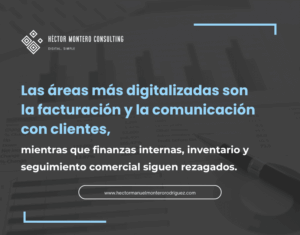

Source: MiMub in Spanish