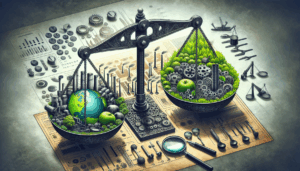

The proliferation of Large Language Models (LLMs) in enterprise IT environments presents new challenges and opportunities in terms of security, responsible artificial intelligence (AI), privacy, and prompt engineering. Risks associated with the use of LLMs, such as biased outputs, privacy violations, and security vulnerabilities, must be mitigated. To address these challenges, organizations must proactively ensure that their use of LLMs aligns with broader principles of responsible AI and prioritize security and privacy. When working with LLMs, organizations should define goals and implement measures to enhance the security of their LLM implementations, just as they do with applicable regulatory compliance.

This involves deploying robust authentication mechanisms, encryption protocols, and optimized prompt designs to identify and counteract prompt injection attempts, prompt filtering, and jailbreaking, which can help increase the reliability of AI-generated outputs in relation to security.

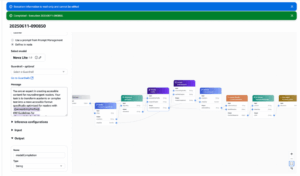

This article discusses existing threats at the prompt level and describes various security mechanisms to mitigate these threats. In our example, we work with Anthropic Claude at Amazon Bedrock, implementing prompt templates that allow us to impose security measures against common threats such as prompt injection. These templates are interoperable and can be customized for other LLMs.

LLMs are trained on an unprecedented scale, with some of the largest models comprising billions of parameters and consuming terabytes of textual data from various sources. This massive scale enables LLMs to develop a rich and nuanced understanding of language, capturing nuances, idioms, and contextual cues that were previously challenging for AI systems.

To utilize these models, services like Amazon Bedrock can be utilized, which provides access to a variety of core models from both Amazon and third-party providers, including Anthropic, Cohere, Meta, among others. Amazon Bedrock allows for experimenting with cutting-edge models, customizing them, and integrating them into generative AI-powered solutions through a single API.

A significant limitation of LLMs is their inability to incorporate knowledge beyond what is present in their training data. Although LLMs excel at capturing patterns and generating coherent text, they often lack access to up-to-date or specialized information, limiting their usefulness in real-world applications. One use case that addresses this limitation is Retrieval-Augmented Generation (RAG). RAG combines the power of LLMs with a retrieval component that can access and incorporate relevant information from external sources, such as knowledge bases, intelligent search systems, and vector databases.

At its core, RAG employs a two-stage process. In the first stage, a retrieval component identifies and retrieves relevant documents or textual passages based on the input query. These are used to augment the original prompt content and passed to an LLM. The LLM then generates a response to the augmented prompt based on the query and retrieved information. This hybrid approach enables RAG to leverage the strengths of both LLMs and retrieval systems, allowing for more precise and informed responses that incorporate up-to-date and specialized knowledge.

LLMs and user-oriented RAG applications, such as question-answering chatbots, are exposed to many security vulnerabilities. Central to the responsible use of LLMs is the mitigation of prompt-level threats through the use of security measures. These can be applied for content filtering and themes in applications powered by Amazon Bedrock, as well as the mitigation of prompt threats through user input labeling and filtering.

Large language models should also employ prompt engineering in AI development processes along with security measures to mitigate prompt injection vulnerabilities and uphold principles of fairness, transparency, and privacy in LLM applications. All of these safeguards, used together, help build a powerful and secure application powered by LLM, protected against common security threats.

In conclusion, we proposed a series of prompt engineering security measures and recommendations to mitigate prompt-level threats, demonstrating the effectiveness of these measures in our security evaluation. We encourage readers to apply these learnings and begin building safer generative AI solutions on Amazon Bedrock.

via: AWS machine learning blog

Source: MiMub in Spanish