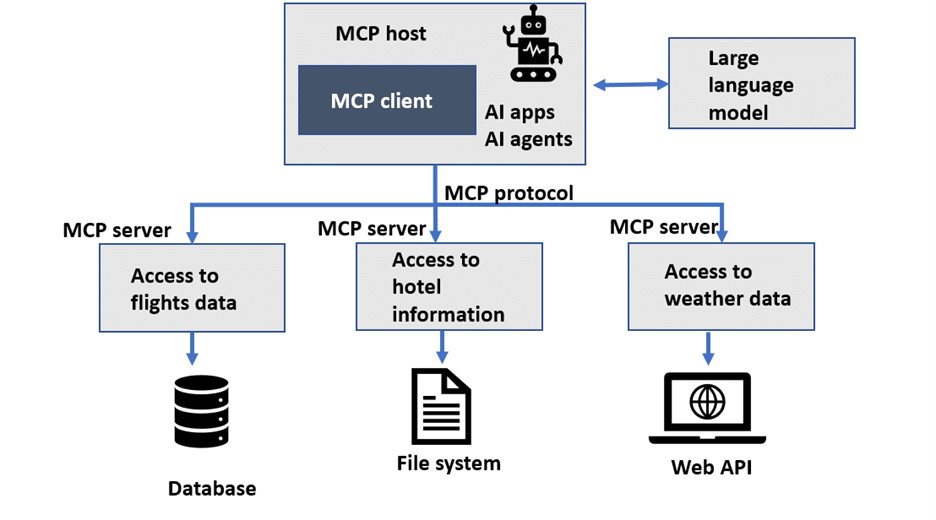

Organizations that deploy agent-based systems face several challenges, particularly when integrating multiple tools and orchestrating workflows. An agent uses function calling to interact with external tools, such as APIs or databases, so that it can perform specific actions and retrieve information it does not have on its own. When each tool is integrated separately, scaling and reusing tools across the organization becomes difficult. Customers who want to deploy agents at scale therefore need a consistent way to integrate these tools, regardless of the orchestration framework they use.

This is where the Model Context Protocol (MCP) comes in. MCP standardizes how channels, agents, tools, and customer data interact, and this standardization improves the user experience by reducing fragmentation across systems. As a result, agent developers can focus on choosing and using the most appropriate tools instead of spending time building custom integrations.

Implementing MCP requires scalable infrastructure to host the MCP servers, as well as an environment that can serve large language models (LLMs). Amazon SageMaker AI is a viable option for hosting these LLMs, because it takes on much of the work of scaling and managing that infrastructure. MCP servers themselves can be deployed on a range of AWS compute services, such as Amazon EC2, Amazon ECS, Amazon EKS, and AWS Lambda.

MCP represents a significant advance over traditional microservices architectures. Microservices offer modularity, but they often require a separate, custom integration for each service. MCP, by contrast, provides a single bidirectional, real-time communication interface for connecting AI systems to external tools, API services, and data sources. That is essential for AI applications that need robust, modular access to many resources.
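To make the protocol side more concrete, here is a minimal, stdlib-only Python sketch of how a server might answer two MCP methods, `tools/list` and `tools/call`, using JSON-RPC 2.0, the message format MCP is built on. It is a simplification under stated assumptions: it skips the `initialize` handshake, capability negotiation, and any transport (stdio or HTTP). The `get_loan_status` tool and its canned reply are hypothetical placeholders for a real API or database call.

```python
import json
from typing import Any, Callable


def get_loan_status(loan_id: str) -> str:
    # Hypothetical tool: a real server would query a loan system or database here.
    return f"Loan {loan_id}: under review"


# Tool table: name -> (description, JSON Schema for the arguments, handler).
TOOLS: dict[str, tuple[str, dict[str, Any], Callable[..., str]]] = {
    "get_loan_status": (
        "Look up the status of a loan application.",
        {
            "type": "object",
            "properties": {"loan_id": {"type": "string"}},
            "required": ["loan_id"],
        },
        get_loan_status,
    ),
}


def _reply(req_id: Any, **payload: Any) -> str:
    # Every JSON-RPC 2.0 response echoes the request id plus a result or an error.
    return json.dumps({"jsonrpc": "2.0", "id": req_id, **payload})


def handle_message(raw: str) -> str:
    """Answer one JSON-RPC 2.0 request for the MCP tools/list or tools/call method."""
    request = json.loads(raw)
    req_id = request.get("id")
    method = request.get("method")
    params = request.get("params") or {}

    if method == "tools/list":
        # Advertise each tool's name, description, and argument schema.
        tools = [
            {"name": name, "description": desc, "inputSchema": schema}
            for name, (desc, schema, _) in TOOLS.items()
        ]
        return _reply(req_id, result={"tools": tools})

    if method == "tools/call":
        name = params.get("name")
        if name not in TOOLS:
            # -32602 is the JSON-RPC 2.0 "Invalid params" code.
            return _reply(req_id, error={"code": -32602, "message": f"Unknown tool: {name}"})
        _, _, handler = TOOLS[name]
        text = handler(**params.get("arguments", {}))
        # Tool results are returned as a list of content items.
        return _reply(req_id, result={"content": [{"type": "text", "text": text}], "isError": False})

    # -32601 is the JSON-RPC 2.0 "Method not found" code.
    return _reply(req_id, error={"code": -32601, "message": f"Method not found: {method}"})


if __name__ == "__main__":
    call = {
        "jsonrpc": "2.0",
        "id": 1,
        "method": "tools/call",
        "params": {"name": "get_loan_status", "arguments": {"loan_id": "A-42"}},
    }
    print(handle_message(json.dumps(call)))
```

Because every tool is described and invoked through the same two methods, an agent can discover and call new tools without a bespoke integration for each one. This is the contrast with per-service microservice integrations drawn above.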

Options for implementing MCP servers include FastAPI and FastMCP. FastMCP is well suited to rapid prototyping and to situations where development speed matters most. FastAPI offers more flexibility and control, which makes it a better fit for complex workflows.
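Part of what makes FastMCP quick to prototype with is its decorator-based style. You write an ordinary typed Python function, and the framework derives the tool's name, description, and input schema from it (in the official MCP Python SDK, for instance, through an `@mcp.tool()` decorator on a `FastMCP` instance). The sketch below imitates that idea with the standard library only, so it runs without installing anything. `ToolRegistry` and `estimate_payment` are hypothetical names, not FastMCP's API, and the type mapping is an assumption that covers only a few scalar types.

```python
import inspect
from typing import Any, Callable

# Simplified mapping from Python annotations to JSON Schema types.
# Unannotated or unrecognized parameters fall back to "string".
_JSON_TYPES = {str: "string", int: "integer", float: "number", bool: "boolean"}


class ToolRegistry:
    """Hypothetical stand-in that imitates decorator-style tool registration."""

    def __init__(self) -> None:
        self.tools: dict[str, dict[str, Any]] = {}
        self._handlers: dict[str, Callable[..., Any]] = {}

    def tool(self, fn: Callable[..., Any]) -> Callable[..., Any]:
        """Register fn as a tool, deriving its input schema from its signature."""
        properties: dict[str, Any] = {}
        required: list[str] = []
        for name, param in inspect.signature(fn).parameters.items():
            properties[name] = {"type": _JSON_TYPES.get(param.annotation, "string")}
            # Parameters without a default value are required arguments.
            if param.default is inspect.Parameter.empty:
                required.append(name)
        self.tools[fn.__name__] = {
            "name": fn.__name__,
            "description": inspect.getdoc(fn) or "",
            "inputSchema": {"type": "object", "properties": properties, "required": required},
        }
        self._handlers[fn.__name__] = fn
        return fn  # Return the function unchanged so it stays directly callable.

    def call(self, name: str, arguments: dict[str, Any]) -> Any:
        """Invoke a registered tool with keyword arguments."""
        return self._handlers[name](**arguments)


registry = ToolRegistry()


@registry.tool
def estimate_payment(principal: float, annual_rate: float, months: int = 360) -> float:
    """Estimate the fixed monthly payment on an amortizing loan."""
    r = annual_rate / 12  # monthly interest rate
    if r == 0:
        return principal / months
    return principal * r / (1 - (1 + r) ** -months)


if __name__ == "__main__":
    print(registry.tools["estimate_payment"]["inputSchema"])
    print(round(registry.call("estimate_payment", {"principal": 100000, "annual_rate": 0.06}), 2))
```

The trade-off mirrors the comparison in the paragraph above: deriving schemas from function signatures keeps simple tools short, while a hand-built FastAPI service gives explicit control over routing, validation, and middleware at the cost of more code.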

With this architecture, organizations can streamline how they integrate AI, reducing the need for custom solutions and avoiding ongoing maintenance overhead. The ability to connect models to business-critical systems becomes increasingly valuable. In loan processing, for example, it can transform the workflow, speed up operations, and provide deeper business insights. As artificial intelligence advances, MCP and SageMaker AI together provide a flexible foundation for future innovation in this field.

via: MiMub (in Spanish)