Amazon SageMaker Pipelines is a workflow orchestration service for machine learning that helps data scientists and developers automate complex, multi-step ML workflows. It offers features for rapid model development and experimentation while sparing teams much of the underlying infrastructure management.

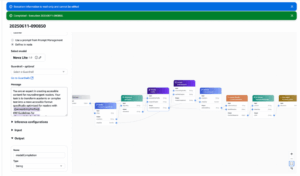

With a Python SDK, SageMaker Pipelines users define complex workflows in code and then visualize and monitor them in SageMaker Studio, which simplifies data preparation and feature engineering. Beyond automating model training and deployment, Pipelines integrates with Amazon SageMaker Automatic Model Tuning, which runs multiple training jobs with different hyperparameter values and selects the configuration that scores best on a user-defined objective metric.
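Automatic Model Tuning supports several search strategies, random search among them. As a rough, standard-library-only illustration of the idea (not the SageMaker API), the sketch below samples hyperparameters at random, scores each trial with an objective metric, and keeps the best one. The objective function `validation_score`, the parameter names, and their ranges are hypothetical stand-ins for what a real training job would report.

```python
import random

# Hypothetical objective standing in for a validation metric (higher is better)
# that a real training job would report. Its peak near lr=0.1, depth=6 is made up.
def validation_score(learning_rate: float, max_depth: int) -> float:
    return -((learning_rate - 0.1) ** 2) * 100 - ((max_depth - 6) ** 2) * 0.01

def random_search(n_trials: int, seed: int = 0):
    """Sample hyperparameters at random and keep the best-scoring trial."""
    rng = random.Random(seed)  # seeded so runs are reproducible
    trials = []
    for _ in range(n_trials):
        params = {
            "learning_rate": rng.uniform(0.001, 0.3),  # continuous range
            "max_depth": rng.randint(2, 10),           # integer range, inclusive
        }
        trials.append((validation_score(**params), params))
    # max() keeps the first trial among any ties
    best_score, best_params = max(trials, key=lambda t: t[0])
    return best_score, best_params, trials

best_score, best_params, trials = random_search(n_trials=20)
print(f"best score {best_score:.4f} with {best_params}")
```

In the managed service each trial is a full training job, and the search strategy (for example, Bayesian or random) is chosen in the tuning job's configuration rather than coded by hand.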

Interest in ensemble models, which combine the results of multiple models to improve prediction accuracy, continues to grow in the machine learning community. Pipelines lets developers quickly set up an end-to-end process for training and deploying such models, and its automation helps keep that process efficient and reproducible.
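Two common ways to combine the outputs of several models are hard voting (take the label most models predict) and soft voting (average the predicted probabilities). The sketch below shows both in plain Python. It is a generic illustration, not the combination method used in the Salesforce case, and the labels in the usage example are hypothetical.

```python
from collections import Counter

def majority_vote(predictions: list[str]) -> str:
    """Hard voting: return the label predicted by the most models.
    On a tie, the label encountered first wins (Counter.most_common ordering)."""
    return Counter(predictions).most_common(1)[0][0]

def soft_vote(prob_dicts: list[dict[str, float]]) -> dict[str, float]:
    """Soft voting: average each label's probability across models.
    A label missing from one model's output counts as probability 0 for that model."""
    labels = {label for probs in prob_dicts for label in probs}
    n = len(prob_dicts)
    return {label: sum(p.get(label, 0.0) for p in prob_dicts) / n for label in labels}

# Hypothetical outputs from three models for the same input.
hard = majority_vote(["migration", "analytics", "migration"])
soft = soft_vote([
    {"migration": 0.7, "analytics": 0.3},
    {"migration": 0.4, "analytics": 0.6},
    {"migration": 0.6, "analytics": 0.4},
])
best_label = max(soft, key=soft.get)
print(hard, best_label)
```

Soft voting uses each model's confidence, so one very confident model can outweigh two uncertain ones; hard voting only counts labels.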

A recent case illustrates an ensemble model trained and deployed with SageMaker Pipelines. Aimed at sales representatives who bring in new clients through Salesforce, the model uses unsupervised learning to automatically identify the specific use cases in each opportunity. Identifying these use cases matters because opportunities vary widely by industry and in how their annualized revenue is distributed; knowing the use case improves analytics and makes sales recommendation systems more useful.

The approach considered topic models such as Latent Semantic Analysis (LSA), Latent Dirichlet Allocation (LDA), and BERTopic, of which BERTopic proved the most effective at overcoming problems common to the other two. The solution chains three BERTopic models sequentially in a hierarchical scheme, using UMAP for dimensionality reduction and BIRCH for clustering, with the aim of producing accurate and representative topics.
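BIRCH scales to large datasets because it does not keep every point in memory: it summarizes each subcluster as a clustering feature (CF), the triple of point count N, linear sum LS, and sum of squared norms SS. CFs add component-wise when subclusters merge, and a subcluster's centroid and radius follow directly from them. The sketch below shows only this CF bookkeeping, not the full CF-tree with its branching factor and distance threshold; scikit-learn provides a complete implementation as `sklearn.cluster.Birch`. The class and method names here are illustrative.

```python
import math
from dataclasses import dataclass

@dataclass
class ClusteringFeature:
    """BIRCH clustering feature (N, LS, SS) summarizing a set of points."""
    n: int             # number of points
    ls: list[float]    # linear sum of the points (a vector)
    ss: float          # sum of squared Euclidean norms of the points

    @classmethod
    def from_point(cls, point: list[float]) -> "ClusteringFeature":
        return cls(1, list(point), sum(x * x for x in point))

    def merge(self, other: "ClusteringFeature") -> "ClusteringFeature":
        # CFs are additive, so merging subclusters never revisits raw points.
        return ClusteringFeature(
            self.n + other.n,
            [a + b for a, b in zip(self.ls, other.ls)],
            self.ss + other.ss,
        )

    def centroid(self) -> list[float]:
        return [x / self.n for x in self.ls]

    def radius(self) -> float:
        # Root-mean-square distance of the points from the centroid:
        # R^2 = SS/N - ||LS/N||^2
        c = self.centroid()
        r2 = self.ss / self.n - sum(x * x for x in c)
        return math.sqrt(max(r2, 0.0))  # clamp tiny negative rounding errors

# Four corners of a 2x2 square, absorbed one point at a time.
cf = ClusteringFeature.from_point([0.0, 0.0])
for p in ([2.0, 0.0], [0.0, 2.0], [2.0, 2.0]):
    cf = cf.merge(ClusteringFeature.from_point(p))
print(cf.centroid(), cf.radius())  # [1.0, 1.0] 1.4142135623730951
```

Because each point is folded into a fixed-size summary, memory grows with the number of subclusters rather than the number of documents.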

Implementing this strategy poses challenges, from preprocessing the data to improve model performance to providing a scalable compute environment that can handle large data volumes. Under these conditions, the system's success depends on a flexible, adaptable pipeline.
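The source does not say which preprocessing the solution applied. As one plausible example of cleaning free-text opportunity descriptions before topic modeling, the sketch below lowercases text, strips punctuation, removes stopwords, and drops documents too short to carry a topic signal. The stopword list, the `min_tokens` threshold, and the function name are assumptions for illustration.

```python
import re
from typing import Optional

# Hypothetical, deliberately tiny stopword list; a real pipeline would use a
# fuller list tuned to the domain.
STOPWORDS = {"the", "a", "an", "and", "or", "to", "of", "for", "in", "on", "with"}

def preprocess(text: str, min_tokens: int = 3) -> Optional[str]:
    """Normalize one document; return None if too few tokens remain."""
    text = text.lower()
    # ASCII-only pattern: replaces every character that is not a-z, 0-9, or
    # whitespace with a space, so accented letters would be dropped too.
    text = re.sub(r"[^a-z0-9\s]", " ", text)
    tokens = [t for t in text.split() if t not in STOPWORDS]
    if len(tokens) < min_tokens:
        return None
    return " ".join(tokens)

raw_docs = ["Migrate the data warehouse to the cloud!", "N/A"]
cleaned = [d for d in map(preprocess, raw_docs) if d is not None]
print(cleaned)
```

Embedding-based models such as BERTopic often need less aggressive cleanup than bag-of-words models like LDA, so how much preprocessing helps is worth testing rather than assuming.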

In the solution's architecture, SageMaker Studio serves as the entry point, providing a collaborative environment for building, training, and deploying large-scale machine learning models. An automated workflow of processing, training, and model steps coordinates each stage of the process.
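SageMaker Pipelines represents a pipeline as a directed acyclic graph (DAG) of steps and starts each step once the steps it depends on have finished. The sketch below mimics that scheduling with Python's standard `graphlib` module (not the SageMaker SDK), grouping steps into batches whose members could run concurrently. The step names, including the `evaluate_model` step, are hypothetical.

```python
from graphlib import TopologicalSorter

# Hypothetical pipeline: each key maps a step to the set of steps it depends on.
pipeline = {
    "process_data": set(),
    "train_model": {"process_data"},
    "evaluate_model": {"train_model", "process_data"},
    "create_model": {"train_model"},
}

def execution_batches(graph: dict[str, set[str]]) -> list[list[str]]:
    """Group steps into batches; steps within one batch do not depend on
    each other and could run in parallel."""
    sorter = TopologicalSorter(graph)
    sorter.prepare()  # raises graphlib.CycleError if the graph has a cycle
    batches = []
    while sorter.is_active():
        ready = sorted(sorter.get_ready())  # sorted only for deterministic output
        batches.append(ready)
        sorter.done(*ready)
    return batches

for i, batch in enumerate(execution_batches(pipeline), start=1):
    print(i, batch)
```

In the actual SDK, such dependencies are usually inferred from data passed between steps, for example a training step that consumes a processing step's outputs, rather than declared as an explicit dictionary.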

This case shows how Amazon SageMaker Pipelines can help organizations address the automation and scalability challenges of their artificial intelligence and machine learning initiatives.

via: MiMub in Spanish