More and more organizations are building generative artificial intelligence applications on multiple large language models (LLMs) rather than one. A single model may be highly capable, yet it may not serve every use case or meet every performance requirement equally well. A multi-LLM strategy lets companies select the right model for each task, adapt to different domains, and optimize for specific aspects such as cost, latency, or quality. The result is more robust, versatile, and efficient applications that respond better to the diverse needs of users and to business goals.

Implementing an application that uses multiple LLMs presents the challenge of routing each user request to the appropriate model for the task at hand. The routing logic must correctly interpret the message and assign it to one of the predefined tasks, and then direct it to the corresponding LLM. This approach allows managing various types of tasks within the same application, each with its own complexities and domains.

Various applications could benefit from the multi-LLM approach. For example, a marketing content creation application may require text generation, summarization, sentiment analysis, and information extraction. As the interactions an application supports grow more complex, it becomes crucial to design it for varying levels of task complexity, depending on the user’s needs. A text summarization AI assistant, for instance, must effectively handle both simple and complex queries depending on the type of document it is working with.

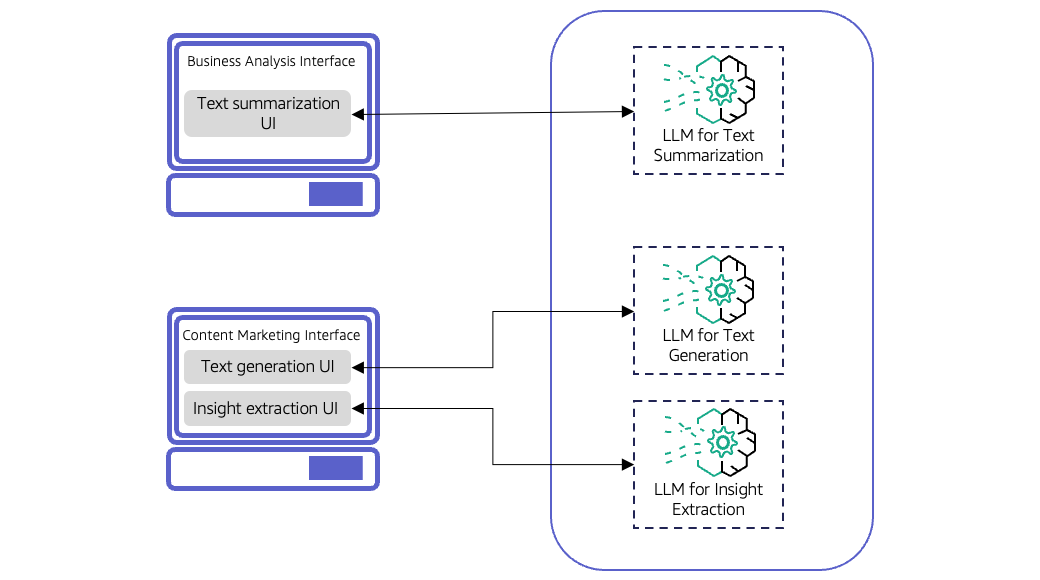

Two main approaches for routing requests to different LLMs stand out: static and dynamic routing. Static routing implements a separate user interface (UI) component for each task, which allows for a modular and flexible design. However, adding new tasks may require the development of additional components. Dynamic routing, used in virtual assistants and chatbots, instead intercepts requests through a single UI component and directs each one to the LLM that best fits the requested task.
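
To make the static case concrete, here is a minimal sketch in which each UI component already knows its task, so routing reduces to a fixed lookup. The task names come from the marketing example above; the model identifiers (`model-small`, `model-medium`, `model-large`) and the `route_static` function are hypothetical placeholders, not real model names or part of any library.

```python
from typing import Dict

# Assumption: placeholder model identifiers, chosen only for illustration.
TASK_TO_MODEL: Dict[str, str] = {
    "text_generation": "model-large",
    "summarization": "model-small",
    "sentiment_analysis": "model-small",
    "information_extraction": "model-medium",
}


def route_static(task: str) -> str:
    """Return the model configured for a task that the UI selected explicitly."""
    try:
        return TASK_TO_MODEL[task]
    except KeyError:
        # A new task needs a new table entry (and, in a real app, a new UI component).
        raise ValueError(f"No model configured for task {task!r}") from None
```

The lookup is cheap and predictable, but the `ValueError` branch shows the limitation noted above: supporting a new task means changing the application itself.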

Dynamic routing techniques include LLM-assisted routing, which uses a classifier LLM to make routing decisions and offers finer classifications at a higher cost. Another technique is semantic routing, which represents input messages as numerical vectors and measures their similarity to predefined task categories. It is particularly effective for applications that must constantly adapt to new task categories.
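
The semantic variant can be sketched as follows. This is an illustration under loud assumptions: the `embed` function is a toy bag-of-words counter standing in for a real embedding model, and the reference phrases in `TASK_EXAMPLES` are invented for the example. Only the overall shape (embed the message, compare it with each task category, pick the closest) reflects the technique described above.

```python
import math
import re
from collections import Counter
from typing import Tuple

# Assumption: hypothetical reference phrases, one per predefined task category.
TASK_EXAMPLES = {
    "summarization": "summarize this document into a short summary of key points",
    "sentiment_analysis": "is this review positive or negative sentiment feeling",
    "information_extraction": "extract names dates and amounts from this text",
}


def embed(text: str) -> Counter:
    """Toy stand-in for an embedding model: a bag-of-words count vector."""
    return Counter(re.findall(r"[a-z]+", text.lower()))


def cosine(a: Counter, b: Counter) -> float:
    """Cosine similarity between two sparse count vectors."""
    dot = sum(a[token] * b[token] for token in a)
    norm_a = math.sqrt(sum(v * v for v in a.values()))
    norm_b = math.sqrt(sum(v * v for v in b.values()))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0


def route_semantic(message: str) -> Tuple[str, float]:
    """Return the closest task category and its similarity score."""
    query = embed(message)
    scores = {
        task: cosine(query, embed(example))
        for task, example in TASK_EXAMPLES.items()
    }
    best = max(scores, key=scores.get)
    return best, scores[best]
```

Adding a task category here only requires a new entry in `TASK_EXAMPLES`, which is why this style of routing adapts easily to new categories.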

A hybrid approach that combines both techniques is also possible, giving routing that is more robust and adapts better to the diverse needs of users. Implementing a dynamic routing system requires careful analysis of costs, latency, and maintenance complexity, as well as continuous evaluation of the performance of the models used.
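
One possible way to combine the two techniques, offered only as a sketch, is to try the cheaper semantic router first and call the classifier LLM only when the similarity score is low. The source does not prescribe this ordering. The `route_hybrid` function, the `threshold` value, and both stub routers are hypothetical; a real system would plug in an actual embedding-based router and an actual LLM call.

```python
from typing import Callable, Tuple


def route_hybrid(
    message: str,
    semantic_router: Callable[[str], Tuple[str, float]],
    llm_classifier: Callable[[str], str],
    threshold: float = 0.5,  # assumption: would be tuned on real traffic
) -> Tuple[str, str]:
    """Return (task, strategy), where strategy records which router decided."""
    task, score = semantic_router(message)
    if score >= threshold:
        return task, "semantic"
    # Low confidence: pay for the slower, finer-grained classifier LLM.
    return llm_classifier(message), "llm"


# Stubs that stand in for real components so the sketch runs on its own.
def fake_semantic_router(message: str) -> Tuple[str, float]:
    confident = "summarize" in message.lower()
    return "summarization", 0.9 if confident else 0.1


def fake_llm_classifier(message: str) -> str:
    return "sentiment_analysis"
```

Recording which strategy handled each request makes it easier to track how often the costlier path is taken, which feeds the cost and latency analysis mentioned above.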

Organizations are starting to explore platforms like Amazon Bedrock, which offers a fully managed LLM service, facilitating intelligent routing of requests to different models. Amazon Bedrock allows developers to focus on building applications while optimizing costs and response quality, sometimes achieving up to a 30% reduction in operating costs.

In conclusion, using multiple LLMs in generative artificial intelligence applications not only expands organizational capabilities but also enhances the user experience. However, a successful implementation depends on carefully weighing the routing approach against the cost, latency, and maintenance needs of each application.

Referrer: MiMub in Spanish