Advances in artificial intelligence (AI) are changing how development teams work on platforms like GitHub. Large language models (LLMs) are increasingly being applied to real-world problems, although putting them into practice still poses many challenges.

To bridge the gap between theory and practice, AI agents are emerging as a key technology. These agents use foundation models available through Amazon Bedrock as a cognitive engine that interprets human requests and generates appropriate responses. Combined with orchestration layers and agent frameworks, these models let organizations build applications that not only understand context but also make decisions and take actions autonomously.
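To make this division of labor concrete, here is a minimal, dependency-free sketch of an agent loop. It assumes a stubbed model in place of a real Amazon Bedrock call. The model decides whether to call a tool or to answer, and the orchestration layer executes the tool and feeds the result back. `stub_model`, `TOOLS`, `get_issue`, `Step`, and `run_agent` are hypothetical names used only for illustration. They are not part of any AWS or agent-framework API.

```python
from dataclasses import dataclass
from typing import Callable, Optional

# Hypothetical tool registry: a real agent would call GitHub, a shell, a test runner, etc.
TOOLS: dict[str, Callable[[str], str]] = {
    "get_issue": lambda issue_id: f"Issue {issue_id}: test_parse fails on empty input",
}


@dataclass
class Step:
    """The model's decision: call a tool, or return a final answer."""
    tool: Optional[str] = None
    argument: str = ""
    answer: Optional[str] = None


def stub_model(history: list[str]) -> Step:
    """Hypothetical stand-in for a foundation model (e.g. one served by Amazon Bedrock).

    It requests the issue once, then answers using the latest tool result.
    """
    tool_results = [h for h in history if h.startswith("tool:")]
    if not tool_results:
        return Step(tool="get_issue", argument="42")
    return Step(answer="Plan: reproduce and fix. Context -> " + tool_results[-1][len("tool:"):])


def run_agent(request: str, model: Callable[[list[str]], Step] = stub_model, max_steps: int = 5) -> str:
    """Ask the model what to do, execute any tool it picks, and repeat until it answers."""
    history = [f"user:{request}"]
    for _ in range(max_steps):
        step = model(history)
        if step.answer is not None:
            return step.answer
        # The orchestration layer, not the model, executes the chosen action.
        result = TOOLS[step.tool](step.argument)
        history.append(f"tool:{result}")
    raise RuntimeError("agent did not finish within max_steps")


print(run_agent("Why is issue 42 failing?"))
```

A real model would plug in by replacing `stub_model` with a function that calls it. The loop and the `max_steps` guard would stay the same.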

For teams that want to streamline their processes without building custom agents, Amazon Q Developer offers native integration with GitHub, letting users generate, review, and transform code efficiently. Teams with specific requirements can instead build custom solutions with Amazon Bedrock and one of several agent frameworks.

Despite significant progress, the development and deployment of AI agents still face barriers that limit their effectiveness and wider adoption. One of the most significant is the lack of a standard way to integrate tools, which forces developers to maintain numerous custom integrations and handle their edge cases.

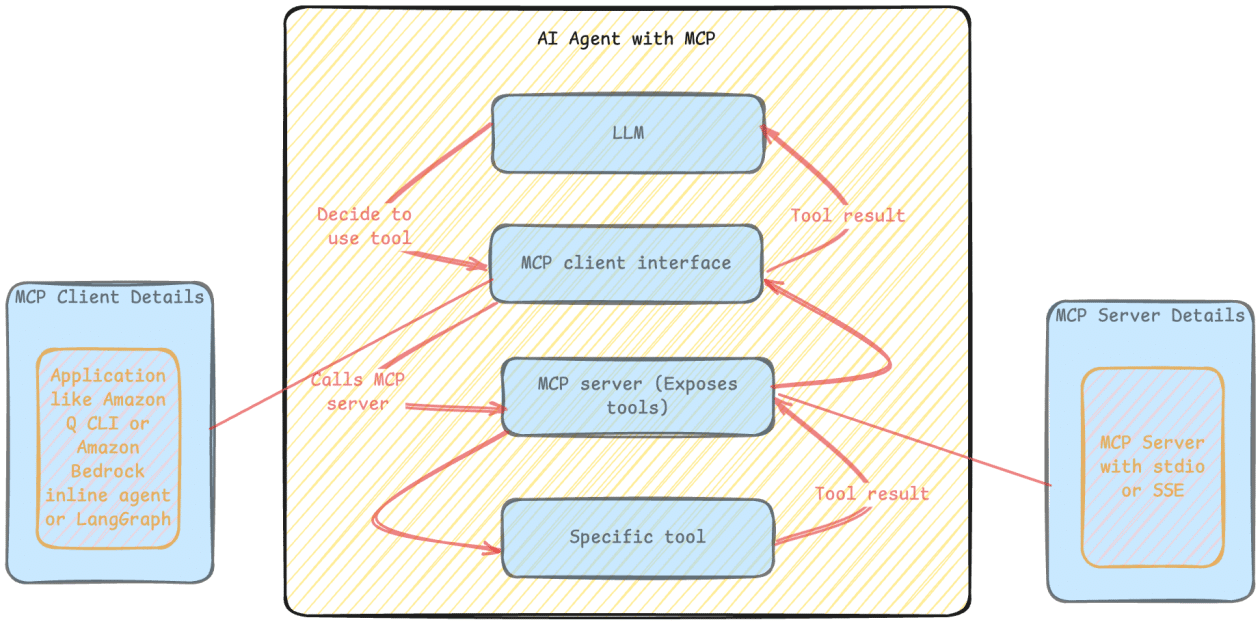

The Model Context Protocol (MCP) addresses these limitations with a standardized protocol that changes how context is managed and tools are integrated in enterprise environments. This reduces development effort and enables more complex usage patterns, such as tool chaining and parallel execution.
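MCP messages use JSON-RPC 2.0. Servers expose tools that clients discover with `tools/list` and invoke with `tools/call`. The sketch below builds `tools/call` requests by hand and sends them to a hypothetical in-process stand-in for an MCP server. It illustrates the two usage patterns mentioned above:

* **Tool chaining:** one call's output drives the next calls.
* **Parallel execution:** independent calls run concurrently through `asyncio.gather`.

`fake_server`, `FAKE_TOOLS`, `list_open_issues`, `get_issue`, and `decode` are illustrative names, not tools of any real server. The sketch uses neither the official MCP SDK nor a real transport. For convenience, it also assumes the server JSON-encodes each result inside a single text content item.

```python
import asyncio
import itertools
import json

_request_ids = itertools.count(1)


def tools_call(name: str, arguments: dict) -> dict:
    """Build an MCP `tools/call` request as a JSON-RPC 2.0 message."""
    return {
        "jsonrpc": "2.0",
        "id": next(_request_ids),
        "method": "tools/call",
        "params": {"name": name, "arguments": arguments},
    }


# Hypothetical tools exposed by a stand-in server (not a real GitHub MCP server).
FAKE_TOOLS = {
    "list_open_issues": lambda args: [101, 102],
    "get_issue": lambda args: f"issue #{args['number']} in {args['repo']}",
}


async def fake_server(request: dict) -> dict:
    """Hypothetical in-process server: dispatch a tools/call request and wrap the result."""
    await asyncio.sleep(0)  # yield to the event loop, as real I/O would
    params = request["params"]
    result = FAKE_TOOLS[params["name"]](params["arguments"])
    return {
        "jsonrpc": "2.0",
        "id": request["id"],
        "result": {"content": [{"type": "text", "text": json.dumps(result)}]},
    }


def decode(response: dict):
    """Read back the JSON this fake server packs into the first text content item."""
    return json.loads(response["result"]["content"][0]["text"])


async def triage(repo: str) -> list:
    # Tool chaining: the first call's output determines the next calls.
    numbers = decode(await fake_server(tools_call("list_open_issues", {"repo": repo})))
    # Parallel execution: fetch all issues concurrently; gather keeps input order.
    responses = await asyncio.gather(
        *(fake_server(tools_call("get_issue", {"repo": repo, "number": n})) for n in numbers)
    )
    return [decode(r) for r in responses]


print(asyncio.run(triage("octo/demo")))
```

Because every tool is called with the same request shape, the agent code does not change when a new tool is added to the server. This is the kind of per-integration effort the protocol aims to remove.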

Combining Amazon Bedrock models, MCP's context management capabilities, and LangGraph's orchestration yields agents that can perform increasingly complex tasks more reliably. This could transform the developer experience, for example by letting GitHub issues be analyzed and resolved automatically overnight.
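LangGraph models a workflow as a graph of nodes that update a shared state. The nodes are connected by edges, which can be conditional. The dependency-free sketch below imitates that idea in plain Python for the overnight issue-resolution scenario. It is not LangGraph's API; LangGraph provides its own `StateGraph` class for this. The nodes `analyze`, `propose_fix`, and `run_tests` are hypothetical stand-ins for model and tool calls. The retry limit of three attempts is an arbitrary assumption.

```python
from typing import Callable, TypedDict


class IssueState(TypedDict):
    issue: str
    patch: str
    attempts: int
    tests_pass: bool


# Hypothetical nodes: a real agent would call a Bedrock model and MCP tools here.
def analyze(state: IssueState) -> IssueState:
    return {**state, "issue": state["issue"].strip()}


def propose_fix(state: IssueState) -> IssueState:
    return {**state, "patch": f"fix for: {state['issue']}", "attempts": state["attempts"] + 1}


def run_tests(state: IssueState) -> IssueState:
    # Simulated test run: the first attempt fails, the second passes.
    return {**state, "tests_pass": state["attempts"] >= 2}


def after_tests(state: IssueState) -> str:
    # Conditional edge: finish on success, otherwise retry up to 3 attempts, then stop.
    if state["tests_pass"]:
        return "END"
    return "propose_fix" if state["attempts"] < 3 else "END"


NODES: dict[str, Callable[[IssueState], IssueState]] = {
    "analyze": analyze,
    "propose_fix": propose_fix,
    "run_tests": run_tests,
}
EDGES: dict[str, Callable[[IssueState], str]] = {
    "analyze": lambda s: "propose_fix",
    "propose_fix": lambda s: "run_tests",
    "run_tests": after_tests,
}


def run_graph(state: IssueState, start: str = "analyze") -> IssueState:
    """Run nodes, following edges, until an edge routes to END."""
    node = start
    while node != "END":
        state = NODES[node](state)
        node = EDGES[node](state)
    return state


final = run_graph({"issue": "  crash on empty config ", "patch": "", "attempts": 0, "tests_pass": False})
print(final["attempts"], final["tests_pass"], final["patch"])
```

The conditional edge lets the workflow loop back and retry a fix unattended. The `attempts` counter caps how many times it tries before stopping.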

Recent progress in LLMs, particularly in code generation, marks a turning point. With agents, teams can automate simple tasks such as bug fixes or dependency updates. Amazon Bedrock, with its unified API and high performance, and LangGraph, which simplifies workflow orchestration, are among the tools enabling this shift toward greater automation in development.

MCP enables secure, bidirectional connections between data sources and AI-powered tools, which simplifies task automation. Its integration with GitHub promises to transform development workflows, boosting productivity while also addressing existing limitations of AI agents.

Given the potential for collaboration between humans and machines, the future of automation in software development looks full of opportunities, provided the related challenges are addressed effectively.

via: MiMub (in Spanish)