Earnings calls are crucial events that provide transparency into a company’s financial health and prospects. They accompany earnings reports, which detail the company’s finances over a specific period, including revenue, net income, earnings per share, the balance sheet, and cash flow. Earnings calls themselves are live conferences in which executives present an overview of the results, discuss achievements and challenges, and provide guidance for upcoming periods.

These disclosures are vital to capital markets and can significantly influence stock prices. Investors and analysts closely monitor key metrics such as revenue growth, earnings per share, margins, cash flow, and guidance to assess a company’s performance against peers and industry trends. Growth rates and profit margins influence the premium, or valuation multiple, that investors are willing to pay for a company’s stock, which in turn affects stock returns and price movements.

Earnings calls also let investors look for clues about a company’s future. Companies often use these events to announce new products, emerging technologies, mergers and acquisitions, and investments in new themes and market trends. These details can signal potential growth opportunities to investors, analysts, and portfolio managers.

Earnings call scripts have traditionally followed similar templates, so writing them from scratch every quarter is a repetitive task. Generative artificial intelligence (AI) models, by contrast, can learn these templates and produce coherent scripts when given quarterly financial data. With generative AI, companies can streamline the creation of first drafts of earnings call scripts for a new quarter by combining repeatable templates with information about performance and business highlights. Company executives can then review the initial draft generated by a large language model (LLM) and refine and personalize it.

Amazon Bedrock offers a simple way to build and scale generative AI applications with foundation models (FMs) and LLMs. Amazon Bedrock is a fully managed service that offers a choice of high-performing FMs from leading AI companies such as AI21 Labs, Anthropic, Cohere, Meta, Mistral AI, Stability AI, and Amazon. Customizing models can help deliver differentiated and personalized user experiences: to adapt FMs to specific tasks, you can fine-tune them with your own labeled datasets in a few steps.

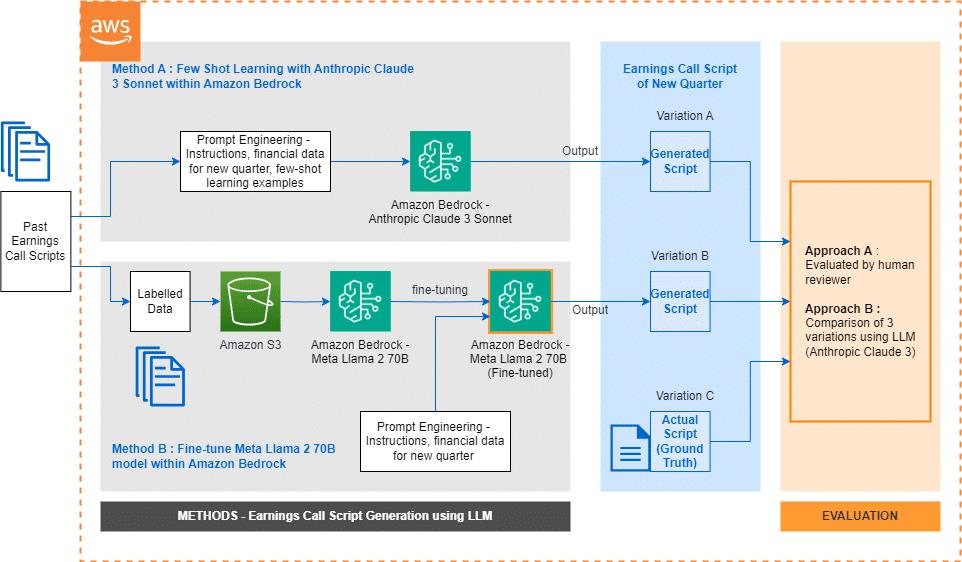

This article demonstrates how to generate the first draft of an earnings call script with LLMs using two methods, few-shot learning and fine-tuning. We evaluate the generated scripts and the methods along several dimensions (completeness, hallucinations, writing style, ease of use, and cost) and present our findings.

We apply two methods to generate the first draft of an earnings call script for the new quarter using LLMs:

Prompt engineering with few-shot learning: We include examples of past earnings call scripts in a prompt to Anthropic Claude 3 Sonnet on Amazon Bedrock and ask it to generate an earnings call script for a new quarter.

Fine-tuning: We fine-tune Meta Llama 2 70B on Amazon Bedrock using labeled input/output pairs from past earnings call scripts, then use the customized model to generate an earnings call script for a new quarter.
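The few-shot method can be sketched as follows. This is a minimal illustration, not the authors' prompt: the tuple structure of `examples`, the XML-style tags, and the helper names `build_few_shot_prompt`, `build_claude_request`, and `generate_script` are all hypothetical. The request body follows the Anthropic Messages format that Amazon Bedrock accepts for Claude 3 models, and the call goes through the `invoke_model` operation of a boto3 `bedrock-runtime` client.

```python
import json

# Claude 3 Sonnet model ID on Amazon Bedrock (check availability in your Region).
CLAUDE_3_SONNET_ID = "anthropic.claude-3-sonnet-20240229-v1:0"


def build_few_shot_prompt(examples, new_quarter_data):
    """Build a few-shot prompt from past (quarter, financial data, script) tuples.

    The tuple layout and tag names are hypothetical choices for illustration.
    """
    parts = [
        "You are drafting an earnings call script for a public company.",
        "Follow the structure and tone of the example scripts below.",
    ]
    for quarter, data, script in examples:
        parts.append(
            f'<example quarter="{quarter}">\n<data>\n{data}\n</data>\n'
            f"<script>\n{script}\n</script>\n</example>"
        )
    # The new quarter's figures go last, after all examples.
    parts.append(f"<new_quarter_data>\n{new_quarter_data}\n</new_quarter_data>")
    parts.append(
        "Write the earnings call script for the new quarter. "
        "Use only the figures provided in <new_quarter_data>."
    )
    return "\n\n".join(parts)


def build_claude_request(prompt, max_tokens=4096, temperature=0.2):
    """Return a JSON request body in the Anthropic Messages format used on Bedrock."""
    return json.dumps({
        "anthropic_version": "bedrock-2023-05-31",
        "max_tokens": max_tokens,
        "temperature": temperature,
        "messages": [
            {"role": "user", "content": [{"type": "text", "text": prompt}]}
        ],
    })


def generate_script(bedrock_runtime, prompt):
    """Send the prompt to Claude 3 Sonnet via a boto3 'bedrock-runtime' client."""
    response = bedrock_runtime.invoke_model(
        modelId=CLAUDE_3_SONNET_ID,
        body=build_claude_request(prompt),
        contentType="application/json",
        accept="application/json",
    )
    payload = json.loads(response["body"].read())
    # Claude 3 returns a list of content blocks; the draft is in the first text block.
    return payload["content"][0]["text"]
```

In use, you would create the client with `boto3.client("bedrock-runtime")` and call `generate_script(client, prompt)`. Passing the client in, rather than creating it inside the function, keeps the prompt-building logic testable without AWS credentials. The instruction to use only the provided figures is one simple way to discourage hallucinated numbers; it does not guarantee their absence.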

Both methods use the same dataset of earnings call transcripts spanning multiple quarters: several years of past quarterly transcripts, with one quarter held out as the ground truth for testing and comparison.
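Preparing this dataset can be sketched as below. The source does not say which quarter was held out, so this sketch assumes it is the most recent one; the `(year, quarter)` keys, the `"data"` and `"script"` fields, and the prompt wording are hypothetical. The output uses the JSON Lines `prompt`/`completion` record format that Amazon Bedrock accepts for fine-tuning text models such as Meta Llama 2.

```python
import json


def split_holdout(transcripts):
    """Sort quarters chronologically and hold out the most recent one.

    `transcripts` maps a (year, quarter) tuple to a dict with hypothetical
    keys "data" (financial highlights) and "script" (the call transcript).
    """
    quarters = sorted(transcripts)  # tuples sort by year, then quarter
    train_keys, test_key = quarters[:-1], quarters[-1]
    return [transcripts[k] for k in train_keys], transcripts[test_key]


def to_finetuning_jsonl(records):
    """Serialize records as prompt/completion JSON Lines for fine-tuning."""
    lines = []
    for rec in records:
        prompt = (
            "Write an earnings call script based on the following "
            f"financial data:\n{rec['data']}"
        )
        # json.dumps escapes embedded newlines, so each record stays on one line.
        lines.append(json.dumps({"prompt": prompt, "completion": rec["script"]}))
    return "\n".join(lines)
```

The resulting training file would then be uploaded to Amazon S3 and referenced from the model customization job. The held-out quarter is never written to the training file, so it remains a fair ground truth for both methods.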

We evaluate the earnings call transcripts generated by both methods in two ways:

Evaluation by a human reviewer

Evaluation comparing three variations using an LLM (Anthropic Claude 3 Sonnet)

A human reviewer evaluated completeness, the presence of hallucinations, writing style, ease of use, and the implementation and maintenance cost of each method.
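The review described here was manual. As a hypothetical aid for the completeness dimension only, a reviewer could pre-screen drafts with a keyword checklist like the sketch below. The topic list and regular expressions are assumptions drawn from the metrics mentioned earlier, not the reviewer's actual criteria, and the check cannot detect hallucinated figures.

```python
import re

# Hypothetical checklist of topics a complete script should mention.
KEY_TOPICS = {
    "revenue": r"\brevenue\b",
    "net income": r"\bnet income\b",
    "earnings per share": r"\b(earnings per share|EPS)\b",
    "margin": r"\bmargins?\b",
    "cash flow": r"\bcash flow\b",
    "guidance": r"\b(guidance|outlook)\b",
}


def completeness(script):
    """Return the fraction of key topics mentioned and the list of missing ones."""
    missing = [
        name
        for name, pattern in KEY_TOPICS.items()
        if not re.search(pattern, script, flags=re.IGNORECASE)
    ]
    return 1 - len(missing) / len(KEY_TOPICS), missing


score, missing = completeness("Revenue grew 12% and EPS was $1.10. Our outlook remains strong.")
print(score, missing)  # 0.5 ['net income', 'margin', 'cash flow']
```

A keyword match only shows that a topic is mentioned, so the human judgment on accuracy and depth is still required.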

We tested the following variations:

Variation A: Earnings call transcript generated by few-shot learning with Anthropic Claude 3 Sonnet

Variation B: Earnings call transcript generated by the fine-tuned Meta Llama 2 70B model

Variation C: Actual earnings call transcript for the quarter
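The LLM-based comparison can be sketched as a prompt that presents the three variations side by side. The source does not publish its evaluation prompt; the function name, tag format, and criteria below are hypothetical. This sketch uses neutral labels so the judge model is not told which transcript is the actual one, which is a design choice of the sketch rather than something the source specifies.

```python
def build_comparison_prompt(variations, criteria):
    """Build a prompt asking an LLM judge to compare labeled transcripts.

    `variations` maps a neutral label (e.g. "A") to transcript text, and
    `criteria` lists the dimensions to compare. Both are illustrative.
    """
    sections = [
        f'<transcript id="{label}">\n{text}\n</transcript>'
        for label, text in sorted(variations.items())  # stable A, B, C order
    ]
    criteria_list = "\n".join(f"- {c}" for c in criteria)
    return (
        "Compare the following earnings call transcripts for the same quarter.\n\n"
        + "\n\n".join(sections)
        + "\n\nFor each transcript, comment on:\n"
        + criteria_list
        + "\n\nThen summarize the key similarities and differences between them."
    )
```

The resulting string can be sent to Anthropic Claude 3 Sonnet on Amazon Bedrock as the user message of a Messages API request.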

The evaluations showed that, although the key financial points were similar across the variations, they differed in the depth of detail provided and in their narrative and commentary style.

Generating high-quality drafts of earnings call scripts with LLMs is a promising approach that can streamline the process for companies. Both methods, prompt engineering and fine-tuning, produced scripts that cover key financial metrics, business updates, and future guidance. Each method has its own trade-offs in completeness, hallucinations, writing style, ease of implementation, and cost, which companies should weigh against their specific needs and priorities.

via: MiMub in Spanish