To strengthen its artificial intelligence and natural language processing capabilities, Salesforce has launched a series of initiatives focused on optimizing large language models (LLMs). The effort is led by the Model Serving team and involves integrating advanced serving solutions and collaborating with technology providers to extend what AI can do in business applications.

The team’s scope goes beyond conventional machine learning models to include generative AI, speech recognition, and computer vision. One of its core objectives is to manage the full model life cycle: gathering requirements, then optimizing and scaling the AI models developed by the company’s data scientists. Throughout, the team prioritizes minimizing latency and maximizing performance, especially when deploying these models across multiple regions on Amazon Web Services (AWS).

However, Salesforce faces several challenges in this work. The main one is balancing latency and performance without sacrificing cost efficiency, a crucial concern in a business environment that demands quick and accurate responses. Security and the protection of customer data are equally essential to the success of these initiatives.
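
The trade-off described above can be made concrete with a minimal sketch: given a few candidate deployment options, pick the cheapest one that still meets a latency target and a throughput floor. The option names, the figures, and the `cheapest_option` helper are hypothetical placeholders for illustration only; they are not Salesforce measurements or part of any AWS API.

```python
from dataclasses import dataclass
from typing import Iterable, Optional


@dataclass(frozen=True)
class DeploymentOption:
    """A candidate hosting configuration (all values are made-up placeholders)."""
    name: str
    p95_latency_ms: float
    requests_per_second: float
    hourly_cost_usd: float


def cheapest_option(
    options: Iterable[DeploymentOption],
    max_latency_ms: float,
    min_rps: float,
) -> Optional[DeploymentOption]:
    """Return the lowest-cost option that meets both targets, or None."""
    eligible = [
        o for o in options
        if o.p95_latency_ms <= max_latency_ms and o.requests_per_second >= min_rps
    ]
    return min(eligible, key=lambda o: o.hourly_cost_usd, default=None)


# Hypothetical candidates: faster options cost more per hour.
options = [
    DeploymentOption("small-gpu", p95_latency_ms=180.0, requests_per_second=40.0, hourly_cost_usd=1.2),
    DeploymentOption("large-gpu", p95_latency_ms=90.0, requests_per_second=120.0, hourly_cost_usd=4.1),
    DeploymentOption("multi-gpu", p95_latency_ms=60.0, requests_per_second=300.0, hourly_cost_usd=12.0),
]

choice = cheapest_option(options, max_latency_ms=100.0, min_rps=100.0)
print(choice.name if choice else "no option meets the targets")
```

With these placeholder numbers, the fastest option also meets the targets but costs roughly three times as much, which is the kind of overspend a cost-aware selection is meant to avoid.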

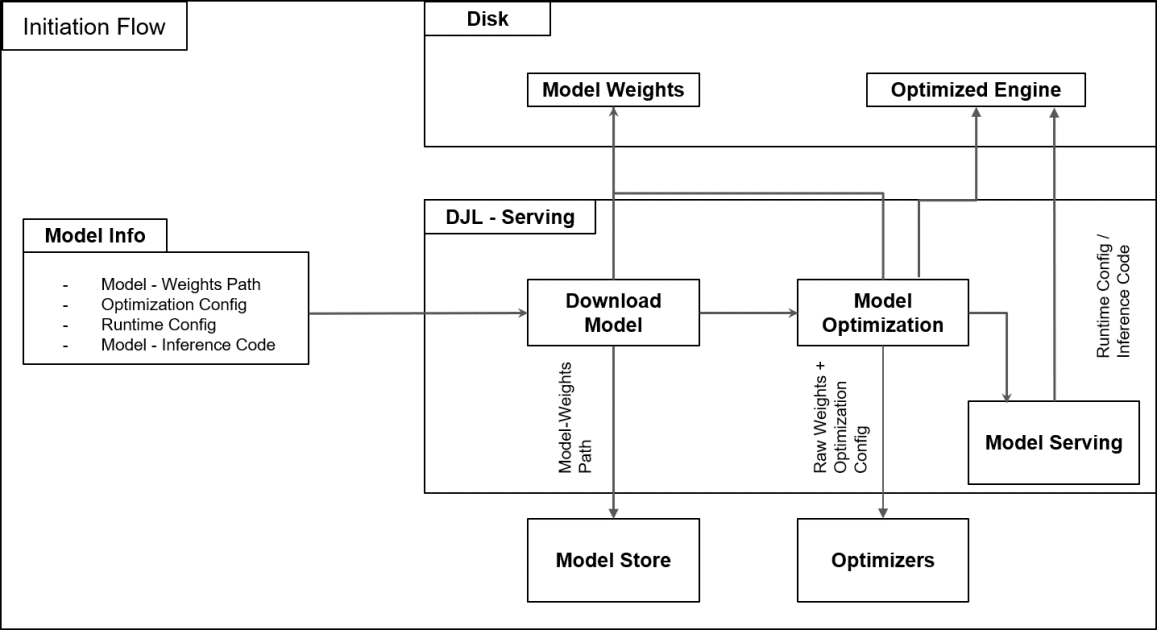

To address these challenges, the team has designed a hosting framework on AWS that simplifies model life cycle management. Using Amazon SageMaker AI, it has implemented tooling for distributed inference and multi-model deployments, which helps avoid memory bottlenecks and reduce hardware costs. SageMaker AI also provides deep learning containers that accelerate development and deployment, allowing engineers to focus on model optimization rather than infrastructure configuration.
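
To illustrate why hosting several models on shared hardware can lower costs, here is a toy sketch that packs models onto instances by memory footprint using a first-fit decreasing heuristic. It is an assumption-laden illustration, not how SageMaker AI or Salesforce actually places models; the model names, memory figures, and the `pack_models` helper are all hypothetical.

```python
from typing import Dict, List


def pack_models(model_memory_gb: Dict[str, float], instance_memory_gb: float) -> List[List[str]]:
    """Group models onto as few instances as a first-fit decreasing pass finds.

    Models are placed largest first; each goes on the first instance that
    still has enough free accelerator memory, or on a new instance otherwise.
    """
    instances: List[List[str]] = []  # model names per instance
    free: List[float] = []           # remaining memory per instance

    by_size = sorted(model_memory_gb.items(), key=lambda kv: kv[1], reverse=True)
    for name, need in by_size:
        if need > instance_memory_gb:
            raise ValueError(f"{name} needs {need} GB, more than one instance provides")
        for i, remaining in enumerate(free):
            if need <= remaining:
                instances[i].append(name)
                free[i] -= need
                break
        else:
            # No existing instance has room, so start a new one.
            instances.append([name])
            free.append(instance_memory_gb - need)
    return instances


# Hypothetical memory footprints in GB, on hypothetical 24 GB instances.
models = {"summarizer": 14.0, "classifier": 4.0, "embedder": 6.0, "codegen": 18.0}
placement = pack_models(models, instance_memory_gb=24.0)
print(placement)
```

In this example the four models fit on two shared instances instead of four dedicated ones. A real placement would also need to account for traffic, latency targets, and per-model scaling, which this sketch ignores.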

Furthermore, Salesforce has adopted best practices for deployment on SageMaker AI, optimizing GPU usage and improving memory allocation. This results in efficient and rapid deployment of models that meet high availability and low latency standards.

Through a modular approach, the team ensures that improvements in one project do not interfere with other ongoing developments. They are also researching new technologies and optimization techniques to improve cost and energy efficiency. Ongoing collaborations with the open-source community and cloud providers like AWS are crucial for incorporating innovations into their processes.

On security, Salesforce maintains rigorous standards from the start of the development cycle, implementing encryption mechanisms and access controls to protect sensitive information. Through automated testing, the team ensures that deployment speed does not come at the expense of data security.

As demand for generative AI grows at Salesforce, the team is committed to continuously improving its deployment infrastructure and to exploring new methodologies and technologies that keep it at the forefront of a fast-moving field.

Source: MiMub in Spanish