Here’s the translation into American English:

—

Generative artificial intelligence is rapidly transforming various industries around the world, allowing companies to deliver exceptional experiences to their customers and streamline processes. However, this technological revolution raises significant questions about the responsible use of these tools.

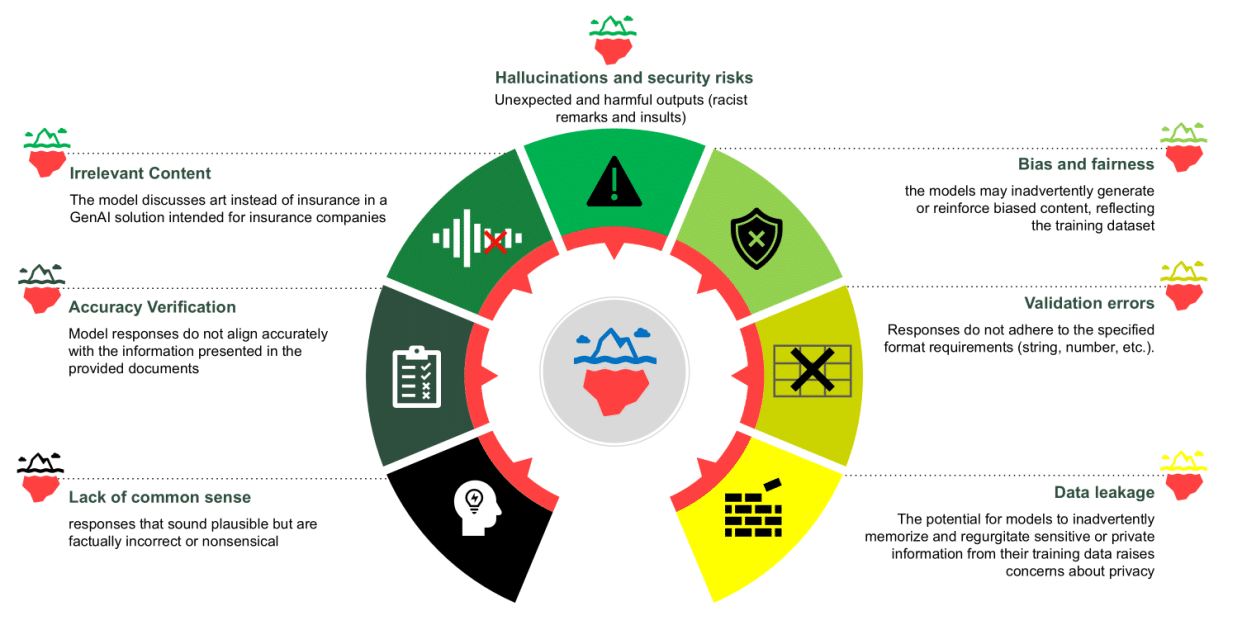

Although responsible artificial intelligence has been a central focus in the sector for the past decade, the increasing complexity of generative models brings unique challenges. Risks such as “hallucinations,” lack of control, intellectual property violations, and unintended harmful behaviors require proactive attention. To maximize the potential of generative AI and minimize these risks, it is essential to adopt comprehensive mitigation techniques and controls in its development.

A key methodology in this context is “red teaming,” which simulates adverse conditions to assess systems. In the realm of generative AI, this involves subjecting models to rigorous testing to identify weaknesses and evaluate their resilience, helping to develop functional, safe, and reliable systems. Integrating red teaming into the AI lifecycle allows for anticipating threats and fostering trust in the solutions offered.

Generative AI systems, while revolutionary, present security challenges that require specialized approaches. The inherent vulnerabilities of these models include generating hallucinated responses, inappropriate content, and unauthorized disclosure of sensitive data. Such risks can be exploited by adversaries through various techniques, such as command injection.

Data Reply has partnered with AWS to provide support and best practices in integrating responsible AI and red teaming into organizations’ workflows. This collaboration aims to mitigate unexpected risks, comply with emerging regulations, and reduce the likelihood of data breaches or malicious use of the models.

To tackle these challenges, Data Reply has developed the Red Teaming Playground, a testing environment that combines various open-source tools with AWS services. This space allows AI creators to explore scenarios and evaluate model reactions under adverse conditions, a crucial approach for identifying risks and enhancing the robustness and security of generative AI systems.

An exemplary use case is the mental health triage assistant, which requires careful handling of sensitive topics. By clearly defining the use case and establishing quality expectations, the model can be guided to respond, redirect, or provide safe answers appropriately.

Continuous improvement in implementing responsible AI policies is fundamental. The collaboration between Data Reply and AWS focuses on industrializing efforts that span from fairness checks to security testing, helping organizations stay ahead of emerging threats and evolving standards.

via: MiMub in Spanish