In recent years, the healthcare and life sciences sectors have undergone a significant transformation driven by generative artificial intelligence agents. These tools are changing how drugs are discovered, how medical devices are manufactured, and how patient care is delivered. In highly regulated environments such as the pharmaceutical industry, however, compliance with Good Practice (GxP) guidelines is crucial. These include Good Clinical Practice (GCP), Good Laboratory Practice (GLP), and Good Manufacturing Practice (GMP), which together help ensure product quality and patient safety.

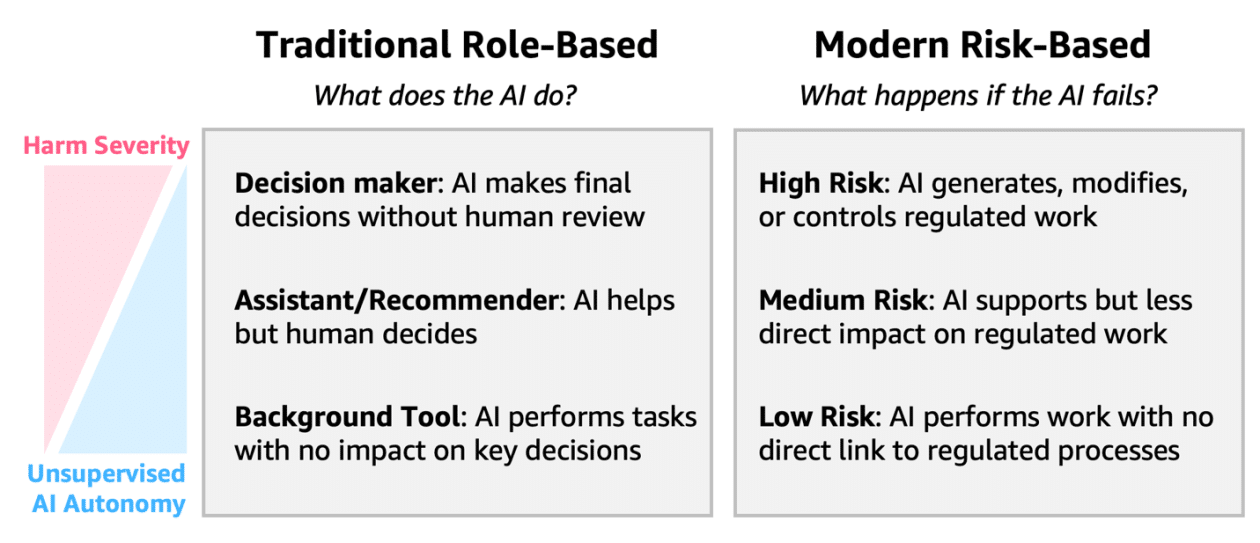

Developing artificial intelligence agents in these contexts requires close attention to the risk profile of each system. Generative AI presents unique challenges, such as the need for explainability, uncertainty in outcomes, and continuous learning. These characteristics call for comprehensive risk assessments rather than the simplified validation approaches used traditionally. A mismatch between traditional GxP compliance methods and modern AI capabilities could hinder implementation, drive up validation costs, and stifle innovation, limiting the potential benefits for both product development and patient care.

The regulatory framework for GxP compliance is evolving to address these characteristics of artificial intelligence. Traditional Computer System Validation (CSV) approaches are being complemented by Computer Software Assurance (CSA), a risk-based methodology that scales validation effort to the complexity and actual impact of each tool.

Implementing effective controls for AI systems in GxP contexts requires assessing risk based not only on a system's technological characteristics but also on the operational context in which it is used. FDA guidance recommends classifying risk according to three factors: the severity of potential harm, the likelihood of occurrence, and the detectability of failures. This framework lets organizations balance innovation with regulatory compliance by scaling validation effort to each system's risk level instead of applying a uniform approach to every implementation.
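The article does not say how the three factors are combined, so the following is a minimal sketch that swaps in a common FMEA-style convention: rate each factor from 1 to 5 and multiply them into a single score. The 1-5 scale, the tier cut-offs, and the `ASSURANCE_ACTIVITIES` mapping are all hypothetical assumptions; the activity labels loosely borrow the scripted and unscripted testing categories described in FDA's draft CSA guidance, but the tier-to-activity mapping itself is illustrative only.

```python
from enum import Enum


class RiskTier(Enum):
    LOW = "low"
    MEDIUM = "medium"
    HIGH = "high"


# Hypothetical mapping from tier to assurance activities (an assumption, not
# a regulatory requirement): higher risk gets more rigorous, documented testing.
ASSURANCE_ACTIVITIES = {
    RiskTier.HIGH: ["robust scripted testing"],
    RiskTier.MEDIUM: ["limited scripted testing", "exploratory testing"],
    RiskTier.LOW: ["unscripted ad-hoc testing"],
}


def risk_score(severity: int, likelihood: int, detectability: int) -> int:
    """Multiply the three factors, each rated 1 (best) to 5 (worst).

    For detectability, 1 means a failure would almost certainly be caught
    and 5 means it would likely go unnoticed.
    """
    factors = (("severity", severity), ("likelihood", likelihood),
               ("detectability", detectability))
    for name, value in factors:
        if not 1 <= value <= 5:
            raise ValueError(f"{name} must be between 1 and 5, got {value}")
    return severity * likelihood * detectability


def risk_tier(score: int) -> RiskTier:
    """Bucket a 1-125 score into a tier using hypothetical cut-offs."""
    if score >= 60:
        return RiskTier.HIGH
    if score >= 20:
        return RiskTier.MEDIUM
    return RiskTier.LOW


if __name__ == "__main__":
    # Hypothetical agent: serious potential harm, moderate likelihood,
    # and failures that would be hard to spot in review.
    score = risk_score(severity=5, likelihood=3, detectability=4)
    tier = risk_tier(score)
    print(score, tier.value, ASSURANCE_ACTIVITIES[tier])
```

Multiplying the factors means a system rated worst on any one factor can still land in a low tier if the other two are favorable. Organizations that prefer to treat high severity as decisive on its own could add an override rule instead.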

In this context, Amazon Web Services (AWS) has emerged as a key ally in meeting regulatory requirements. With qualified infrastructure and a broad range of services, AWS helps organizations maintain an organized, consistent approach to compliance, offering well-documented procedures for user management and role configuration that strengthen access management and record-keeping.
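To make role configuration and access management concrete, here is a minimal sketch of a least-privilege IAM policy for a role that an AI agent might assume, built as a Python dict and serialized to JSON. The source does not name a specific model service: the use of Amazon Bedrock and the model ARN are assumptions, and `EXAMPLE-MODEL-ID` is a hypothetical placeholder. The second statement shows one way to keep the agent's role from disabling the CloudTrail audit trail that supports record-keeping.

```python
import json

# Hypothetical placeholder: substitute your own region and approved model ID.
APPROVED_MODEL_ARN = "arn:aws:bedrock:us-east-1::foundation-model/EXAMPLE-MODEL-ID"


def build_agent_policy(model_arn: str) -> dict:
    """Return a least-privilege IAM policy document for an AI agent's role (sketch)."""
    return {
        "Version": "2012-10-17",
        "Statement": [
            {
                # The agent may invoke only the single model approved for its intended use.
                "Sid": "InvokeApprovedModelOnly",
                "Effect": "Allow",
                "Action": ["bedrock:InvokeModel"],
                "Resource": [model_arn],
            },
            {
                # Explicit deny overrides any Allow granted by other policies
                # attached to the same role, so the audit trail stays on.
                "Sid": "ProtectAuditTrail",
                "Effect": "Deny",
                "Action": [
                    "cloudtrail:StopLogging",
                    "cloudtrail:DeleteTrail",
                    "cloudtrail:UpdateTrail",
                ],
                "Resource": "*",
            },
        ],
    }


if __name__ == "__main__":
    print(json.dumps(build_agent_policy(APPROVED_MODEL_ARN), indent=2))
```

The audit-trail protection is written as an explicit Deny rather than relying on the absence of an Allow, because an explicit Deny still holds if someone later attaches a broader policy to the same role.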

To implement AI agents properly in GxP environments, it is essential to understand the shared responsibility model between organizations and AWS. AWS is responsible for protecting the infrastructure that supports its cloud services, while organizations are responsible for building effective solutions on that infrastructure that comply with current regulations.

Establishing well-defined acceptance criteria and validation protocols based on the intended use of each AI system is crucial. As the adoption of artificial intelligence agents progresses, organizations must weigh both the associated risk and the operational context, ensuring that their solutions meet GxP standards while still fostering innovation in the healthcare sector.
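As an illustration of tying acceptance criteria to a validation protocol, the following minimal sketch records each criterion with a predefined threshold and checks observed metrics against all of them. The metric names (`answer_accuracy`, `unsupported_claim_rate`) and thresholds are hypothetical; in practice they would be derived from the system's intended use and documented before testing begins.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class AcceptanceCriterion:
    """One predefined, documented pass/fail rule for a validation protocol."""
    name: str
    threshold: float
    higher_is_better: bool = True

    def passes(self, observed: float) -> bool:
        if self.higher_is_better:
            return observed >= self.threshold
        return observed <= self.threshold


def evaluate_protocol(criteria, observed):
    """Check observed metrics against every criterion.

    Returns (overall_pass, per_criterion_results). A missing measurement is
    an error rather than a silent pass.
    """
    if not criteria:
        raise ValueError("a protocol needs at least one acceptance criterion")
    results = {}
    for criterion in criteria:
        if criterion.name not in observed:
            raise KeyError(f"missing measurement for {criterion.name}")
        results[criterion.name] = criterion.passes(observed[criterion.name])
    return all(results.values()), results


if __name__ == "__main__":
    # Hypothetical criteria for a hypothetical question-answering agent.
    criteria = [
        AcceptanceCriterion("answer_accuracy", 0.95),
        AcceptanceCriterion("unsupported_claim_rate", 0.01, higher_is_better=False),
    ]
    print(evaluate_protocol(criteria, {"answer_accuracy": 0.97,
                                       "unsupported_claim_rate": 0.02}))
```

Rejecting an empty protocol and missing measurements keeps the check from passing vacuously, which matters when the result is used as documented evidence.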

Source: MiMub in Spanish