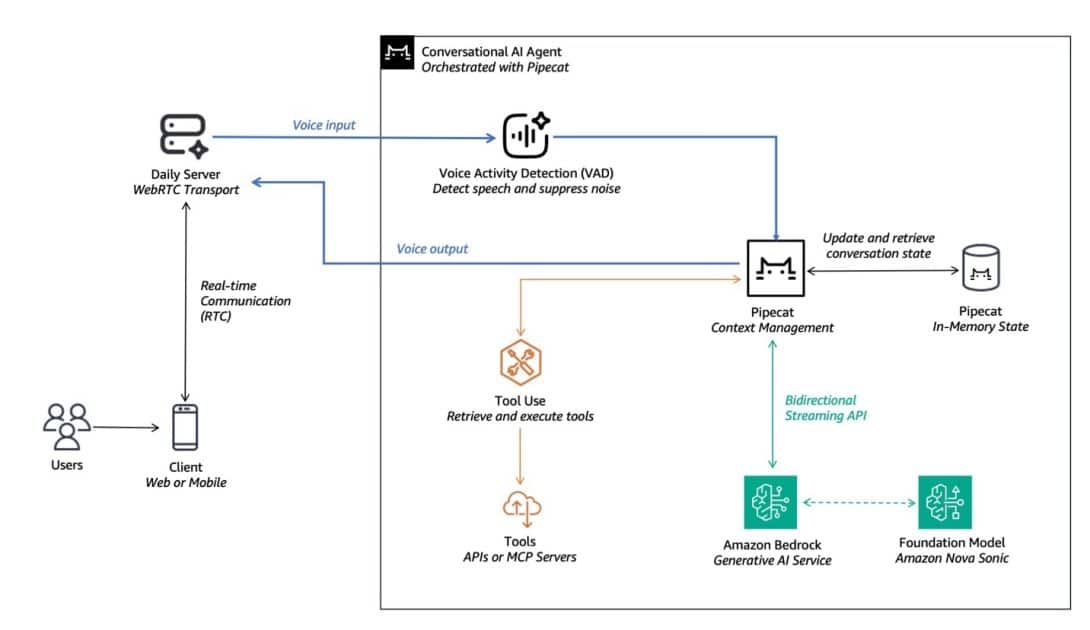

Voice AI is changing how we interact with technology, enabling smoother, more natural conversations. A recently introduced approach combines Amazon Bedrock with Pipecat, an open-source framework for building voice and multimodal conversational agents, with the aim of simplifying the development of AI applications that mimic human communication.

The first post in the series presented common use cases for voice agents and explained the cascaded models approach, in which separate components (typically speech recognition, a language model, and text-to-speech) are orchestrated in sequence to build a voice agent. The second post focuses on Amazon Nova Sonic, a speech-to-speech model that enables real-time, human-like interactions with significantly lower latency.
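
To make the cascaded idea concrete, here is a minimal sketch in plain Python. It does not use the Pipecat or Amazon Bedrock APIs; the stage functions (`speech_to_text`, `generate_reply`, `text_to_speech`) are hypothetical stand-ins that only pass strings and bytes along, so the example shows the orchestration pattern rather than a real implementation.

```python
from typing import Any, Callable, List

# Hypothetical stand-ins for real services. A production agent would call
# a speech recognizer, a language model, and a speech synthesizer here.
def speech_to_text(audio: bytes) -> str:
    # Assumption: the "audio" is just UTF-8 text, to keep the sketch runnable.
    return audio.decode("utf-8")

def generate_reply(text: str) -> str:
    # Placeholder for the language model step.
    return f"You said: {text}"

def text_to_speech(text: str) -> bytes:
    # Placeholder for synthesis: encode the reply back to bytes.
    return text.encode("utf-8")

def run_cascade(audio_in: bytes, stages: List[Callable[[Any], Any]]) -> Any:
    """Feed the output of each stage into the next one, in order."""
    value: Any = audio_in
    for stage in stages:
        value = stage(value)
    return value

# The cascade is an ordered list, so a stage can be swapped independently.
CASCADE = [speech_to_text, generate_reply, text_to_speech]

reply_audio = run_cascade(b"book a checkup", CASCADE)
```

Because each stage is a separate function, any one of them can be replaced without touching the others, which is the flexibility the cascaded approach offers. The trade-off is that every hop in the chain adds latency.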

Nova Sonic combines automatic speech recognition, natural language processing, and text-to-speech in a single model, which makes conversations smoother and more context-aware. It also supports tool use and information retrieval from Amazon Bedrock Knowledge Bases, simplifying development and improving efficiency in conversational applications.
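
Tool use generally follows a request-and-dispatch pattern: the model asks for a named tool with JSON arguments, and the application runs the matching function and returns the result. The sketch below illustrates that pattern in plain Python. It is not the Nova Sonic or Pipecat API; the `tool` decorator, `handle_tool_request`, and the `schedule_appointment` tool are all hypothetical names chosen for illustration.

```python
import json
from typing import Any, Callable, Dict

# Hypothetical registry mapping tool names to Python functions.
TOOLS: Dict[str, Callable[..., Dict[str, Any]]] = {}

def tool(fn: Callable[..., Dict[str, Any]]) -> Callable[..., Dict[str, Any]]:
    """Register a function so it can be looked up by name."""
    TOOLS[fn.__name__] = fn
    return fn

@tool
def schedule_appointment(date: str, time: str) -> Dict[str, Any]:
    # Assumption: a real tool would call a calendar or booking service.
    return {"status": "booked", "date": date, "time": time}

def handle_tool_request(name: str, arguments_json: str) -> str:
    """Run the requested tool and return its result as a JSON string."""
    fn = TOOLS.get(name)
    if fn is None:
        return json.dumps({"error": f"unknown tool: {name}"})
    arguments = json.loads(arguments_json)
    return json.dumps(fn(**arguments))

result = handle_tool_request(
    "schedule_appointment", '{"date": "2025-07-01", "time": "09:30"}'
)
```

Returning an error payload for an unknown tool, rather than raising, lets the conversation continue when the model requests something the application does not provide.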

The collaboration between Amazon Web Services (AWS) and the Pipecat team has been essential to integrating these capabilities, making it easier for developers to build intelligent voice systems. Kwindla Hultman Kramer, CEO of Daily.co and creator of Pipecat, has described this advancement as a significant milestone for real-time voice AI, because agents can not only understand questions but also take meaningful actions, such as scheduling appointments.

For interested developers, code examples and implementation guides are available to help them get started with Amazon Nova Sonic and Pipecat. The setup can be customized: developers can adjust the conversation logic and choose the model that best fits each project's needs.

A recent practical example showcased an intelligent health assistant interacting in real time, illustrating how broadly voice AI can be applied in everyday life.

In summary, combining Pipecat with Amazon Bedrock models makes it easier to build intelligent voice agents. The posts in the series cover two fundamental approaches to developing these agents, cascaded models and a unified speech-to-speech model, and emphasize how a simpler model architecture can lead to more effective interactions and more robust AI applications. Conversational AI looks set to keep advancing and to expand into more sectors.

Referrer: MiMub in Spanish