Generative AI models have opened up new possibilities for automating and improving software development workflows. In particular, their ability to produce code from natural language prompts is changing how developers and DevOps professionals approach their work and can make them considerably more efficient. This article gives an overview of how to use large language models (LLMs) on Amazon Bedrock to assist developers at various stages of the software development lifecycle (SDLC).

Amazon Bedrock is a fully managed service that offers a selection of high-performing foundation models from leading AI companies, such as AI21 Labs, Anthropic, Cohere, Meta, Mistral AI, Stability AI, and Amazon itself, through a single API. It also provides a broad set of capabilities for building generative AI applications with security, privacy, and responsible AI practices.
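To make the "single API" point concrete, here is a minimal sketch that sends one prompt through the Bedrock runtime Converse API using boto3. The model ID, Region, and the helper names `make_client` and `ask_model` are assumptions chosen for illustration; the models actually available depend on your account and Region.

```python
from typing import Any

# Assumed model ID; check which models are enabled in your account and Region.
DEFAULT_MODEL_ID = "anthropic.claude-3-sonnet-20240229-v1:0"


def make_client(region_name: str = "us-east-1") -> Any:
    """Create a Bedrock runtime client (requires boto3 and AWS credentials)."""
    import boto3  # imported lazily so the rest of the sketch runs without it

    return boto3.client("bedrock-runtime", region_name=region_name)


def ask_model(client: Any, prompt: str, model_id: str = DEFAULT_MODEL_ID,
              max_tokens: int = 512) -> str:
    """Send one user message through the Converse API and return the text reply."""
    response = client.converse(
        modelId=model_id,
        messages=[{"role": "user", "content": [{"text": prompt}]}],
        inferenceConfig={"maxTokens": max_tokens, "temperature": 0.2},
    )
    # Join every text part of the assistant message into one string.
    parts = response["output"]["message"]["content"]
    return "".join(part.get("text", "") for part in parts)
```

With credentials configured, a call might look like `ask_model(make_client(), "Write a Python function that reverses a string.")`. Because the request shape stays the same across providers, trying another model should only require passing a different `model_id`.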

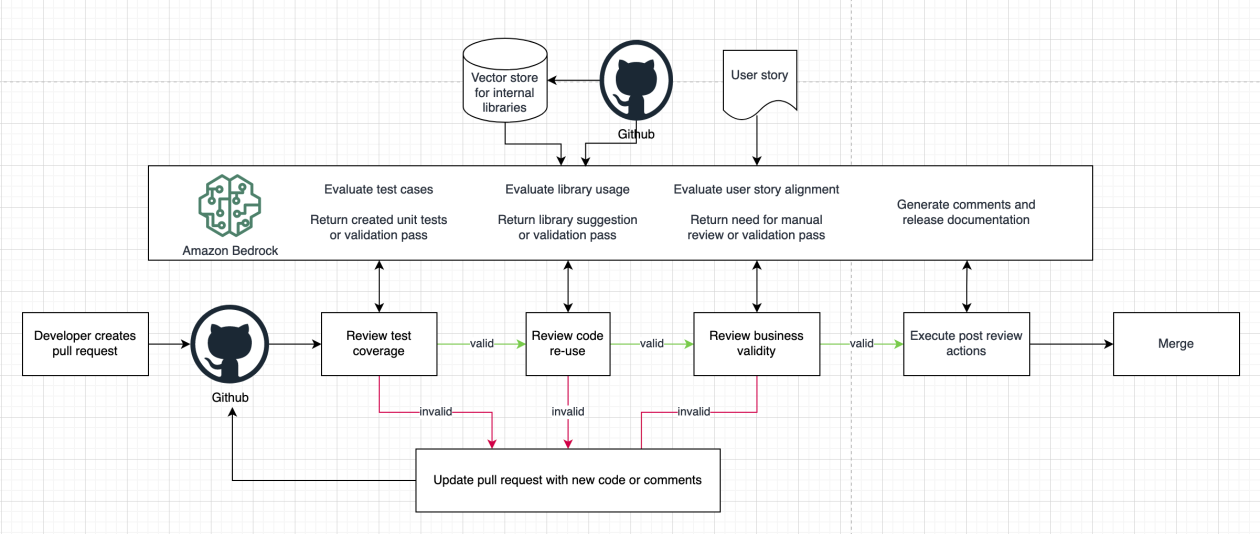

The architecture proposed in this article integrates generative AI into key areas of the SDLC to improve development efficiency and speed. The main goal is for developers to build their own systems for augmenting, writing, and auditing code using models in Amazon Bedrock, rather than relying on pre-configured coding assistants. Among other topics, the article covers:

– A use case for coding assistants to help developers write code faster through suggestions.

– How to use the code understanding capabilities of LLMs to generate insights and recommendations.

– A use case for automatic application generation to create functional code and automatically deploy changes in a work environment.

Several technical choices matter when selecting the model and strategy for each stage. The choice of foundation model is essential, because each model has been trained on a different data corpus, which affects its performance on different tasks. Models like Anthropic’s Claude 3 in Amazon Bedrock can write code in many common programming languages out of the box, while other models may require additional customization, which adds complexity and technical effort.

Coding assistants are a very popular use case with numerous examples. AWS offers several services to assist developers, either through inline completion tools like Amazon CodeWhisperer or through natural language interaction with Amazon Q. A key advantage of an assistant over inline generation is that it can start new projects from simple descriptions, providing sample code and recommendations on which frameworks to use.
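As a rough illustration of the assistant pattern, the sketch below builds a prompt from a short project description and pulls the sample code out of a Markdown-formatted reply. The prompt wording and the helper names `build_scaffold_prompt` and `extract_code_blocks` are hypothetical; the reply itself would come from whichever model you call in Amazon Bedrock.

```python
import re
from typing import List, Tuple

FENCE = "`" * 3  # Markdown code fence marker
# Matches a fenced block: optional language tag, then the code up to the closing fence.
CODE_BLOCK = re.compile(FENCE + r"([\w+-]*)\n(.*?)" + FENCE, re.DOTALL)


def build_scaffold_prompt(description: str, language: str = "Python") -> str:
    """Turn a plain-language project description into an assistant prompt."""
    return (
        f"You are a coding assistant. The developer wants to start a new "
        f"{language} project.\n"
        f"Project description: {description}\n"
        "Recommend suitable frameworks, then provide sample code "
        "in fenced code blocks."
    )


def extract_code_blocks(reply: str) -> List[Tuple[str, str]]:
    """Return (language, code) pairs for every fenced block in the reply."""
    return [(lang, code.strip()) for lang, code in CODE_BLOCK.findall(reply)]
```

Separating prompt construction from reply parsing keeps both pieces easy to test without calling a model, and the extracted blocks can then be written to files to bootstrap the project.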

LLM code understanding can increase the productivity of individual developers by inferring the meaning and functionality of code, which improves the reliability, efficiency, security, and speed of the development process. It makes documentation, code review, and the application of best practices more effective, and it eases the onboarding of new developers into the team.
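One way to apply code understanding in practice is to split a file into functions and ask the model about each one separately. The following sketch does this for Python sources with the standard `ast` module; the review questions and the helper names `function_sources` and `build_review_prompt` are assumptions for illustration, not a prescribed format.

```python
import ast
from typing import Dict


def function_sources(source: str) -> Dict[str, str]:
    """Map each top-level function name to its source text."""
    tree = ast.parse(source)
    return {
        node.name: ast.get_source_segment(source, node)
        for node in tree.body
        if isinstance(node, (ast.FunctionDef, ast.AsyncFunctionDef))
    }


def build_review_prompt(name: str, code: str) -> str:
    """Build a prompt asking the model to explain and review one function."""
    return (
        f"Review the function `{name}` below.\n"
        "1. Explain what it does in two sentences.\n"
        "2. List potential bugs or security issues.\n"
        "3. Suggest a docstring.\n\n"
        f"{code}"
    )
```

Sending one function per request keeps prompts small and makes it easier to attach each answer to the right place in a review or in generated documentation.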

Application generation can be extended to create complete implementations. In the traditional SDLC, a human gathers requirements, designs the application, writes the code, creates tests, and receives feedback. AI models can iterate on this cycle by feeding model outputs back in as inputs, producing functional applications from natural language prompts.
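The feedback loop described above can be sketched as a small generate, test, and retry cycle. Here `generate` stands in for any function that sends a prompt to a model and returns code (for example, a call to a model in Amazon Bedrock); it and the names `run_candidate` and `iterate` are hypothetical, and the use of `exec` is only acceptable inside a sandbox because the code comes from a model.

```python
import traceback
from typing import Callable, Optional, Tuple


def run_candidate(code: str, tests: str) -> Optional[str]:
    """Run generated code and its tests; return None on success or the error text."""
    namespace: dict = {}
    try:
        exec(code, namespace)   # NOTE: run untrusted model output only in a sandbox
        exec(tests, namespace)
    except Exception:
        return traceback.format_exc(limit=1)
    return None


def iterate(generate: Callable[[str], str], requirement: str, tests: str,
            max_rounds: int = 3) -> Tuple[Optional[str], int]:
    """Ask for code, test it, and feed failures back until the tests pass."""
    prompt = requirement
    for round_number in range(1, max_rounds + 1):
        code = generate(prompt)
        error = run_candidate(code, tests)
        if error is None:
            return code, round_number
        # Feed the failed attempt and its error back to the model as new input.
        prompt = (
            f"{requirement}\n\nYour previous attempt:\n{code}\n\n"
            f"It failed with:\n{error}\nReturn a corrected version."
        )
    return None, max_rounds
```

Capping the number of rounds keeps cost predictable, and returning `None` when the limit is reached leaves the decision about what to do next with a human.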

Lastly, it is important to keep the system modular and flexible so that it can adapt to rapid changes in technology. Taking advantage of the productivity gains offered by AI will be key to staying competitive in the near future.

via: MiMub in Spanish